Visualization¶

Concepts¶

IT Operations Analytics (ITOA) is a popular method for gathering various types of data from an IT infrastructure and analysing them according to user-defined metrics, with the primary purpose of recognising patterns and behaviours. The outcome allows IT managers to prevent bottlenecks, and to optimise and plan improvements to the infrastructure.

The ITOA Module¶

As its name implies, NetEye’s ITOA module supports the practice of gathering, processing, and analysing the full spectrum of operational data, ranging from raw data to technical details about your IT infrastructure, in order to guide decisions, understand resource utilization, and predict potential issues.

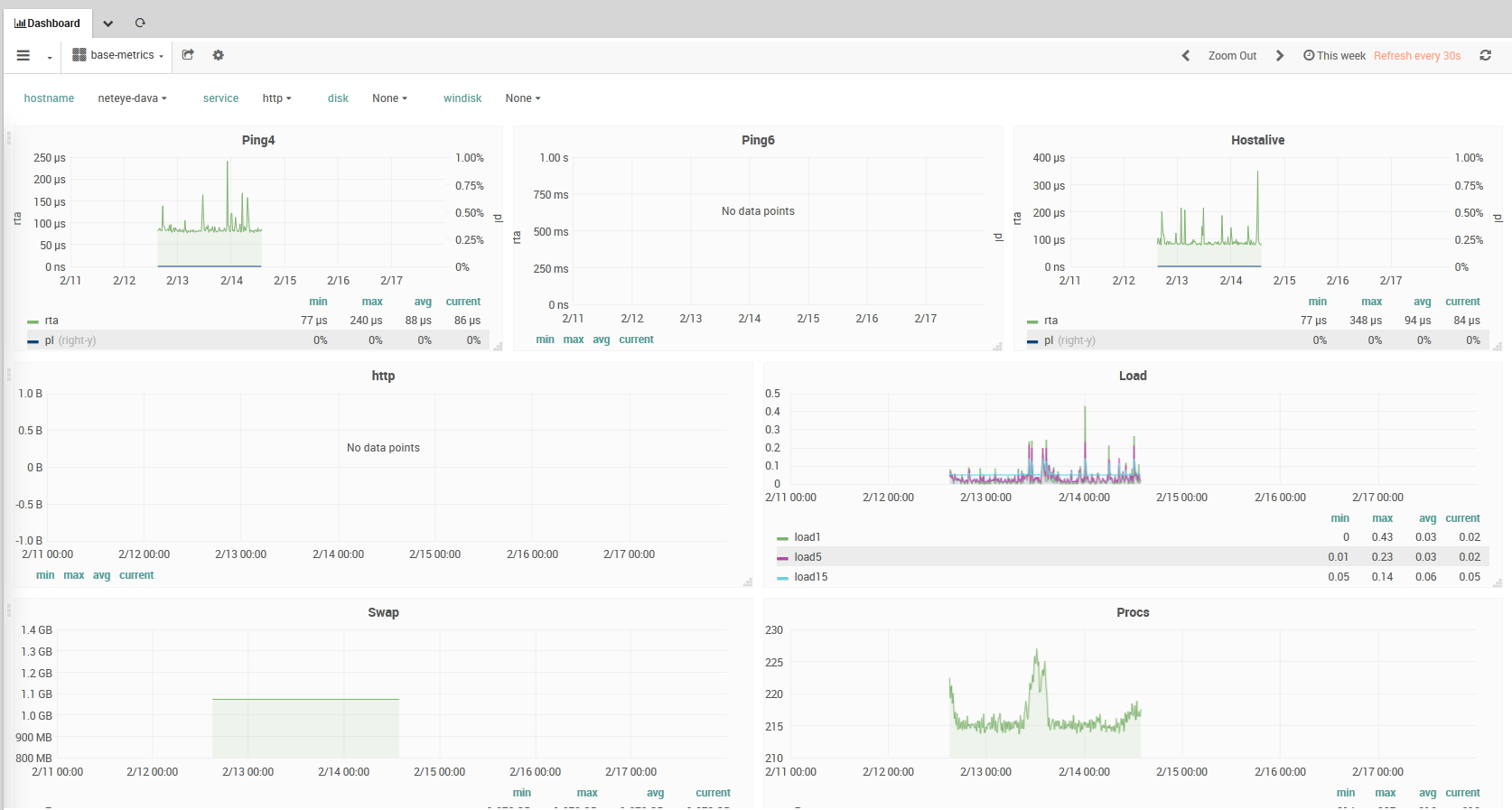

NetEye’s ITOA module provides the tools to move quickly from operational data to solutions for bottlenecks, and to improve performance and throughput. To this end, the module lets you collect, aggregate, and compute data from network interfaces, applications, and systems, analyse them, and finally present them in a visual form, typically dashboards like the one shown in Fig. 153.

The ITOA module is built around the following software:

InfluxDB, a time series database, used to store the data gathered

Grafana, a web application for analytics and visualisation of data, used to display the data in the form of dashboards

Telegraf, an agent to collect, process, and write metrics to InfluxDB

NATS, a message broker used in distributed systems, which is used to forward data collected from publishers (usually NetEye satellites) to subscribers (the NetEye node that collects data)

Icinga2, which interacts with several NetEye components but is considered here for its ability to write to InfluxDB the performance data (perfdata) and numerical datapoints gathered from the checks that it executes

Besides the above-mentioned software, actors involved in ITOA are: Users with roles, Data sources, Rows, Panels, and Dashboards.

While there is a large number of actors, their interaction is straightforward and can be envisioned as follows: Icinga and Telegraf pick up data about network traffic, applications, NetEye, and the system from the system itself (either a single node or a cluster), its satellites, and its agents, and send them, directly or through NATS, to InfluxDB. Users with appropriate privileges can build queries using either InfluxQL or Flux to pull data from InfluxDB and create Panels to be arranged within a Dashboard. The final result of this process is a Dashboard similar to the one shown in Fig. 153.

Fig. 153 An example Performance Data Dashboard¶

We suggest using the new Flux language to build queries and evaluate them against InfluxDB. Flux is a functional language alternative to InfluxQL, aimed at data query and analysis, that overcomes a number of InfluxQL limitations and adds various useful functions such as joins, pivot tables, histograms, and geo-temporal data, among others. This official comparison between InfluxQL and Flux shows the differences between the two approaches.

See also

Documentation resources about the ITOA components.

Users¶

A User in the ITOA module is associated with a named account. A user belongs to an Organization (the ITOA module currently supports only one Organization), and can be assigned different levels of privileges through roles.

User authentication for ITOA is integrated with general NetEye authentication. However, user permission management is not currently integrated and instead must be done within the ITOA module. When a non-administrative user accesses ITOA the first time, an account will be autogenerated with a default role of Viewer. The permissions found in will not be applied in ITOA. Instead, the administrator must explicitly set those permissions via , clicking on the user’s Login, and setting the Role for each Organization desired at the bottom of the panel.

Data Sources¶

Grafana supports many different storage backends for your time series data (Data Source). Each data source has a specific Query Editor that is customized for the features and capabilities that that particular data source exposes.

The following data sources are officially supported:

InfluxDB

Elasticsearch

JSON files

The query language and capabilities of each data source are obviously very different. You can combine data from multiple data sources in a single Dashboard, but each individual Panel is tied to a specific data source that belongs to a particular Organization. Thus you cannot mix data from multiple data sources in a single panel.

Note

Elasticsearch Data Sources are described in the SIEM module.

Rows¶

A Row is a logical divider within a Dashboard, and is used to group Panels together.

Rows are always 12 “units” wide. These units are automatically scaled dependent on the horizontal resolution of your browser. You can control the relative width of Panels within a row by setting their own width. We utilize a unit abstraction to ensure that Grafana will look great on all screens, both small and large.

Rows can be collapsed by clicking on the Row Title. If you save a Dashboard with a row collapsed, it will be saved in that state, and will not pre-load those graphs while the row remains collapsed.

Panels¶

The Panel is the basic visualization building block in the ITOA module. Each Panel provides a Query Editor (whose form is dependent on the Data Source selected in the panel) that allows you to extract the necessary data underlying the visualization that will be shown on the Panel.

There are a wide variety of styling and formatting options that each Panel exposes to allow you to create a great visual. Panels can be dragged and dropped and rearranged on the Dashboard, and can also be resized.

There are currently five Panel types: Graph, Singlestat, Dashlist, Table, and Text.

Panels like the Graph panel allow you to include as many metrics and series as you want. Other panels like Singlestat require a reduction of a single query into a single number. Dashlist and Text are special panels that do not connect to any Data Source.

Panels can be made more dynamic by utilizing Dashboard Templating variable strings within the panel configuration (including queries to your Data Source configured in the Query Editor). Utilize the Repeating Panel functionality to dynamically create or remove Panels based on the Templating Variables selected.

The time range on panels is by default the range set in the Dashboard time picker, although this can be overridden by utilizing panel-specific time settings.

Panels (or an entire Dashboard) can be easily shared in a variety of ways. For instance, you can send a URL to someone who has a user account on your NetEye system. You can also use the Snapshot feature to encode all the data currently being viewed into a static and interactive JSON document. It’s like emailing a screenshot, but also so much better because it will be interactive!

Dashboards¶

The Dashboard is where it all comes together. Dashboards can be thought of as a set of one or more panels organized and arranged into one or more rows.

The time period for all panels in the dashboard can be controlled simultaneously by changing the dashboard time picker in the upper right of the Dashboard. Similarly, dashboards can utilize Templating to make them more dynamic and interactive.

Dashboards can even be tagged, and the dashboard picker provides quick, searchable access to all dashboards in a particular Organization.

Telegraf Metrics in NetEye¶

Telegraf is an agent written in Go for collecting, processing, aggregating, and writing metrics. To use it in NetEye you will need to install the telegraf package using yum.

Telegraf is entirely plugin-driven and has 4 distinct plugin types:

Input Plugins: Collect metrics from the system, services, or 3rd party APIs

Processor Plugins: Transform, decorate, and/or filter metrics

Aggregator Plugins: Create aggregate metrics (e.g. mean, min, max, quantiles, etc.)

Output Plugins: Write metrics to various destinations

For more information about Telegraf please refer to the official documentation on GitHub.
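As a rough illustration of how the four plugin types relate to each other, the following Python sketch chains one stand-in function per type. It is not Telegraf code: the function names, the sample cpu values, and the example-host tag are all invented for illustration, and the real agent runs its plugins on intervals rather than in a single pass.

```python
from statistics import mean


def input_plugin():
    # Input: collect raw metrics (hard-coded sample values, not real readings).
    return [{"name": "cpu", "tags": {}, "fields": {"usage": value}}
            for value in (10.0, 20.0, 30.0)]


def processor_plugin(metrics):
    # Processor: decorate every metric with an extra (hypothetical) host tag.
    return [{**m, "tags": {**m["tags"], "host": "example-host"}} for m in metrics]


def aggregator_plugin(metrics):
    # Aggregator: reduce the collected values to mean, min, and max.
    usages = [m["fields"]["usage"] for m in metrics]
    return {
        "name": "cpu",
        "tags": metrics[0]["tags"],
        "fields": {
            "usage_mean": mean(usages),
            "usage_min": min(usages),
            "usage_max": max(usages),
        },
    }


def output_plugin(metric, sink):
    # Output: write the metric to a destination (here, a plain list).
    sink.append(metric)


sink = []
output_plugin(aggregator_plugin(processor_plugin(input_plugin())), sink)
print(sink[0])
```

The point of the sketch is only the ordering: inputs feed processors, processors feed aggregators, and outputs receive whatever is left at the end of the chain.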

Configuring User Permissions¶

User permissions in the ITOA module can be managed by configuring and assigning Roles in NetEye.

The ITOA Module can be accessed directly from the NetEye GUI (within the ITOA menu) using Single Sign On, provided the logged-in user has permission to access it (see below). The first time a user accesses ITOA, that user is created inside ITOA with the ITOA permissions initialized.

Note

The ITOA menu entry will not be visible to users who do not have any of the listed Grafana Organization Roles (i.e., Admin, Editor, or Viewer) in NetEye.

User Management¶

In the ITOA Module, each Role can have one assigned Organization and a respective Organization Role (one of Admin, Editor, or Viewer). Optionally, a list of Teams belonging to the Organization can also be specified.

You can refer to the official Grafana docs to learn more about the user management model of Grafana with Organizations and related Permissions.

If a user belongs to more than one Role within different Organizations, they will be able to access each Organization. If a user belongs to more than one Role within the same Organization but different Organization Roles, they will be assigned the most permissive Organization Role ( Admin >> Editor >> Viewer ).

Example: For a Role in NetEye with the ability to edit, delete or create dashboards in the Grafana “Main Org.”, the Organization “Main Org.” must be configured with either the “Editor” or the “Admin” Organization Role.
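The precedence rule for a user who holds several Roles (Admin >> Editor >> Viewer within the same Organization) can be sketched in a few lines of Python. This is only an illustration of the rule as described here, not NetEye's actual implementation; the function name and the "ACME" organization are hypothetical.

```python
# Higher rank means more permissive, following Admin >> Editor >> Viewer.
ROLE_RANK = {"Viewer": 0, "Editor": 1, "Admin": 2}


def effective_roles(assignments):
    """Map each Organization to the most permissive Organization Role.

    `assignments` is an iterable of (organization, organization_role) pairs,
    one pair per NetEye Role the user belongs to.
    """
    result = {}
    for organization, role in assignments:
        current = result.get(organization)
        if current is None or ROLE_RANK[role] > ROLE_RANK[current]:
            result[organization] = role
    return result


print(effective_roles([
    ("Main Org.", "Viewer"),
    ("Main Org.", "Editor"),
    ("ACME", "Viewer"),  # hypothetical second Organization
]))
```

In this example the user ends up as Editor in "Main Org." and as Viewer in the second Organization.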

Performance Graph¶

To show the Performance Graph in the status page for each monitored object, a separate permission is required, but it is not necessary to set it to a specific Organization.

Configuration Form¶

The Analytics module adds the following fields for each role:

Organization: The name of one Grafana organization. This setting also requires a role to be set. If the organization does not exist in Grafana, then nothing will happen.

Role: Either the Viewer, Editor, or Admin role that will be granted on the specified Organization.

Teams: A comma-separated list of teams which must exist in the specified organization.

analytics/view-performance-graph: Enabling this option allows each user to see the Performance Graph for every monitoring object. However, this has no effect on a user’s access rights inside Grafana: they will merely be able to navigate the Performance Dashboard from the monitoring view. In order to see the Graph correctly, a user should also have at least general access to the Grafana module with grafana/graph. For examples of how to correctly configure host and service graphs, please refer to the Icingaweb2 Module Grafana doc.

Visualizing Dashboards¶

We have fully integrated Grafana dashboards into NetEye, accessible by selecting ITOA in the left sidebar menu.

Adding a Dashlet¶

You can create a widget containing your ITOA Dashboard and add it to the NetEye home page by following the user guide in Using NetEye’s dashlets. Before copying the Grafana URL from the browser, you can switch to your preferred Grafana visualization mode by clicking on the cycle-view icon:

The first click will remove the left menu

The second click will also remove the top bar

Global search integration¶

You can search within your dashboards simply by using Lampo, or the NetEye global search box at the top of the screen.

The search results will be shown in a table in the main NetEye dashboard. You can open the resulting dashboard in a new tab.

Configuration¶

Telegraf Configuration¶

A default configuration file for a Telegraf agent can be generated by executing:

telegraf config > <telegraf_configuration_directory>/${INSTANCE}.conf

The configuration file <telegraf_configuration_directory>/${INSTANCE}.conf contains a complete list of configuration options. InfluxDB as output and cpu, disk, diskio, kernel, mem, processes, and system as inputs are enabled by default. Before starting the Telegraf agent, edit the initial configuration to specify your inputs (where the metrics come from) and outputs (where the metrics go). Please refer to the official documentation on how to configure Telegraf for your specific use case.

Note

Please note that the configuration path may change with your specific installation version and operating system. In NetEye <telegraf_configuration_directory> is located in /neteye/local/telegraf/conf/.

Warning

Files under path /neteye/local/telegraf/conf/neteye_* are NetEye configuration and must not be modified by the user. If you need to add configurations to these Telegraf instances, you can add them in their respective .d drop-in folders. For example, to add configurations to /neteye/local/telegraf/conf/neteye_consumer_influxdb_telegraf_master.conf you can add a file inside /neteye/local/telegraf/conf/neteye_consumer_influxdb_telegraf_master.d/.

Running a Local Telegraf Instance in NetEye¶

To run a Telegraf instance in NetEye, the user must create a dedicated configuration file, i.e. $INSTANCE.conf, in the directory /neteye/local/telegraf/conf/ as already described in the Telegraf Configuration section, and start the service using the command below:

neteye# systemctl start telegraf-local@${INSTANCE}

The telegraf-local service will load the configuration file named $INSTANCE.conf, e.g. telegraf-local@test.service will look for the configuration file /neteye/local/telegraf/conf/test.conf.
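The naming convention between a telegraf-local unit and its configuration file can be expressed as a small Python helper, which may be handy in provisioning scripts. This is a sketch based only on the convention described above; the helper names are hypothetical and nothing here talks to systemd.

```python
from pathlib import PurePosixPath

# Directory given in this section for NetEye installations.
CONF_DIR = PurePosixPath("/neteye/local/telegraf/conf")


def unit_name(instance: str) -> str:
    """Name of the systemd unit for a given instance name."""
    return f"telegraf-local@{instance}.service"


def config_path(instance: str) -> str:
    """Configuration file that the unit for `instance` is expected to load."""
    return str(CONF_DIR / f"{instance}.conf")


def instance_from_unit(unit: str) -> str:
    """Extract the instance name from a telegraf-local unit name."""
    prefix, suffix = "telegraf-local@", ".service"
    if not (unit.startswith(prefix) and unit.endswith(suffix)):
        raise ValueError(f"not a telegraf-local unit: {unit!r}")
    return unit[len(prefix):-len(suffix)]


print(unit_name("test"), "->", config_path("test"))
```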

Note

Please note that all installations of NetEye use service telegraf-local instead of a standard telegraf, which is enhanced with NetEye-specific functions that guarantee flawless interaction with Telegraf. Moreover, on a NetEye Master, a service based on telegraf-local takes care of collecting its default metrics.

Telegraf logs are collected by the journald agent and can be viewed by using journalctl.

For example, to inspect the log of the Telegraf instance called telegraf-local@${INSTANCE}, the user can type:

neteye# journalctl -u telegraf-local@${INSTANCE} -f

However, the Telegraf instance can be configured to write the logs to a specific file. This can be set in the configuration file as follows:

logfile = "/neteye/local/telegraf/log/${INSTANCE}.log"

Debugging output can be enabled by setting the debug flag to true in the configuration file:

debug = true

Note

This is a local service, not a clusterized service, hence it runs only on the node where you started it.

InfluxDB-only Nodes¶

Warning

The support of InfluxDB-only nodes is currently experimental. They are designed to offload traffic from clusters that process an extraordinarily high volume of metrics. If you think that this could be beneficial for you, please get in touch with your consultant. The feature will become publicly available in the upcoming releases.

Starting from NetEye 4.27, it is possible to use, in cluster installations, an InfluxDB-only node as target instance for the data collected by the Telegraf consumer of a NetEye Tenant.

To use an InfluxDB-only node, you have to create an entry of type InfluxDBOnlyNodes in the file /etc/neteye-cluster, as in the following example:

{

"Hostname" : "my-neteye-cluster.example.com",

"Nodes" : [

{

"addr" : "192.168.47.1",

"hostname" : "my-neteye-01",

"hostname_ext" : "my-neteye-01.example.com",

"id" : 1

},

{

"addr" : "192.168.47.2",

"hostname" : "my-neteye-02",

"hostname_ext" : "my-neteye-02.example.com",

"id" : 2

},

{

"addr" : "192.168.47.3",

"hostname" : "my-neteye-03",

"hostname_ext" : "my-neteye-03.example.com",

"id" : 3

},

{

"addr" : "192.168.47.4",

"hostname" : "my-neteye-04",

"hostname_ext" : "my-neteye-04.example.com",

"id" : 4

}

],

"ElasticOnlyNodes": [

{

"addr" : "192.168.47.5",

"hostname" : "my-neteye-05",

"hostname_ext" : "my-neteye-05.example.com",

"id" : 5

}

],

"VotingOnlyNode" : {

"addr" : "192.168.47.6",

"hostname" : "my-neteye-06",

"hostname_ext" : "my-neteye-06.example.com",

"id" : 6

},

"InfluxDBOnlyNodes": [

{

"addr" : "192.168.47.7",

"hostname" : "my-neteye-07",

"hostname_ext" : "my-neteye-07.example.com"

}

]

}

By default, the parameters used to connect to the InfluxDB instance are the following:

Port: 8086

InfluxDB administrator user: root

In case you need to use different parameters because, for example, the name of the InfluxDB administrator user is admin instead of root, it is possible to specify them in the node definition, as follows:

{

"InfluxDBOnlyNodes":

[

{

"addr" : "192.168.47.7",

"hostname" : "my-neteye-07",

"hostname_ext" : "my-neteye-07.example.com",

"influxdb_connection": {

"port": 8085,

"admin_username": "admin"

}

}

]

}
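To make the interplay between the default connection parameters and the optional influxdb_connection block concrete, here is a minimal Python sketch that resolves the effective port and administrator user for each InfluxDB-only node. It only mirrors the defaults stated above (port 8086, user root) and is not how NetEye itself reads the file; the function name and the second node, my-neteye-08, are hypothetical.

```python
import json

# Defaults stated in this section.
DEFAULT_CONNECTION = {"port": 8086, "admin_username": "root"}


def influxdb_only_nodes(cluster_config):
    """Return one dict per InfluxDB-only node with its effective parameters."""
    resolved = []
    for node in cluster_config.get("InfluxDBOnlyNodes", []):
        # Values in influxdb_connection override the defaults key by key.
        connection = {**DEFAULT_CONNECTION, **node.get("influxdb_connection", {})}
        resolved.append({"hostname_ext": node["hostname_ext"], **connection})
    return resolved


# Inline sample instead of reading /etc/neteye-cluster, so the sketch runs anywhere.
cluster_config = json.loads("""
{
  "InfluxDBOnlyNodes": [
    {"addr": "192.168.47.7", "hostname": "my-neteye-07",
     "hostname_ext": "my-neteye-07.example.com"},
    {"addr": "192.168.47.8", "hostname": "my-neteye-08",
     "hostname_ext": "my-neteye-08.example.com",
     "influxdb_connection": {"port": 8085, "admin_username": "admin"}}
  ]
}
""")

for node in influxdb_only_nodes(cluster_config):
    print(node)
```

The first node falls back to both defaults, while the second one overrides both.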

After the node has been added to the cluster configuration, please ensure this is synchronized to all Cluster Nodes by executing the neteye config cluster sync command.

Subsequently, you need to add the password of the InfluxDB administrator user to the file /root/.pwd_influxdb_<username>_<hostname> and adjust its permissions to ensure it is readable only by the root system user. For example, if the default root InfluxDB user is used to connect to the external InfluxDB instance defined above, the commands to be executed are the following:

cluster-node-1# echo "password" > /root/.pwd_influxdb_root_neteye04.neteyelocal

cluster-node-1# chmod 640 /root/.pwd_influxdb_root_neteye04.neteyelocal

cluster-node-1# chown root:root /root/.pwd_influxdb_root_neteye04.neteyelocal
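If you prefer to script this step, the following Python sketch builds the file name from the user name and host name and writes the secret with restrictive permissions. It is an illustration under assumptions: the helper names are hypothetical, the sketch uses mode 600 (stricter than the 640 shown in the commands above), and it writes into a temporary directory rather than /root so that it can be run safely anywhere.

```python
import os
import stat
import tempfile


def password_file_name(username: str, hostname: str) -> str:
    """File name following the .pwd_influxdb_<username>_<hostname> pattern."""
    return f".pwd_influxdb_{username}_{hostname}"


def write_password_file(directory: str, username: str, hostname: str, password: str) -> str:
    """Write the password to the expected file, readable by its owner only."""
    path = os.path.join(directory, password_file_name(username, hostname))
    # Create the file with mode 600 from the start to avoid a window where
    # it is readable by others.
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(password + "\n")
    os.chmod(path, 0o600)  # enforce the mode even if the file already existed
    return path


with tempfile.TemporaryDirectory() as demo_dir:
    demo_path = write_password_file(demo_dir, "root", "neteye04.neteyelocal", "password")
    print(os.path.basename(demo_path), oct(stat.S_IMODE(os.stat(demo_path).st_mode)))
```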

Afterwards, it is possible to create a NetEye Tenant that uses the added node as InfluxDB target, by following the Configuration of Tenants procedure.

Write Data to InfluxDB¶

Starting with NetEye 4.19, InfluxDB is protected with username and password authentication. Hence, to send data to InfluxDB you must create a dedicated user with limited privileges to be used in the Telegraf configuration.

For example, to create a write-only user on the database icinga2, you can run the following statements:

CREATE USER "myuser" WITH PASSWORD 'securepassword'

GRANT WRITE ON "icinga2" TO "myuser"

Hint

The default InfluxDB administrator username is root, and the password can be found in the file /root/.pwd_influxdb_root.

To write data to InfluxDB you must configure the dedicated output section in the Telegraf configuration to use an SSL connection and Basic Authentication:

[[outputs.influxdb]]

urls = ["https://influxdb.neteyelocal:8086"]

username = "myuser"

password = "securepassword"

Custom Retention Policies¶

By default, the Retention Policy of InfluxDB is set to 550 days for all Telegraf databases, which include telegraf_master as well as a database for each tenant, following the procedure and naming conventions explained in Multi Tenancy configuration. You can change it under . To apply the new settings, run neteye install.

Warning

Keep in mind that modifying the duration of retention policy will retroactively affect shards, which means it will delete data older than the duration you are setting.

The Telegraf Agent can define its own Retention Policy. This may be useful when, for example, the Telegraf Agent is producing some metrics that either occupy a lot of disk space or need to be kept for a long time due to their importance.

Suppose that you want some Telegraf metrics to be stored on InfluxDB in the database my_tenant with the Retention Policy named six_months. In this case you should proceed as follows:

Ensure that the six_months Retention Policy is present in InfluxDB for the database my_tenant

Identify the Telegraf Agent(s) producing the metrics and log in to the host where the Agent is running

Modify the configuration of the Telegraf Agent(s) by adding the tag retention_policy with value six_months to the metrics. For example you can add the tag in the global_tags of the Telegraf Agent:

[global_tags]

retention_policy = "six_months"

Restart the Telegraf Agent

Once this procedure has been performed, the metrics gathered from the Telegraf Agent will be automatically written with the InfluxDB Retention Policy specified by the Agent’s retention_policy tag. Indeed, this procedure works because the Telegraf consumers that run on the NetEye Master are pre-configured by NetEye in such a way that they write in InfluxDB using the Retention Policy they receive inside the retention_policy tag.
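Conceptually, the consumer side only has to look at the retention_policy tag of each incoming metric and write it with the matching policy. The Python sketch below illustrates that routing idea; it is not the code of the NetEye Telegraf consumers, and the function name and the metric dictionaries are invented for the example. Metrics without the tag are grouped under None, standing for the database's default Retention Policy.

```python
from collections import defaultdict


def group_by_retention_policy(metrics, tag_key="retention_policy"):
    """Group metric names by the Retention Policy named in their tags."""
    batches = defaultdict(list)
    for metric in metrics:
        # A missing tag yields None, meaning "use the default policy".
        policy = metric.get("tags", {}).get(tag_key)
        batches[policy].append(metric["name"])
    return dict(batches)


incoming = [
    {"name": "cpu", "tags": {"host": "web01", "retention_policy": "six_months"}},
    {"name": "disk", "tags": {"host": "web01", "retention_policy": "six_months"}},
    {"name": "mem", "tags": {"host": "db01"}},
]
print(group_by_retention_policy(incoming))
```

Because the tag is set in global_tags on the agent, every metric from that agent carries it and therefore ends up in the same group.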

Telegraf on monitored hosts¶

On NetEye, Telegraf is installed by default as part of NetEye core. For hosts that don’t have NetEye installed, we provide packages for the most common systems. The version of the Telegraf on the host should always match the version of NetEye it communicates with. It can be pulled from the corresponding repository.

Installing Telegraf on CentOS / Fedora / RedHat

The package for CentOS, Fedora, and RHEL on an x86_64 architecture can be installed directly from the NetEye repository by adding the file /etc/yum.repos.d/neteye-telegraf.repo with the following content:

[neteye-telegraf]

name=NetEye Telegraf Packages

baseurl=https://repo.wuerth-phoenix.com/centos/x86_64/neteye-4.38-telegraf/

gpgcheck=0

enabled=1

priority=1

Then, install Telegraf with the command dnf install telegraf.

Installing Telegraf on Debian / Ubuntu

To add the Telegraf repository on Ubuntu or Debian systems, create the file neteye-telegraf.list in the directory /etc/apt/sources.list.d/ with the following content:

deb [trusted=yes] https://repo.wuerth-phoenix.com/debian/x86_64/neteye-4.38-telegraf/ stable main

Run apt update to update the repository data and apt install telegraf to install Telegraf.

Note

In order to verify the repository signature, the ca-certificates package must be installed on the target machine. This can be achieved with the command apt install ca-certificates

Installing Telegraf on Windows

The files for Windows are hosted on https://repo.wuerth-phoenix.com/windows/x86_64/neteye-4.38-telegraf/ as a zip file containing the executable and a configuration file.

Installing Telegraf on Linux

For all other Linux distributions that run on x86_64, we provide a .tar.gz archive to install. It can be found at https://repo.wuerth-phoenix.com/linux-generic/x86_64/neteye-4.38-telegraf/.

Installing Telegraf on other Platforms

If you need to install Telegraf on MacOS or on an architecture other than x86_64, please refer to the official Telegraf download page under section Telegraf open source data collector.

Send metrics directly to Master¶

NetEye provides a NATS user and its certificates to connect external or internal Telegraf instances directly to the NATS master instance.

The NATS user for this purpose is telegraf_wo. It can only publish on the subject telegraf.> and cannot subscribe to any subject.

The related certificates are located in

/neteye/local/telegraf/conf/certs/telegraf_wo.crt.pem

/neteye/local/telegraf/conf/certs/root-ca.crt

/neteye/local/telegraf/conf/certs/private/telegraf_wo.key.pem

The NATS server will take care of adding the prefix master. to all messages sent by this user.

All data sent with this user are automatically collected and written to InfluxDB by a local Telegraf instance using the NATS user telegraf_ro. This user, as opposed to the user used to send the data, can subscribe to the subject master.telegraf.> but cannot publish any message.
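The interplay between the publish permission, the master. prefix, and the subscription can be traced with a short Python sketch of NATS subject matching. In NATS, subjects are dot-separated tokens, * matches exactly one token, and a trailing > matches one or more tokens. The code below is a stand-alone illustration, not part of NATS or NetEye, and the function names are hypothetical.

```python
def subject_matches(pattern: str, subject: str) -> bool:
    """Check a NATS-style subject against a pattern with '*' and '>' wildcards."""
    pattern_tokens = pattern.split(".")
    subject_tokens = subject.split(".")
    for index, token in enumerate(pattern_tokens):
        if token == ">":
            # '>' is only valid as the last token and needs at least one
            # remaining subject token to match.
            return index == len(pattern_tokens) - 1 and len(subject_tokens) > index
        if index >= len(subject_tokens):
            return False
        if token != "*" and token != subject_tokens[index]:
            return False
    return len(pattern_tokens) == len(subject_tokens)


def add_master_prefix(subject: str) -> str:
    """Mimic the prefix that the NATS server adds for the telegraf_wo user."""
    return "master." + subject


published = "telegraf.metrics"
print(subject_matches("telegraf.>", published))            # telegraf_wo may publish
delivered = add_master_prefix(published)
print(delivered, subject_matches("master.telegraf.>", delivered))  # telegraf_ro receives it
```

A message published on telegraf.metrics is therefore within the publish permission of telegraf_wo, and after the prefix is added it falls within the subscription of telegraf_ro.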

To set up a Telegraf agent, please follow the official Telegraf documentation.

After setting up a new Telegraf instance, the output section of the configuration file needs to be edited to make it look like the following:

[[outputs.nats]]

## URLs of NATS servers

servers = ["nats://<nats_master_fqdn>:4222"]

## NATS subject for producer messages

subject = "telegraf.metrics"

## Use Transport Layer Security

secure = true

tls_ca = "<telegraf_certs_directory>/root-ca.crt"

tls_cert = "<telegraf_certs_directory>/telegraf_wo.crt.pem"

tls_key = "<telegraf_certs_directory>/private/telegraf_wo.key.pem"

data_format = "influx"

Note

If Telegraf runs on an agent, remember to copy the certificates from the master to the agent machine.

Configuration in Multitenant environments

NetEye provides a NATS user and its certificates also for each Tenant in multitenant environments. By using a specific Tenant’s user to send the metrics to the NATS master instance, the complete separation of the data between Tenants is ensured. The following configuration example shows how to use the specific ACME Tenant’s NATS user.

Note

The certificate files are located in NetEye in /root/security/nats-client/<tenant-name>/certs/ and in /root/security/ca/root-ca.crt. They need to be copied to the host where Telegraf runs.

[[outputs.nats]]

## URLs of NATS servers

servers = ["nats://<nats_master_fqdn>:4222"]

## NATS subject for producer messages

subject = "telegraf.metrics"

## Use Transport Layer Security

secure = true

tls_ca = "<telegraf_certs_directory>/root-ca.crt"

tls_cert = "<telegraf_certs_directory>/acme-telegraf_wo.crt.pem"

tls_key = "<telegraf_certs_directory>/private/acme-telegraf_wo.key.pem"

data_format = "influx"

Send metrics through Satellite¶

Note

The Satellite must be reachable by Telegraf using the Satellite FQDN

In order to connect external Telegraf instances to the NATS master through a Satellite, NetEye provides a set of certificates. These certificates are located in

/neteye/local/telegraf/conf/certs/telegraf-agent.crt.pem

/neteye/local/telegraf/conf/certs/root-ca.crt

/neteye/local/telegraf/conf/certs/private/telegraf-agent.key.pem

and must be copied to the machine you want to configure Telegraf on. Configure file ownership and/or permissions in order to make the certificates and the key readable by Telegraf.

Edit the output section of the configuration file of the Telegraf agent to make it look like the following:

[[outputs.nats]]

## URLs of NATS servers

servers = ["nats://<satellite_fqdn>:4222"]

## NATS subject for producer messages

subject = "telegraf.metrics"

## Use Transport Layer Security

secure = true

## Optional TLS Config

tls_ca = "<telegraf_certs_directory>/root-ca.crt"

tls_cert = "<telegraf_certs_directory>/telegraf-agent.crt.pem"

tls_key = "<telegraf_certs_directory>/private/telegraf-agent.key.pem"

data_format = "influx"

Warning

Adapt the configuration to your actual paths and Satellite FQDN. It is mandatory, however, that the subject is telegraf.metrics; otherwise you may experience data loss.

Telegraf Configuration Migration to Local Service¶

In this chapter we are going to explain how to migrate a Telegraf consumer/collector configuration to be compliant with the supported NetEye 4 configurations introduced in NetEye 4.19.

Note

This procedure is valid for Telegraf collectors or consumers that run in NetEye installations, either single instances or clusters, thus it can be skipped for external Telegraf agents. To configure an external Telegraf agent, please refer to section Send metrics through Satellite.

To migrate your Telegraf to local service you have to:

Navigate to the Telegraf configuration folder:

neteye# cd /neteye/shared/telegraf/

Backup the running configuration folder:

neteye# cp -a conf conf.backup

Now you can perform the upgrade from NetEye 4.18 to NetEye 4.19

Stop and disable all running telegraf instances e.g. systemctl stop telegraf@myconf

Move your Telegraf configurations from /neteye/shared/telegraf/conf.backup to /neteye/local/telegraf/conf

Start a new telegraf-local instance for each configuration, e.g. systemctl start telegraf-local@myconf
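When many instances have to be migrated, it can help to generate the post-upgrade commands instead of typing them one by one. The following Python sketch only prints the commands and executes nothing. It rests on assumptions that should be checked against your installation: that each old instance name corresponds to a file named <instance>.conf in the backup folder, and that the helper name and the sample instance names are purely illustrative.

```python
import shlex

OLD_DIR = "/neteye/shared/telegraf/conf.backup"
NEW_DIR = "/neteye/local/telegraf/conf"


def migration_commands(instances):
    """Return the shell commands for the steps after the upgrade, in order."""
    commands = []
    # 1. Stop and disable every old telegraf@ instance.
    for name in instances:
        unit = shlex.quote(f"telegraf@{name}")
        commands.append(f"systemctl stop {unit}")
        commands.append(f"systemctl disable {unit}")
    # 2. Move each configuration file to the local configuration folder
    #    (assumes the file is called <instance>.conf).
    for name in instances:
        source = shlex.quote(f"{OLD_DIR}/{name}.conf")
        commands.append(f"mv {source} {NEW_DIR}/")
    # 3. Start the corresponding telegraf-local@ instances.
    for name in instances:
        unit = shlex.quote(f"telegraf-local@{name}")
        commands.append(f"systemctl start {unit}")
    return commands


for command in migration_commands(["myconf", "otherconf"]):
    print(command)
```

Reviewing the printed list before running anything keeps the procedure under manual control.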

Customizing Performance Graph¶

If the default Performance Graph for a check command is not suitable for your needs, you can adapt it by providing your own dashboard.

First of all, create the desired ITOA dashboard in the Main Org.

Note

You are advised to not modify the preconfigured dashboards in the neteye-performance-graphs folder, as they will be overwritten at the next neteye install execution.

Finally you have to update the mapping from check command to dashboard in the section.

If you want to change an existing graph, you must update the Dashboard UID field using the UID of the previously created dashboard.

To add a new graph please refer to Icingaweb2 Module Grafana doc

Advanced: Automating Creation of Custom Performance Graphs¶

If you need to automate the creation of custom performance graphs, you can do so by using a preconfigured JSON file in the following way:

Create an ITOA dashboard with the desired panel

Name the dashboard the same as the check command

Export the dashboard in JSON format by clicking

Save the JSON as /usr/share/grafana/public/dashboards/neteye-performance-graphs/neteye/<check-command>.json

At this point, a couple of changes to the JSON are needed:

Remove the uid field at the root level

Set the id field at the root level to null

Set the datasource field of the panel to null

To customize any option of the performance graph, add an ini file at /usr/share/grafana/public/dashboards/neteye-performance-graphs/neteye/<check-command>.ini.

The ini file must have the same name as the check command

Create a section with the same name as the check command

Add the options you want to customize in the section as key-value pairs

Example:

/usr/share/grafana/public/dashboards/neteye-performance-graphs/neteye/my_check_command.ini

[my_check_command]

panelId = "1,2"

customVars = "&os=$os$"

Run the neteye install command to apply the changes

# neteye install
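The JSON changes and the ini file described in this procedure lend themselves to scripting. The Python sketch below applies the three JSON edits to an exported dashboard and renders an ini file for a check command. It is a sketch under assumptions: it expects the panels in a root-level "panels" list and resets the datasource of every panel, the function names are hypothetical, and the sample dashboard is a made-up minimal export. Writing the results to the target folder is left out on purpose.

```python
import configparser
import copy
import io
import json


def prepare_dashboard(exported):
    """Apply the JSON changes listed above to a copy of the exported dashboard."""
    dashboard = copy.deepcopy(exported)
    dashboard.pop("uid", None)   # remove the uid field at the root level
    dashboard["id"] = None       # serialized as JSON null
    for panel in dashboard.get("panels", []):
        panel["datasource"] = None
    return dashboard


def render_ini(check_command, options):
    """Render an ini file with one section named after the check command."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str     # keep the case of keys such as panelId
    parser[check_command] = options
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


exported = {
    "id": 42,
    "uid": "abc123",
    "title": "my_check_command",
    "panels": [{"id": 1, "datasource": "InfluxDB"}],
}
print(json.dumps(prepare_dashboard(exported), indent=2))
print(render_ini("my_check_command", {"panelId": '"1,2"', "customVars": '"&os=$os$"'}))
```

The rendered ini text reproduces the example shown above, with the quotes kept as part of the values.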