Architecture

This section introduces the ways NetEye can be installed: as a Single Node, which is the most basic setup, or as a Cluster.

A cluster provides redundancy as well as dedicated communication and management channels among its nodes. Within a cluster, dedicated Elasticsearch nodes and Voting-only nodes can also be configured (see Section Cluster Nodes for more information). Satellite nodes can additionally complement a Cluster setup.

Single Node

NetEye can run in a Single Node Architecture, that is, as a self-contained server. This setup is ideal for small environments and infrastructures with limited resource requirements: you only need to install NetEye, carry out the initial configuration, and then start working with it by defining hosts, services, and so on.

On NetEye Single Node installations, NetEye services are managed by systemd, as described in the next section.

However, for large infrastructures with hundreds of hosts and services, where many more of NetEye’s functionalities are required, a clustered NetEye installation is more effective.

NetEye Systemd Targets

A single systemd target is responsible for starting and stopping the services that belong to NetEye. Systemd targets are special systemd units that provide no service by themselves; they are used both to group sub-services and to serve as a reference point for other systemd services and targets.

In NetEye, all systemd services that depend on one of the several neteye-[...].target units are connected so that whenever the target is started or stopped, each dependent service is also started or stopped.
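For a unit of your own, one standard systemd way to obtain this start/stop propagation is a drop-in with PartOf= (stopping or restarting the target is propagated to the service) combined with a Wants= dependency added to the target via systemctl add-wants (starting the target also starts the service). This is a minimal sketch under that assumption: NetEye's own units may be wired differently, and the service name my-agent.service, the function name, the drop-in file name, and the SYSTEMCTL and UNIT_DIR overrides are all hypothetical.

```shell
# Sketch only: bind a (hypothetical) service to a NetEye systemd target.
# NetEye's own units may be connected differently.

# Overridable so the logic can be tried without touching the real system.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"
UNIT_DIR="${UNIT_DIR:-/etc/systemd/system}"   # only affects where the drop-in is written

bind_service_to_target() {
  local svc="$1" target="$2"
  local dropin_dir="$UNIT_DIR/$svc.d"

  mkdir -p "$dropin_dir"
  # PartOf=: stopping or restarting the target also stops/restarts this service.
  printf '[Unit]\nPartOf=%s\n' "$target" > "$dropin_dir/50-neteye-target.conf"

  # Make systemd re-read unit files so the new drop-in is picked up.
  "$SYSTEMCTL" daemon-reload
  # Wants=: starting the target also starts the service (creates a .wants/ symlink).
  "$SYSTEMCTL" add-wants "$target" "$svc"
}

# Usage:
#   bind_service_to_target my-agent.service neteye.target
```

Using PartOf= rather than Requires= keeps the dependency one-directional: a failure of the custom service does not pull down the whole target.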

Note

Even though more than one neteye-[...].target exists, the NetEye autosetup scripts enable only one of them, based on the contents of /etc/neteye-cluster.

To find out which NetEye systemd target is currently active, run:

```
# systemctl list-units "neteye*.target"
UNIT                        LOAD   ACTIVE SUB    DESCRIPTION
neteye-cluster-local.target loaded active active NetEye Cluster Local Services Target
```
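If a script needs the name of the active target (for example, to decide which target to restart), the same output can be filtered with awk. A minimal sketch: the function name is hypothetical, and it assumes the column layout shown above, where the third column is the ACTIVE state. Passing --no-legend to systemctl suppresses the header and footer lines.

```shell
# Print the NetEye targets whose ACTIVE column reads "active".
# Expects `systemctl list-units` rows on stdin: UNIT LOAD ACTIVE SUB DESCRIPTION...
active_neteye_targets() {
  awk '$1 ~ /^neteye.*\.target$/ && $3 == "active" { print $1 }'
}

# Usage:
#   systemctl list-units --no-legend "neteye*.target" | active_neteye_targets
```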

For single node installations, this systemd target is called neteye.target. You can verify which services are bound to the target with the systemctl list-dependencies command, which works even if the target is not currently enabled:

```
# systemctl list-dependencies neteye.target
neteye.target
● ├─elasticsearch.service
● ├─eventhandlerd.service
● ├─grafana-server.service
● ├─httpd.service
● ├─icinga2-master.service
[...]
```
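To obtain just the service names (for example, to loop over them in a script), the tree glyphs and status bullets can be stripped from this output. A minimal sketch, assuming unit names made only of letters, digits, and the characters @ . _ -; the function name is hypothetical.

```shell
# Read `systemctl list-dependencies` output on stdin and print each bound
# .service unit once, without tree glyphs or status bullets.
bound_services() {
  grep -oE '[[:alnum:]@._-]+\.service' | sort -u
}

# Usage:
#   systemctl list-dependencies neteye.target | bound_services
```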

The commands /usr/sbin/neteye start, /usr/bin/neteye stop, and /usr/bin/neteye status are wrapper scripts around this systemd functionality that expose a more convenient interface.
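The actual neteye script is not reproduced here. As a rough, hypothetical sketch of what such a wrapper does, the function below maps start, stop, and status onto systemctl calls for a given target. The function name, the default target, and the SYSTEMCTL override (used only so the logic can be tried without systemd) are assumptions.

```shell
# Hypothetical sketch of a start/stop/status wrapper around a NetEye target.
# This is NOT the actual neteye implementation.
SYSTEMCTL="${SYSTEMCTL:-systemctl}"

neteye_ctl() {
  local action="${1:-}" target="${2:-neteye.target}"
  case "$action" in
    start|stop)
      # Starting/stopping the target propagates to every bound service.
      "$SYSTEMCTL" "$action" "$target"
      ;;
    status)
      # List the services bound to the target.
      "$SYSTEMCTL" list-dependencies "$target"
      ;;
    *)
      echo "usage: neteye_ctl {start|stop|status} [target]" >&2
      return 2
      ;;
  esac
}

# Usage:
#   neteye_ctl stop neteye-cluster-local.target
```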

Cluster

NetEye 4’s clustering service is based on the Red Hat 7 High Availability Clustering technologies:

Corosync: Provides group communication between a set of nodes, application restart upon failure, and a quorum system.

Pacemaker: Provides cluster management, lock management, and fencing.

DRBD: Provides data redundancy by mirroring devices (hard drives, partitions, logical volumes, etc.) between hosts in real time.

“Local” NetEye services, which run simultaneously on each NetEye node (i.e., services not managed by Pacemaker and Corosync), are managed by a dedicated systemd target unit called neteye-cluster-local.target. This reduced set of local services is managed exactly like the Single Node neteye.target:

```
# systemctl list-dependencies neteye-cluster-local.target
neteye-cluster-local.target
● └─drbd.service
● └─elasticsearch.service
● └─icinga2.service
[...]
```

If you have not yet installed clustering services, please turn to the Cluster Installation page for setup instructions.

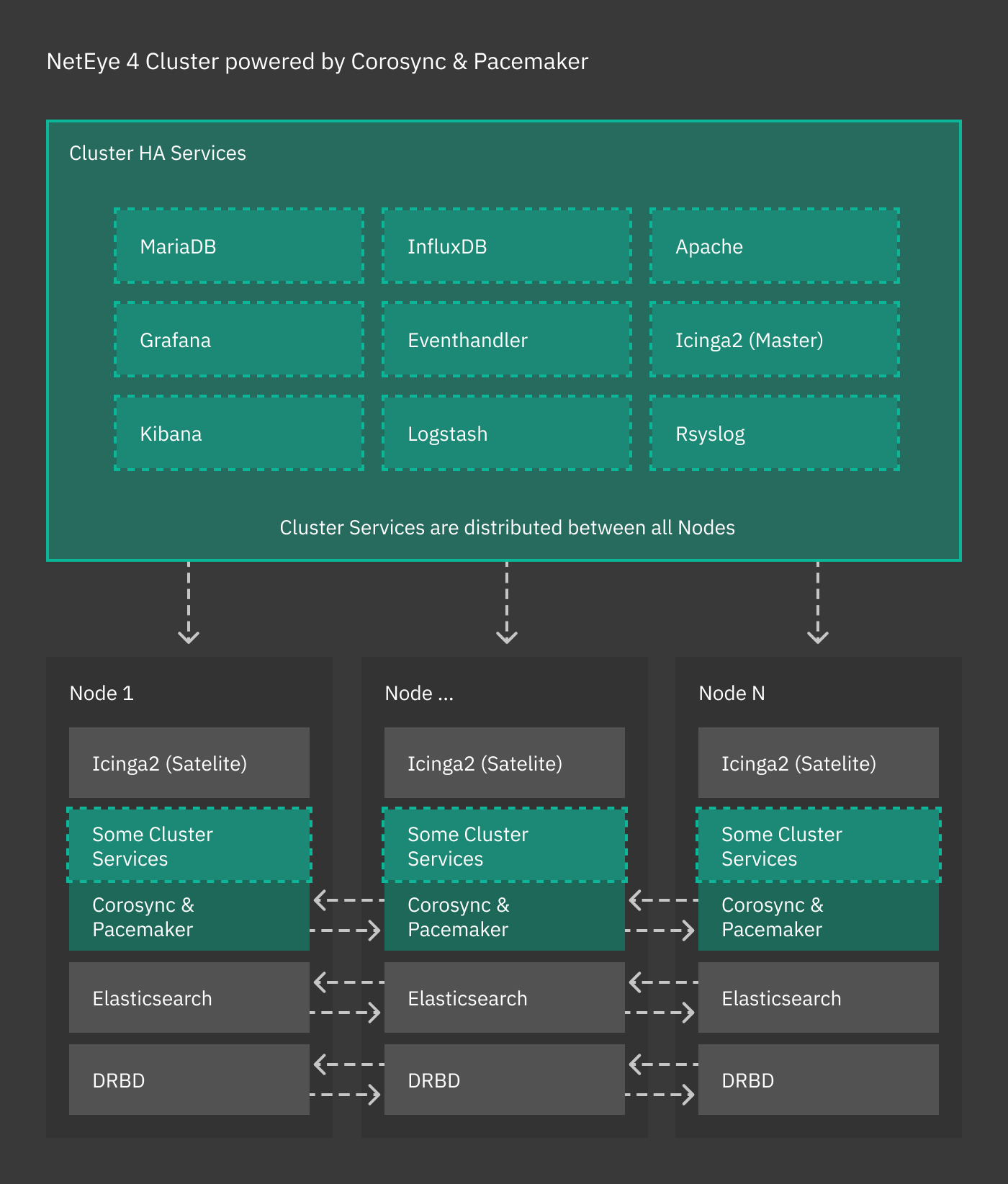

Cluster resources are typically quartets consisting of an internal floating IP, a DRBD device, a filesystem, and a (systemd) service. Fig. 1 shows the general case for High Availability, where cluster services are distributed across nodes, while other services (Icinga 2, Elasticsearch, and DRBD) handle their own clustering requirements.

Fig. 1 The NetEye cluster architecture.

Special cases

The following services use their own native clustering capabilities rather than Red Hat HA Clustering. NetEye also takes advantage of their built-in load balancing capabilities.

Icinga 2 Cluster: N+1 Icinga 2 instances are created, where N is the number of cluster nodes:

N Icinga 2 satellites (1 per cluster node) for check execution

1 Icinga 2 master node for satellite configuration, notifications, an IDO connection, etc.

Elasticsearch: Each cluster node runs a local master-eligible Elasticsearch service, connected to all other nodes. Elasticsearch itself chooses which nodes can form a quorum (note that all NetEye cluster nodes are master-eligible by default), so manual quorum setup is no longer required.
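Both Corosync and Elasticsearch base their decisions on a majority quorum: more than half of the voting nodes must agree. The arithmetic below illustrates the general majority rule only (Elasticsearch manages its own voting configuration automatically, as described above); the function names are hypothetical. It shows why an odd number of voting nodes, or an additional Voting-only node, is useful: with n voting nodes the quorum is floor(n/2)+1.

```shell
# Majority quorum: more than half of the n voting nodes must agree.
quorum_size() {
  local n="$1"
  echo $(( n / 2 + 1 ))
}

# How many voting nodes can fail while the remaining ones still form a quorum.
tolerated_failures() {
  local n="$1"
  echo $(( n - (n / 2 + 1) ))
}

# Print a small table for 1 to 5 voting nodes.
for n in 1 2 3 4 5; do
  echo "voting nodes: $n  quorum: $(quorum_size "$n")  tolerated failures: $(tolerated_failures "$n")"
done
```

For example, 3 voting nodes need 2 votes and tolerate one failure, while 4 voting nodes need 3 votes and still tolerate only one failure; a 2-node group tolerates none.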

Manage Cluster Service Resources

To manage service resources, you can use a set of scripts provided with NetEye. These scripts are wrappers around the PCS and DRBD APIs.

Adding a Service Resource to a Cluster

To add a service resource, follow these steps:

Create a services configuration file (Services.conf) containing only the service to add. An example template can be found in /usr/share/doc/neteye-setup-*/doc/templates/, but your derived file does not need to be stored in any particular location.

Run the cluster_service_setup.pl script on your Services.conf file. Since the script is run from its own directory, pass the full path to your file:

```
# cd /usr/share/neteye/scripts/cluster
# ./cluster_service_setup.pl -c /path/to/Services.conf
```
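Because the command first changes into the script directory, a relative path to Services.conf would normally be resolved against /usr/share/neteye/scripts/cluster rather than against the directory you started from. A minimal sketch of a helper that makes the path absolute before calling the script; the function name and the NETEYE_CLUSTER_SCRIPTS override are hypothetical, and only the -c option shown above is used.

```shell
# Hypothetical helper: run cluster_service_setup.pl on a Services.conf stored anywhere.
NETEYE_CLUSTER_SCRIPTS="${NETEYE_CLUSTER_SCRIPTS:-/usr/share/neteye/scripts/cluster}"

add_cluster_service() {
  local conf="$1"
  # Make the path absolute *before* changing directory.
  case "$conf" in
    /*) ;;
    *) conf="$PWD/$conf" ;;
  esac
  if [ ! -r "$conf" ]; then
    echo "cannot read $conf" >&2
    return 1
  fi
  # Run in a subshell so the caller's working directory is left unchanged.
  ( cd "$NETEYE_CLUSTER_SCRIPTS" && ./cluster_service_setup.pl -c "$conf" )
}

# Usage:
#   add_cluster_service ./Services.conf
```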

Clustering FAQ

Parsing errors in Services.conf / ClusterSetup.conf

To help you debug potential JSON errors in these files, you can check them for correctness with the following command, which prints the parsed file or an error message if the JSON is malformed:

```
# jq . "${ConfFile}"
```
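When several configuration files are involved, the same check can be run in a loop that reports every file and returns a non-zero status if any of them is invalid, which is handy before running the setup scripts. A minimal sketch: `jq empty` parses its input and prints nothing on success; if jq is not installed, the sketch falls back to Python's json.tool module (an assumption about what is available on your system). The function names are hypothetical.

```shell
# Parse one file as JSON; the parser writes its error message to stderr on failure.
json_parse_check() {
  if command -v jq >/dev/null 2>&1; then
    jq empty "$1"
  else
    python3 -m json.tool "$1" >/dev/null
  fi
}

# Print OK/INVALID for each file; return 1 if at least one file is invalid.
check_json_confs() {
  local f err rc=0
  for f in "$@"; do
    if err="$(json_parse_check "$f" 2>&1)"; then
      echo "OK: $f"
    else
      printf 'INVALID: %s\n%s\n' "$f" "$err" >&2
      rc=1
    fi
  done
  return "$rc"
}

# Usage:
#   check_json_confs Services.conf ClusterSetup.conf
```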

Secure Intracluster Communication

Security between the nodes in a cluster is just as important as front-facing security. Because nodes in a cluster must trust each other completely to provide failover services and be efficient, the lack of an intracluster security mechanism means one compromised cluster node can read and modify data throughout the cluster.

NetEye uses certificates signed by a Certificate Authority to ensure that only trusted nodes can join the cluster and to encrypt data passing between nodes, so that outside parties cannot tamper with your data. It also allows the certificates of each component in each module to be revoked.
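The underlying trust check can be illustrated with OpenSSL: a node certificate is accepted only if it chains to the cluster CA and, when a revocation list is supplied, is not listed in it. This sketch is illustrative only: it does not use NetEye's own certificate tooling or paths, and the function and file names are hypothetical.

```shell
# Succeed only if the node certificate was issued by the given CA certificate.
# `openssl verify` exits with status 0 when the chain up to the CA is valid.
# An optional third argument is a CRL file; revoked certificates are then rejected.
verify_node_cert() {
  local ca_cert="$1" node_cert="$2" crl="${3:-}"
  if [ -n "$crl" ]; then
    openssl verify -CAfile "$ca_cert" -crl_check -CRLfile "$crl" "$node_cert" >/dev/null 2>&1
  else
    openssl verify -CAfile "$ca_cert" "$node_cert" >/dev/null 2>&1
  fi
}

# Usage (hypothetical file names):
#   if verify_node_cert ca.crt node2.crt; then echo "node2 is trusted"; fi
```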

Two examples of cluster-based modules are:

DRBD, which replicates block devices over the network

The ELK stack, on which NetEye 4 Log Management is based.

Modules that Use Intracluster Security

The Log Manager modules use secure communication:

| Module | Enforcement Mechanism | Component |
|---|---|---|
| Log Manager | X-Pack Security | Elasticsearch |
| | | Logstash |
| | | Kibana |

Satellite

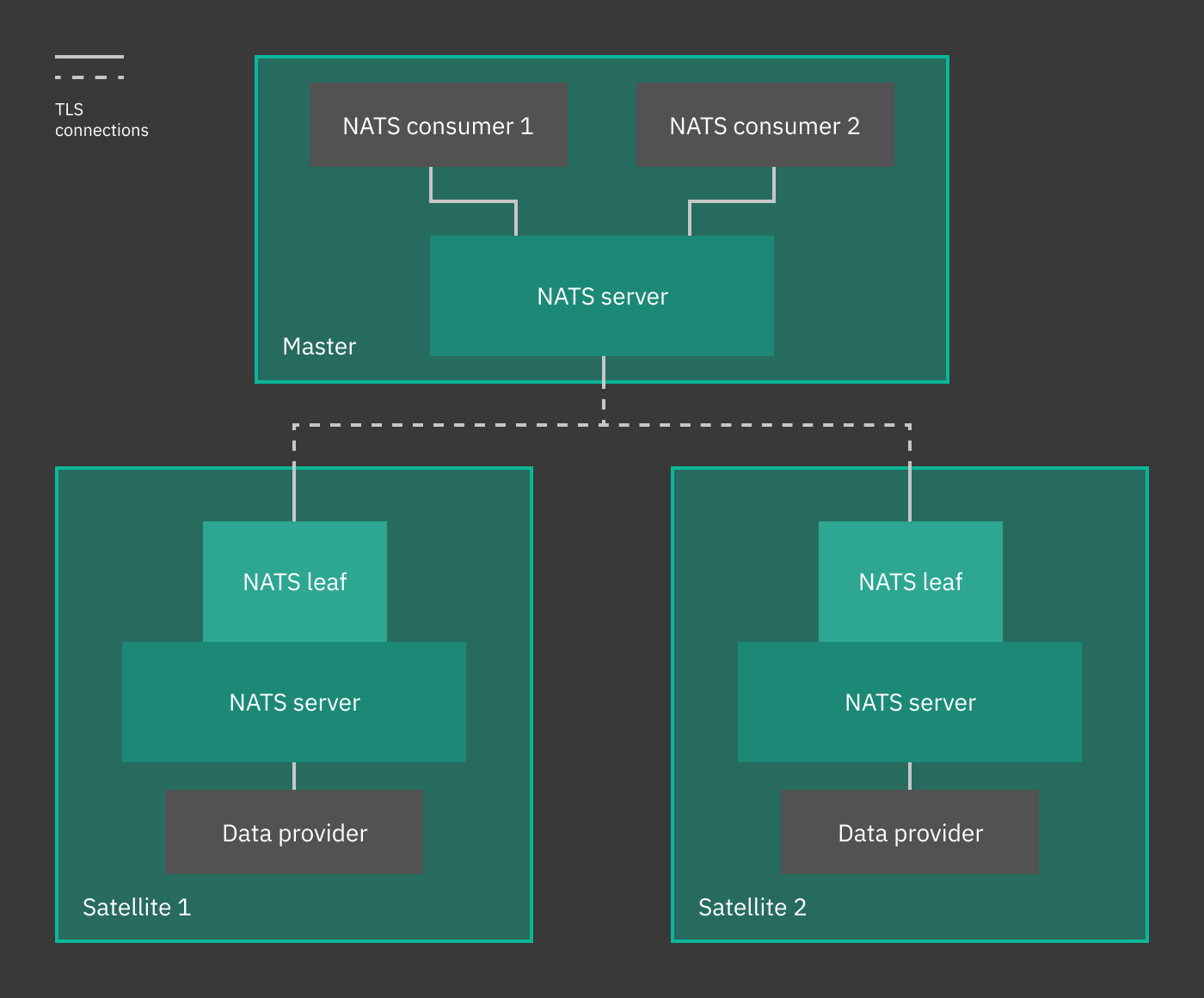

Satellites communicate with other nodes using NATS Server, the default message broker in NetEye. To learn more about NATS, refer to the official NATS documentation.

Exploiting NATS functionalities: Multi Tenancy and NATS Leaf

One interesting feature of NATS Server is its support for secure, TLS-based multi-tenancy through multiple accounts. As described in the NATS Multi Tenancy using Accounts documentation, this makes it possible to create self-contained, isolated communication channels from multiple clients to a single server, which then processes each data stream independently. This ability can be exploited on NetEye clusters from version 4.12 onwards, where the single server is the NetEye master and the clients are the NetEye satellites.
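In NATS Server configuration, each tenant corresponds to an account, and each account has its own users and its own isolated subject namespace. The sketch below generates such an accounts block with one account per satellite. It only illustrates the NATS accounts syntax and is not the configuration NetEye actually ships; the function name, the account and user naming scheme, and the placeholder password are all hypothetical.

```shell
# Hypothetical generator for the `accounts` block of a NATS Server config:
# one isolated account per satellite name given as argument.
# Names are assumed to be simple identifiers such as satellite_a.
nats_accounts_conf() {
  local sat
  echo "accounts: {"
  for sat in "$@"; do
    # Messages published in one account are not visible in the others
    # unless explicit exports/imports are configured.
    printf '  %s: {\n    users: [ { user: "%s", password: "CHANGE_ME" } ]\n  }\n' \
      "$sat" "$sat"
  done
  echo "}"
}

# Usage:
#   nats_accounts_conf satellite_a satellite_b > accounts.conf
```

Replace the placeholder password with real credentials (or another NATS authentication method) before using such a file.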

The architecture is depicted in Fig. 2. Here, we see similar configurations on the NetEye master (left) and on the satellites (right; multiple satellites can be used). On the master, Telegraf consumers process the data that clients send to the NATS server. On each satellite, a Telegraf instance sends data to the local NATS server. There, data can be processed immediately, but they can also be forwarded to the master’s NATS server through a NATS leaf node, which is configured to add authentication and a security layer to the data to prevent third-party interception. On the master, data are stored in InfluxDB and can be accessed by multiple Telegraf consumers that are independent of each other, so that the same stream can be processed multiple times for different purposes.

Fig. 2 Architecture of NATS Server with two satellites.