Monitoring - Detection¶

How to Use the Tornado How Tos¶

We assume that you are using a shell environment rather than the Tornado GUI. If Tornado is not already installed, you can install it as follows (the minimum Tornado version is 0.10.0):

# yum install tornado --enablerepo=neteye-extras

As a preliminary test, make sure that the Tornado service is up:

# neteye status

If you do not see any of the Tornado services in the list, then Tornado is not properly installed or its unit files have not been loaded yet; reload the systemd configuration:

# systemctl daemon-reload

If instead the Tornado services are there, but marked DOWN, you will need to start them.

DOWN [3] tornado.service

DOWN [3] tornado_icinga2_collector.service

DOWN [3] tornado_webhook_collector.service

In either event, you should then start all NetEye services and check that they are running:

# neteye start

# neteye status

Alternatively, you can check the status of the Tornado service by itself:

# systemctl status tornado

... Active: active (running) ...

Finally, run a check on the default Tornado configuration directory. You should see the following output:

# tornado --config-dir=/neteye/shared/tornado/conf check

Check Tornado configuration

The configuration is correct.

How To Use the Email Collector¶

This How To is intended to help you configure, use and test the Email Collector in your existing NetEye Tornado installation. We will configure a new rule that logs the subject lines of all incoming mail, using one file per sender address.

Before continuing, you should first check the prerequisites for Tornado.

Step #1: Email and Package Configuration¶

For testing purposes, we will use mailx to locally send email messages to the eventgw mailbox on NetEye via postfix. In a production environment, you or your Administrator will need to configure the mail infrastructure such that the eventgw mailbox on NetEye is integrated into your company’s mail infrastructure.

If you’ve just upgraded your Tornado installation, run neteye-secure-install, then make sure the Email Collector service is running:

# systemctl status tornado_email_collector.service

● tornado_email_collector.service - Tornado Email Collector - Data Collector for procmail

Loaded: loaded (/usr/lib/systemd/system/tornado_email_collector.service; disabled; vendor preset: disabled)

Drop-In: /etc/systemd/system/tornado_email_collector.service.d

└─neteye.conf

Active: active (running) since Thu 2019-06-20 19:08:53 CEST; 20h ago

Send an email to the dedicated eventgw user, which will then be processed by Tornado:

# echo "TestContent" | mail -s TestSubject eventgw@localhost

Now test that an email sent to that address makes it to Tornado (the timestamp reported by journalctl should be at most a second or two after you send the email):

# journalctl -u tornado_email_collector.service

Jun 21 15:11:59 host.example.com tornado_email_collector[12240]: [2019-06-21][15:11:59] [tornado_common::actors::uds_server][INFO] UdsServerActor - new client connected to [/var/run/tornado/email.sock]

Step #2: Service and Rule Configuration¶

Now let’s configure a simple rule that just archives the subject and sender of an email into a log file.

Here’s an example of an Event created by the Email Collector:

{

"type": "email",

"created_ms": 1554130814854,

"payload": {

"date": 1475417182,

"subject": "TestSubject",

"to": "email1@example.com",

"from": "email2@example.com",

"cc": "",

"body": "TestContent",

"attachments": []

}

}

Our rule needs to match incoming events of type email, and when one matches, extract the subject field and the from field (sender) from the payload object. Rules used when Tornado is running are found in /neteye/shared/tornado/conf/rules.d/, but we’ll model our rule based on one of the example rules found here:

/usr/lib64/tornado/examples/rules/

Since we want to match any email event, let’s adapt the matching part of the rule found in /usr/lib64/tornado/examples/rules/001_all_emails.json. And since we want to run the archive executor, let’s adapt the action part of the rule found in /usr/lib64/tornado/examples/rules/010_archive_all.json.

Here’s our new rule containing both parts:

{

"name": "all_email_messages",

"description": "This matches all email messages, extracting sender and subject",

"continue": true,

"active": true,

"constraint": {

"WHERE": {

"type": "AND",

"operators": [

{

"type": "equal",

"first": "${event.type}",

"second": "email"

}

]

},

"WITH": {}

},

"actions": [

{

"id": "archive",

"payload": {

"sender": "${event.payload.from}",

"subject": "${event.payload.subject}",

"event": "${event}",

"archive_type": "archive_mail"

}

}

]

}

Changing the “second” field of the WHERE constraint as above will cause the rule to match with any email event. In the “actions” section, we add the “sender” field which will extract the “from” field in the email, the “subject” field to extract the subject, and change the archive type to “archive_mail”. We’ll see why in Step #3.
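To make the placeholders in the “actions” section more concrete, here is a minimal Python sketch of how values such as ${event.payload.from} could be expanded against an incoming event. It is only an illustration of the idea, not Tornado’s actual implementation: the helper names resolve and render are hypothetical, and only ${event...} accessors without quoted keys are handled.

```python
import re

PLACEHOLDER = re.compile(r"\$\{([^}]+)\}")


def resolve(path, event):
    """Follow a dotted path such as 'event.payload.from' into the event."""
    keys = path.split(".")
    if keys[0] != "event":
        raise ValueError(f"unsupported accessor: {path}")
    value = event
    for key in keys[1:]:
        value = value[key]
    return value


def render(template, event):
    """Expand the ${...} placeholders of one action payload value."""
    whole = PLACEHOLDER.fullmatch(template)
    if whole:
        # A value made of a single placeholder keeps its original type.
        return resolve(whole.group(1), event)
    return PLACEHOLDER.sub(lambda m: str(resolve(m.group(1), event)), template)


# Shortened version of the example Event shown earlier.
event = {
    "type": "email",
    "created_ms": 1554130814854,
    "payload": {
        "subject": "TestSubject",
        "from": "email2@example.com",
        "body": "TestContent",
    },
}

action_payload = {
    "sender": "${event.payload.from}",
    "subject": "${event.payload.subject}",
    "event": "${event}",
    "archive_type": "archive_mail",
}

rendered = {key: render(value, event) for key, value in action_payload.items()}
print(rendered["sender"], rendered["subject"])
```

In this sketch, the “event” entry receives the whole event object, while “archive_type” is passed through unchanged because it contains no placeholder.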

Remember to save our new rule where Tornado will look for active rules, which in the default configuration is /neteye/shared/tornado/conf/rules.d/. Let’s give it a name like 030_mail_to_archive.json.

Also remember that whenever you create a new rule and save the file in that directory, you will need to restart the Tornado service. And it’s always helpful to run a check first to make sure there are no syntactic errors in your new rule:

# tornado --config-dir=/neteye/shared/tornado/conf check

# systemctl restart tornado.service

Step #3: Configure the Archive Executor¶

If you look at the file /neteye/shared/tornado/conf/archive_executor.toml, which is the configuration file for the Archive Executor, you will see that the default base archive path is set to /neteye/shared/tornado/data/archive/. Let’s keep the first part, but under “[paths]” let’s add a specific directory (relative to the base directory given for “base_path”). This will use the keyword “archive_mail”, which matches the value of the “archive_type” field in the “actions” part of our rule from Step #2, and will include our “sender” field, which extracted the sender’s address from the original event’s payload:

base_path = "/neteye/shared/tornado/data/archive/"

default_path = "/default/default.log"

file_cache_size = 10

file_cache_ttl_secs = 1

[paths]

"archive_mail" = "/email/${sender}/extracted.log"

Combining the base and specific paths yields the full path where the log file will be saved (automatically creating directories if necessary), with our “sender” variable instantiated. So if the sender was email2@example.com, the log file’s name will be:

/neteye/shared/tornado/data/archive/email/email2/extracted.log

When an email event is received, the field “event” under “payload” will be written into that file. Since we have only specified “event”, the entire event will be saved to the log file.
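The path combination described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions, not the Archive Executor’s real code: the function name archive_file is hypothetical, the configuration is held in a plain dictionary rather than read from the TOML file, the sender value email2 is taken from the example path above, and falling back to default_path when no archive_type is given is an assumption.

```python
import re

# The relevant settings from archive_executor.toml, as a plain dictionary.
config = {
    "base_path": "/neteye/shared/tornado/data/archive/",
    "default_path": "/default/default.log",
    "paths": {"archive_mail": "/email/${sender}/extracted.log"},
}


def archive_file(config, payload):
    """Return the full log file path for one archive action payload."""
    archive_type = payload.get("archive_type")
    if archive_type is None:
        # Assumption: payloads without an archive_type use default_path.
        relative = config["default_path"]
    else:
        relative = config["paths"][archive_type]
    # Replace ${name} with the payload field of the same name.
    relative = re.sub(r"\$\{(\w+)\}", lambda m: str(payload[m.group(1)]), relative)
    return config["base_path"].rstrip("/") + "/" + relative.lstrip("/")


print(archive_file(config, {"archive_type": "archive_mail", "sender": "email2"}))
```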

Step #4: Check the Resulting Email Match¶

Let’s see how our newly configured Email Collector works using a bash shell.

First we will again manually send an email to be intercepted by Tornado like this:

# echo "The email body." | mail -s "Test Subject" eventgw@localhost

Event processing should be almost immediate, so right away you can check the result of the match by looking at the log file configured by the Archive executor. There you should see the sender and subject written into the file as we specified during Step #3:

# less /neteye/shared/tornado/data/archive/email/eventgw/extracted.log

And that’s it! You’ve successfully configured Tornado to process emails and log the subject and sender to a dynamic directory per sender.

How To Match on an Event With Dynamic OIDs¶

This How-To is intended to help you create and configure rules that match Events where part of a key is dynamic. In particular, we’re looking at SNMP traps containing OIDs with an increasing counter as a suffix.

This example shows a particular Snmptrapd Collector Event with dynamic OIDs; however, it applies equally to any situation where values must be extracted from dynamically changing keys.

Understanding the Use Case¶

In some situations, Devices or Network Monitoring Systems emit SNMP Traps, appending a progressive number to the OIDs to render them uniquely identifiable. This leads to the generation of events with this format:

{

"type":"snmptrapd",

"created_ms":"1553765890000",

"payload":{

"protocol":"UDP",

"src_ip":"127.0.1.1",

"src_port":"41543",

"dest_ip":"127.0.2.2",

"PDUInfo":{

"version":"1",

"notificationtype":"TRAP"

},

"oids":{

"MWRM2-NMS-MIB::netmasterAlarmNeIpv4Address.20146578": {

"content": "127.0.0.12"

},

"MWRM2-NMS-MIB::netmasterAlarmNeStatus.20146578": {

"content": "Critical"

}

}

}

}

Here, the two entries in the oids section have a dynamic suffix consisting of a number different for each event; in this specific event, it is 20146578.

Due to the presence of the dynamic suffix, a simple path expression like ${event.payload.oids."MWRM2-NMS-MIB::netmasterAlarmNeIpv4Address".content} would be ineffective. Consequently, we need a specific solution to access the content of that changing key.

As we are going to show, the solution consists of two steps:

1. Create a Rule called my_extractor to extract the desired value from the dynamic keys

2. Create a matching Rule that uses the extracted value

Step #1: Creation of an extractor Rule¶

To access the value of the MWRM2-NMS-MIB::netmasterAlarmNeIpv4Address.?????? key, we will use the single_key_match Regex extractor in the WITH clause.

The single_key_match extractor allows defining a regular expression that is applied to the keys of a JSON object. If and only if there is exactly one key matching it, the value associated with the matched key is returned.

In our case the first rule is:

{

"name": "my_extractor",

"description": "",

"continue": true,

"active": true,

"constraint": {

"WHERE": null,

"WITH": {

"netmasterAlarmNeIpv4Address": {

"from": "${event.payload.oids}",

"regex": {

"single_key_match": "MWRM2-NMS-MIB::netmasterAlarmNeIpv4Address.[0-9]+"

}

}

}

},

"actions": []

}

This rule:

- has an empty WHERE, so it matches every incoming event

- creates an extracted variable named netmasterAlarmNeIpv4Address; this variable contains the value of the OID whose key matches the regular expression MWRM2-NMS-MIB::netmasterAlarmNeIpv4Address.[0-9]+

When the previously described event is received, the extracted variable netmasterAlarmNeIpv4Address will have the following value:

{

"content": "127.0.0.12"

}

From this point, all the rules in the same Ruleset that follow the my_extractor Rule can access the extracted value through the path expression ${_variables.my_extractor.netmasterAlarmNeIpv4Address}.
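The “exactly one matching key” behaviour of single_key_match can be illustrated with a short Python sketch. This is not Tornado’s implementation: the function below is a hypothetical stand-in, and the use of re.search (an unanchored match) is an assumption about how the pattern is applied to each key.

```python
import re


def single_key_match(pattern, obj):
    """Return the value of the only key matching pattern, or None otherwise."""
    matching_keys = [key for key in obj if re.search(pattern, key)]
    if len(matching_keys) == 1:
        return obj[matching_keys[0]]
    # Zero matches or more than one match: nothing is extracted.
    return None


# The oids object from the event shown in this How-To.
oids = {
    "MWRM2-NMS-MIB::netmasterAlarmNeIpv4Address.20146578": {"content": "127.0.0.12"},
    "MWRM2-NMS-MIB::netmasterAlarmNeStatus.20146578": {"content": "Critical"},
}

extracted = single_key_match("MWRM2-NMS-MIB::netmasterAlarmNeIpv4Address.[0-9]+", oids)
ambiguous = single_key_match("MWRM2-NMS-MIB::netmasterAlarm", oids)
print(extracted, ambiguous)
```

The second call uses a pattern that matches both keys, so in this sketch nothing is returned; this is why the regular expression should be specific enough to select a single OID.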

Step #2: Creation of the matching Rule¶

We can now create a new rule that matches on the netmasterAlarmNeIpv4Address extracted value. As we are interested in matching the IP, our rule definition is:

{

"name": "match_on_ip4",

"description": "This rule matches all events whose netmasterAlarmNeIpv4Address is 127.0.0.12",

"continue": true,

"active": true,

"constraint": {

"WHERE": {

"type": "equals",

"first": "${_variables.my_extractor.netmasterAlarmNeIpv4Address.content}",

"second": "127.0.0.12"

},

"WITH": {}

},

"actions": []

}

Now we have a rule that matches on the netmasterAlarmNeIpv4Address using a static path expression even if the source Event contained dynamically changing OIDs.

How To Use the Tornado Self-Monitoring API¶

This How To is intended to help you quickly configure the Tornado self-monitoring API server.

Before continuing, you should first check the prerequisites for Tornado.

The self-monitoring API server is created as part of the standard Tornado installation within NetEye 4. You can check it is functioning properly via curl:

# curl 127.0.0.1:4748/monitoring

<div>

<h1>Available endpoints:</h1>

<ul>

<li><a href="/monitoring/ping">Ping</a></li>

</ul>

</div>

In general it’s not safe from a security standpoint to have a server open to the world by default. In fact, the Tornado self-monitoring API server is highly locked down, and if you were to try to connect to it using the server’s external network address, or even from the non-loopback address on the server itself, you would find that it doesn’t work at all:

# curl http://192.0.2.51:4748/monitoring

curl: (7) Failed connect to 192.0.2.51:4748; Connection refused

The server process is started as part of the service tornado.service. You can check the parameters currently in use as follows:

# ps aux | grep tornado

root 6776 0.0 0.3 528980 7488 pts/0 Sl 10:02 0:00 /usr/bin/tornado --config-dir /neteye/shared/tornado/conf --logger-level=info --logger-stdout daemon

The IP address and port are not included, indicating the system is using the defaults, so we’ll need to configure the server to make it more useful.

Step #1: Setting Up the Self-Monitoring API Server¶

During installation, NetEye 4 automatically configures the Tornado self-monitoring API server to start up with the following defaults:

IP: 127.0.0.1

Port: 4748 (TCP)

Firewall: Enabled

The file that defines the service can be found at /usr/lib/systemd/system/tornado.service:

[Unit]

Description=Tornado - Event Processing Engine

[Service]

Type=simple

#User=tornado

RuntimeDirectory=tornado

ExecStart=/usr/bin/tornado \

--config-dir /neteye/shared/tornado/conf --logger-level=info --logger-stdout \

daemon

Restart=on-failure

RestartSec=3

# Other Restart options: or always, on-abort, etc

[Install]

WantedBy=neteye.target

If you want to change the default address and port, you shouldn’t just modify that file directly, since any changes would disappear after the next package update. Instead, you can modify the override file at /etc/systemd/system/tornado.service.d/neteye.conf, or create a reverse proxy in Apache, creating a /tornado/ route that forwards requests to the localhost on the desired port. With the override file, the ExecStart line would then look like this:

ExecStart=/usr/bin/tornado \

--config-dir /neteye/shared/tornado/conf --logger-level=info --logger-stdout \

daemon --web-server-ip=192.0.2.51 --web-server-port=4748

Now we’ll have to restart the Tornado service with our new parameters:

# systemctl daemon-reload

# systemctl restart tornado

Finally, if we want our REST API to be visible externally, we’ll need to either open up the port we just declared in the firewall, or use the reverse proxy described above. Otherwise, connection requests to the API server will be refused.

Step #2: Testing the Self-Monitoring API¶

You can now test your REST API in a shell, both on the server itself as well as from other, external clients:

# curl http://192.0.2.51:4748/monitoring

If you try with the browser, you should see the self-monitoring API page that currently consists of a link to the “Ping” endpoint:

http://192.0.2.51:4748/monitoring

If you click on it and see a response like the following, then you have successfully configured your self-monitoring API server:

message "pong - 2019-04-26T12:00:40.166378773+02:00"

Of course, you can do the same thing with curl, too:

# curl http://192.0.2.51:4748/monitoring/ping | jq .

{

"message": "pong - 2019-04-26T14:06:04.573329037+02:00"

}

How To Use the Monitoring Executor for setting statuses on any object¶

This How To is intended to help you configure, use, and test the Monitoring Executor, a new Tornado feature that automatically creates new hosts, services, or both when an event received by Tornado refers to objects not yet known to Icinga and the Director. The Monitoring Executor performs a process-check-result on Icinga objects, creating hosts and services in both Icinga and the Director if they do not exist already.

Before continuing, you should first check the requirements for Tornado.

Requirements for the Monitoring Executor¶

A setup is needed on both tornado and Icinga Director / Icinga 2:

Tornado¶

In a production environment, Tornado can accept events from any collector and even via an HTTP POST request with a JSON payload; we will however show how to manually send an event to Tornado from the GUI so that a suitable rule fires and creates the objects.

To allow for a correct interaction between Tornado and Icinga 2 / Icinga Director, you need to make sure that the corresponding username and password are properly set to your dedicated Tornado user in the files:

/neteye/shared/tornado/conf/icinga2_client_executor.toml

/neteye/shared/tornado/conf/director_client_executor.toml

Note

If one or both passwords are empty, you probably need to execute the neteye_secure_install script, which will take care of setting the passwords.

Icinga Director / Icinga 2¶

In Icinga, create a host template and a service template that we will then use to create the hosts, so make sure to write down the name given to the two templates.

Create a host template called host_template_example with the following properties:

Check command: dummy

Execute active checks: No

Accept passive checks: Yes

Create a service template called service_template_example with the following properties:

Check command: dummy

Execute active checks: No

Accept passive checks: Yes

Deploy this configuration to Icinga 2

Scenario¶

We will set up a rule that intercepts an event sent by the SNMPtrapd Collector, so let’s suppose that Tornado receives the following event.

{

"created_ms": 1000,

"type": "snmptrapd",

"payload": {

"src_port": "9999",

"dest_ip": "192.168.0.1",

"oids": {

"SNMPv2-MIB::snmpTrapOID.0": {

"datatype": "OID",

"content": "ECI-ALARM-MIB::eciMajorAlarm"

},

"ECI-ALARM-MIB::eciLSNExt1": {

"content": "ALM_UNACKNOWLEDGED",

"datatype": "STRING"

},

"ECI-ALARM-MIB::eciObjectName": {

"content": "service.example.com",

"datatype": "STRING"

}

},

"src_ip": "172.16.0.1",

"protocol": "UDP",

"PDUInfo": {

"messageid": 0,

"notificationtype": "TRAP",

"version": 1,

"errorindex": 0,

"transactionid": 1,

"errorstatus": 0,

"receivedfrom": "UDP: [172.16.0.1]:9999->[192.168.0.1]:4444",

"community": "public",

"requestid": 1

}

}

}

We want to use the payload of this event to carry out two actions:

Set the status of a service

Create the host and service if they do not exist already

To do so, we will build a suitable rule in Tornado, that defines the conditions for triggering the actions and exploits the Monitoring Executor’s ability to interact with Icinga to actually carry them out.

Warning

When you create your own rules, please pay attention to the correct escaping of the SNMP strings, or the rule might not fire correctly!

Step #1: Define the Rule’s Conditions¶

This first task is fairly simple: Given the event shown in the Scenario above, we want to set a critical status on the service specified in payload -> oids -> ECI-ALARM-MIB::eciObjectName -> content, when both these conditions are met:

payload -> oids -> SNMPv2-MIB::snmpTrapOID.0 -> content is equal to ECI-ALARM-MIB::eciMajorAlarm

payload -> oids -> ECI-ALARM-MIB::eciLSNExt1 -> content is equal to ALM_UNACKNOWLEDGED

We capture all these conditions by creating the following rule in the Tornado GUI (set any options not mentioned here as you prefer):

Name: choose the name you prefer

Description: a string like Set the critical status for snmptrap

Constraint: you can copy and paste it for simplicity:

"WHERE": {

"type": "AND",

"operators": [

{

"type": "equals",

"first": "${event.type}",

"second": "snmptrapd"

},

{

"type": "equals",

"first": "${event.payload.oids.\"SNMPv2-MIB::snmpTrapOID.0\".content}",

"second": "ECI-ALARM-MIB::eciMajorAlarm"

},

{

"type": "equals",

"first": "${event.payload.oids.\"ECI-ALARM-MIB::eciLSNExt1\".content}",

"second": "ALM_UNACKNOWLEDGED"

}

]

},
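To see why the escaped quotes around the OID names matter, the following Python sketch evaluates the constraint above against the relevant part of the Scenario event. It is a simplified model and not Tornado’s matcher: split_path, resolve and matches are hypothetical helpers, and only the AND and equals operators are covered. The quoted segments let a key such as SNMPv2-MIB::snmpTrapOID.0, which itself contains a dot, be treated as a single key.

```python
import re

# A path segment is either a double-quoted key or a run without dots and quotes.
SEGMENT = re.compile(r'"([^"]*)"|([^."]+)')


def split_path(path):
    """Split a path into keys, keeping quoted keys (which may contain dots) whole."""
    return [quoted or plain for quoted, plain in SEGMENT.findall(path)]


def resolve(accessor, event):
    """Resolve an accessor of the form ${event....}; return None if a key is missing."""
    keys = split_path(accessor[2:-1])  # strip the leading "${" and trailing "}"
    value = event
    for key in keys[1:]:  # keys[0] is the literal "event"
        if not isinstance(value, dict) or key not in value:
            return None
        value = value[key]
    return value


def matches(where, event):
    """Evaluate a WHERE clause made of AND and equals operators."""
    if where["type"] == "AND":
        return all(matches(op, event) for op in where["operators"])
    if where["type"] == "equals":
        return resolve(where["first"], event) == where["second"]
    raise ValueError(f"operator not covered by this sketch: {where['type']}")


where = {
    "type": "AND",
    "operators": [
        {"type": "equals", "first": "${event.type}", "second": "snmptrapd"},
        {"type": "equals",
         "first": '${event.payload.oids."SNMPv2-MIB::snmpTrapOID.0".content}',
         "second": "ECI-ALARM-MIB::eciMajorAlarm"},
        {"type": "equals",
         "first": '${event.payload.oids."ECI-ALARM-MIB::eciLSNExt1".content}',
         "second": "ALM_UNACKNOWLEDGED"},
    ],
}

# Only the parts of the Scenario event that the constraint looks at.
event = {
    "type": "snmptrapd",
    "payload": {"oids": {
        "SNMPv2-MIB::snmpTrapOID.0": {"content": "ECI-ALARM-MIB::eciMajorAlarm"},
        "ECI-ALARM-MIB::eciLSNExt1": {"content": "ALM_UNACKNOWLEDGED"},
        "ECI-ALARM-MIB::eciObjectName": {"content": "service.example.com"},
    }},
}

print(matches(where, event))
```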

Step #2: Define the Actions¶

Now that we have defined the rule’s conditions, we want the rule to trigger two actions:

a Monitoring action that will set the critical status for the service specified in payload -> oids -> ECI-ALARM-MIB::eciObjectName -> content

if necessary, create the underlying host and service in Icinga2 and in the Director

To do so we need to configure the payload of the Monitoring action as follows (check the snippet below).

action_name must be set to create_and_or_process_service_passive_check_result

process_check_result_payload must contain:

the service on which to perform the process-check-result, using the <host_name>!<service_name> notation

exit_status for a critical event, which is equal to 2

a human readable plugin_output

The fields contained in this payload correspond to those that are used by the Icinga2 APIs, therefore you can also provide more data in the payload to create more precise rules.

host_creation_payload takes care of creating the underlying host with the following properties:

a (hard-coded) host name (although it can be configured from the payload of the event)

the host template we created in the Requirements section (i.e., host_template_example)

service_creation_payload takes care of creating the underlying service with the following properties:

the name of the service as provided in payload -> oids -> ECI-ALARM-MIB::eciObjectName -> content

the service template we created in the Requirements section (i.e., service_template_example)

the host as the one specified in host_creation_payload

Warning

In process_check_result_payload it is mandatory to specify the object on which to perform the process-check-result with the field “service” (or “host”, in case of check result on a host). This means that for example specifying the object with the field “filter” is not valid

The above actions can be written as the following JSON code, that you can copy and paste within the Actions textfield of the rule. Make sure to maintain the existing square brackets in the textfield!

{
  "id": "monitoring",
  "payload": {
    "action_name": "create_and_or_process_service_passive_check_result",
    "process_check_result_payload": {
      "exit_status": "2",
      "plugin_output": "CRITICAL - Found alarm ${event.payload.oids.\"SNMPv2-MIB::snmpTrapOID.0\".content}",
      "service": "acme-host!${event.payload.oids.\"ECI-ALARM-MIB::eciObjectName\".content}",
      "type": "Service"
    },
    "host_creation_payload": {
      "object_type": "Object",
      "object_name": "acme-host",
      "imports": "host_template_example"
    },
    "service_creation_payload": {
      "object_type": "Object",
      "host": "acme-host",
      "object_name": "${event.payload.oids.\"ECI-ALARM-MIB::eciObjectName\".content}",
      "imports": "service_template_example"
    }
  }
}

Now we can save the rule and then deploy it.

Step #3: Send the Event¶

With the rule deployed, we can now use the Tornado GUI’s test window to send a payload and test the rule.

In the test window, add snmptrapd as Event Type and paste the payload of the event shown in the Scenario section as Payload. Now, with the Enable execution of actions option disabled, click on the Run test button. If everything is correct, you will see a MATCHED string appear on the left-hand side of the rule’s name. Now, enable the execution of actions and click again on the Run test button.

Now, on the NetEye GUI you should see that in both Icinga 2 and in the Director:

a new host acme-host was created–in Icinga 2 it will have state pending since we did no checks on it

a new service service.example.com was created–in Icinga 2 with state critical (may be SOFT, depending on your configuration)

Conclusions¶

In this how-to we created a specific rule for setting the critical status of a non-existing service. Of course with more information on the incoming events we may want to add different rules so that Tornado can set the status of the service to ok for example, when another event with different content arrives.

How To Use the Numerical Operators¶

This How To is intended to help you configure, use and test rules involving the numerical operators, allowing you to compare quantities in a given Event either to each other or to a constant in the rule. One important use case is in IoT, where you may be remotely measuring temperatures, humidities, and other physically measurable quantities in order to decide whether, for example, to gracefully shut down a server.

Before continuing, you should first make sure the prerequisites for Tornado are satisfied.

Step #1: Simulating Rising Temperatures¶

Because IoT hardware and its reporting software differ significantly from one installation to another, we will use the Event Simulator to simulate a rising series of temperatures (Celsius) resulting in an action to shut down a server.

To do this, we will construct an Event that we can repeat, manually changing the temperature each time:

# curl -H "content-type: application/json" \

-X POST -vvv \

-d '{"event":{"type":"iot-temp", "created_ms":111, "payload": {"temperature":55, "ip":"198.51.100.11"}}, "process_type":"Full"}' \

http://localhost:4748/api/v1_beta/event/current/send | jq .

Step #2: Configuring a Rule with Comparisons¶

To start, let’s create a rule that checks all incoming IoT temperature events, extracts the temperature and source IP field, and if the temperature is too high, uses the Archive Executor to write a summary message of the event into a log file in a “Temperatures” directory, then a subdirectory named for the source IP (this would allow us to sort temperatures by their source and keep them in different log directories). Given the “high temperature” specification, let’s choose the “greater than” operator:

| Operator | Description |
|---|---|
| gt | Greater than |
| ge | Greater than or equal to |
| lt | Less than |
| le | Less than or equal to |

All these operators can work with values of type Number, String, Bool, null and Array, but we will just use Number for temperatures.

Now it’s time to build our rule. The event needs to be both of type iot-temp and to have its temperature measurement be greater than 57 (Celsius), which we will do by comparing the computed value of ${event.payload.temperature} to the number 57:

{

"description": "This rule logs when a temperature is above a given value.",

"continue": true,

"active": true,

"constraint": {

"WHERE": {

"type": "AND",

"operators": [

{

"type": "equal",

"first": "${event.type}",

"second": "iot-temp"

},

{

"type": "gt",

"first":"${event.payload.temperature}",

"second": 57

}

]

},

"WITH": {}

},

"actions": [

{

"id": "archive",

"payload": {

"event": "At ${event.created_ms}, device ${event.payload.ip} exceeded the temperature limit at ${event.payload.temperature} degrees.",

"archive_type": "iot_temp",

"source": "${event.payload.ip}"

}

}

]

}
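The effect of the gt comparison in this rule can be checked with a small Python sketch. It is only a model of the rule’s WHERE clause for numeric values, not Tornado’s matcher; the names COMPARATORS, compare, rule_matches and make_event are hypothetical.

```python
import operator

# The four numerical operators from the table above, for Number values only.
COMPARATORS = {
    "gt": operator.gt,
    "ge": operator.ge,
    "lt": operator.lt,
    "le": operator.le,
}


def compare(op, first, second):
    """Apply one of the numerical operators to two numbers."""
    return COMPARATORS[op](first, second)


def rule_matches(event, op="gt", limit=57):
    """Model of the WHERE clause: the type must be iot-temp AND the comparison must hold."""
    return event["type"] == "iot-temp" and compare(
        op, event["payload"]["temperature"], limit
    )


def make_event(temperature):
    """Build an event shaped like the one sent with curl in Step #1."""
    return {
        "type": "iot-temp",
        "created_ms": 111,
        "payload": {"temperature": temperature, "ip": "198.51.100.11"},
    }


results = {t: rule_matches(make_event(t)) for t in (55, 57, 59)}
print(results)
```

With gt and a limit of 57, a reading of exactly 57 does not match; choosing ge instead would include it.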

We’d like our rule to output a meaningful message to the archive log, for instance:

At 17:43:22, device 198.51.100.11 exceeded the temperature limit at 59 degrees.

Our log message that implements string interpolation should then have the following template:

At ${event.created_ms}, device ${event.payload.ip} exceeded the temperature limit at ${event.payload.temperature} degrees.

So our rule needs to check incoming events of type iot-temp, and when one matches, extract the relevant fields from the payload object.

Remember to save our new rule where Tornado will look for active rules, which in the default configuration is /neteye/shared/tornado/conf/rules.d/. Let’s give it a name like 040_hot_temp_archive.json.

Also remember that whenever you create a new rule and save the file in that directory, you will need to restart the Tornado service. And it’s always helpful to run a check first to make sure there are no syntactic errors in your new rule:

# tornado --config-dir=/neteye/shared/tornado/conf check

# systemctl restart tornado.service

Step #3: Configure the Archive Executor¶

If you look at the file /neteye/shared/tornado/conf/archive_executor.toml, which is the configuration file for the Archive Executor, you will see that the default base archive path is set to /neteye/shared/tornado/data/archive/. Let’s keep the first part, but under “[paths]” let’s add a specific directory (relative to the base directory given for “base_path”). This will use the keyword “iot_temp”, which matches the “archive_type” in the “actions” part of our rule from Step #2, and will include our “source” field, which extracted the source IP from the original event’s payload:

base_path = "/neteye/shared/tornado/data/archive/"

default_path = "/default/default.log"

file_cache_size = 10

file_cache_ttl_secs = 1

[paths]

"iot_temp" = "/temp/${source}/too_hot.log"

Combining the base and specific paths yields the full path where the log file will be saved (automatically creating directories if necessary), with our “source” variable instantiated. So if the source IP was 198.51.100.11, the log file’s name will be:

/neteye/shared/tornado/data/archive/temp/198.51.100.11/too_hot.log

Then whenever an IoT temperature event is received above the declared temperature, our custom message with the values for time, IP and temperature will be written out to the log file.

Step #4: Watch Tornado “in Action”¶

Let’s observe how our newly configured temperature monitor works using a bash shell. Open a shell and trigger the following events manually:

# curl -H "content-type: application/json" \

-X POST -vvv \

-d '{"event":{"type":"iot-temp", "created_ms":111, "payload": {"temperature":55, "ip":"198.51.100.11"}}, "process_type":"Full"}' \

http://localhost:4748/api/v1_beta/event/current/send | jq .

# curl -H "content-type: application/json" \

-X POST -vvv \

-d '{"event":{"type":"iot-temp", "created_ms":111, "payload": {"temperature":57, "ip":"198.51.100.11"}}, "process_type":"Full"}' \

http://localhost:4748/api/v1_beta/event/current/send | jq .

So far if you look at our new log file, you shouldn’t see anything at all. After all, the two temperature events so far haven’t been greater than 57 degrees, so they haven’t matched our rule:

# cat /neteye/shared/tornado/data/archive/temp/198.51.100.11/too_hot.log

<empty>

And now our server has gotten hot. So let’s simulate the next temperature reading:

# curl -H "content-type: application/json" \

-X POST -vvv \

-d '{"event":{"type":"iot-temp", "created_ms":111, "payload": {"temperature":59, "ip":"198.51.100.11"}}, "process_type":"Full"}' \

http://localhost:4748/api/v1_beta/event/current/send | jq .

Now you should see our custom message written into the file we specified during Step #3:

# cat /neteye/shared/tornado/data/archive/temp/198.51.100.11/too_hot.log

At 17:43:22, device 198.51.100.11 exceeded the temperature limit at 59 degrees.

Wrapping Up

That’s it! You’ve successfully configured Tornado to respond to high temperature events by logging them in a directory specific to temperature sensor readings for each individual network device.

You can also use different executors, such as the Icinga 2 Executor, to send IoT events as monitoring events straight to Icinga 2 where you can see the events in a NetEye dashboard. The Icinga documentation shows you which commands the executor must implement to achieve this.

How To Simulate Events¶

This How To is intended to help you learn how to simulate incoming events (such as monitoring events, network events, email or SMS messages) in order to test that the rules you configure will properly match those events and correctly invoke the chosen actions.

Before continuing, you should first check the prerequisites for Tornado.

Step #1: Checking for Rule Matches¶

First let’s test whether we can correctly match a rule. To do this, we will purposefully not execute any actions should a rule match. This capability is designed into Tornado’s Event Simulator by setting the process_type to SkipActions. The possible values for process_type are:

Full: the event is processed and linked actions are executed

SkipActions: the event is processed but actions are not executed

So let’s put that in our JSON request, and add some dummy values for the required fields. We’ll pipe the results through the jq utility for now so that we can more easily interpret the results:

# curl -H "content-type: application/json" \

-X POST -vvv \

-d '{"event":{"type":"something", "created_ms":111, "payload": {}}, "process_type":"SkipActions"}' \

http://localhost:4748/api/v1_beta/event/current/send | jq .

* About to connect() to localhost port 4748 (#0)

* Trying ::1...

* Connection refused

* Trying 127.0.0.1...

* Connected to localhost (127.0.0.1) port 4748 (#0)

> POST /api/v1_beta/event/current/send HTTP/1.1

> User-Agent: curl/7.29.0

> Host: localhost:4748

> Accept: */*

> content-type: application/json

> Content-Length: 93

>

* upload completely sent off: 93 out of 93 bytes

< HTTP/1.1 200 OK

< content-length: 653

< content-type: application/json

< date: Mon, 20 May 2019 15:22:35 GMT

<

{ [data not shown]

100 746 100 653 100 93 12933 1841 --:--:-- --:--:-- --:--:-- 12803

* Connection #0 to host localhost left intact

{

"event": {

"type": "something",

"created_ms": 111,

"payload": {}

},

"result": {

"type": "Rules",

"rules": {

"rules": {

"all_emails": {

"rule_name": "all_emails",

"status": "NotMatched",

"actions": [],

"message": null

},

"emails_with_temperature": {

"rule_name": "emails_with_temperature",

"status": "NotMatched",

"actions": [],

"message": null

},

"archive_all": {

"rule_name": "archive_all",

"status": "Matched",

"actions": [

{

"id": "archive",

"payload": {

"archive_type": "one",

"event": {

"created_ms": 111,

"payload": {},

"type": "something"

}

}

}

],

"message": null

},

"icinga_process_check_result": {

"rule_name": "icinga_process_check_result",

"status": "NotMatched",

"actions": [],

"message": null

}

},

"extracted_vars": {}

}

}

}

What we sent is copied in the event field. The result of the matching process is the value in the result field. If you look at the rule_name fields, you can see the four rules that were checked: all_emails, emails_with_temperature, archive_all, and icinga_process_check_result.

Looking at the status field, we can see that only the archive_all rule matched our incoming event, while the remaining rules have status NotMatched with empty actions fields. The actions field for our matched rule can help inform us what would happen if we had selected the process_type Full instead of SkipActions.
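If you prefer to script this check rather than read the jq output by eye, the request and the response handling can be sketched in Python as below. This is a minimal sketch, not part of Tornado: the function names build_request, matched_rules and simulate are hypothetical, the endpoint is the one used in the curl command above, and the response is assumed to have the same shape as the output shown above (a result of type Rules).

```python
import json
import urllib.request

# Endpoint taken from the curl example above.
SEND_URL = "http://localhost:4748/api/v1_beta/event/current/send"


def build_request(event_type, payload, process_type="SkipActions", created_ms=111):
    """Build the JSON body for the send endpoint (hypothetical helper)."""
    if process_type not in ("Full", "SkipActions"):
        raise ValueError("process_type must be 'Full' or 'SkipActions'")
    return {
        "event": {"type": event_type, "created_ms": created_ms, "payload": payload},
        "process_type": process_type,
    }


def matched_rules(response):
    """Return the names of the rules whose status is 'Matched'."""
    rules = response["result"]["rules"]["rules"]
    return [name for name, outcome in rules.items() if outcome["status"] == "Matched"]


def simulate(event_type, payload, url=SEND_URL):
    """POST a SkipActions event and return the matched rule names.

    Needs a running Tornado instance; nothing here is called at import time.
    """
    body = json.dumps(build_request(event_type, payload)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"content-type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request) as reply:
        return matched_rules(json.load(reply))
```

Because build_request defaults to SkipActions, calling simulate("something", {}) does not execute any actions; with the rules shown above it would be expected to report only archive_all.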

Step #2: Actions after Matches¶

If we repeat the same command as above, but with process_type set to Full, then that Archive action will be executed. We won’t repeat that command here because on a production system, it can be dangerous to execute an action unless you know what you are doing. The effects of poorly configured actions can include shutting down your entire monitoring server, or crashing the server or VM.

If you have configured your Archive Executor, we can now check the results of running that command. This executor is relatively safe, as it just writes the input event into a log file. So for instance if we configured the executor to save data in /test/all.log, we should be able to see the output immediately:

# cat /neteye/shared/tornado/data/archive/test/all.log

{"type":"something","payload":{},"created_ms":111}

How To Use the SNMP Trap Daemon Collector¶

This How To is intended to help you configure, use and test the SNMP Trap Daemon Collector in your existing NetEye Tornado installation.

Before continuing, you should first check the prerequisites for Tornado.

Step #1: Verify that the SNMP Trap Daemon is Working Properly¶

Restart the SNMP Trap service to be certain it has loaded the latest configuration:

# systemctl restart snmptrapd.service

To verify that the SNMP Trap daemon started correctly, run the following command and check that the output looks like this (especially the “loaded successfully” line):

# journalctl -u snmptrapd

Apr 16 11:00:22 tornadotest systemd[1]: Starting Simple Network Management Protocol (SNMP) Trap Daemon....

Apr 16 11:00:23 tornadotest snmptrapd[14872]: The snmptrapd_collector was loaded successfully.

Then test that the SNMP Trap daemon is receiving SNMP events properly by sending a fake SNMP message with the command:

# snmptrap -v 2c -c public localhost '' 1.3.6.1.4.1.8072.2.3.0.1 1.3.6.1.4.1.8072.2.3.2.1 i 123456

Now run the command journalctl -u snmptrapd again. You should see that output similar to this has been appended to the end of the journal:

Apr 16 11:08:31 tornadotest snmptrapd[14924]: localhost [UDP: [127.0.0.1]:60889->[127.0.0.1]:162]: Trap , DISMAN-EVENT-MIB::sysUpTimeInstance = Timeticks: (6558389) 18:13:03.89, SNMPv2-MIB::snmpTrapOID.0 = OID: NET-SNMP-EXAMPLES-

Apr 16 11:08:31 tornadotest snmptrapd[14924]: perl callback function 0x557bffdca698 returns 1

Apr 16 11:08:31 tornadotest snmptrapd[14924]: perl callback function 0x557bfff44988 returns 1

Apr 16 11:08:31 tornadotest snmptrapd[14924]: ACK

If you do not see these lines, or the “loaded successfully” message above, there is a problem with your SNMP Trap Collector that must be addressed before continuing with this How To.
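The “loaded successfully” check can also be automated. The following is a minimal sketch under the assumption that the marker text is exactly the one shown in the journal output above; collector_loaded and read_snmptrapd_journal are hypothetical helper names.

```python
import subprocess

# Marker text copied from the journal output shown above.
LOADED_MARKER = "The snmptrapd_collector was loaded successfully."


def collector_loaded(journal_lines):
    """Return True if any journal line reports that the collector was loaded."""
    return any(LOADED_MARKER in line for line in journal_lines)


def read_snmptrapd_journal():
    """Fetch the snmptrapd journal (requires systemd and sufficient privileges)."""
    result = subprocess.run(
        ["journalctl", "-u", "snmptrapd", "--no-pager"],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.splitlines()
```

On a NetEye host, collector_loaded(read_snmptrapd_journal()) would then tell you whether to continue with this How To.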

Step #2: Configuring SNMP Trap Collector Rules¶

Unlike other collectors, the SNMP Trap Collector does not run in its own process; instead, it runs as inline Perl code within the snmptrapd service. For reference, you can find it here:

/usr/share/neteye/tornado/scripts/snmptrapd_collector.pl

To start, let’s create a rule that matches all incoming SNMP Trap events, extracts the source IP field, and uses the Archive Executor to write the entire event into a log file in a directory named for the source IP (this would allow us to keep events from different network devices in different log directories). The SNMP Trap Collector produces a JSON structure, which we will serialize to write into the file defined in Step #3.

A JSON structure representing an incoming SNMP Trap Event looks like this:

{

"type":"snmptrapd",

"created_ms":"1553765890000",

"payload":{

"protocol":"UDP",

"src_ip":"127.0.1.1",

"src_port":"41543",

"dest_ip":"127.0.2.2",

"PDUInfo":{

"version":"1",

"errorstatus":"0",

"community":"public",

"receivedfrom":"UDP: [127.0.1.1]:41543->[127.0.2.2]:162",

"transactionid":"1",

"errorindex":"0",

"messageid":"0",

"requestid":"414568963",

"notificationtype":"TRAP"

},

"oids":{

"iso.3.6.1.2.1.1.3.0":"67",

"iso.3.6.1.6.3.1.1.4.1.0":"6",

"iso.3.6.1.4.1.8072.2.3.2.1":"2"

}

}

}

So our rule needs to match incoming events of type snmptrapd, and when one matches, extract the src_ip field from the payload object. Although the rules used when Tornado is running are found in /neteye/shared/tornado/conf/rules.d/, we’ll model our rule based on one of the example rules found here:

/usr/lib64/tornado/examples/rules/

Since we want to match any SNMP event, let’s adapt the matching part of the rule found in /usr/lib64/tornado/examples/rules/001_all_emails.json. And since we want to run the archive executor, let’s adapt the action part of the rule found in /usr/lib64/tornado/examples/rules/010_archive_all.json.

Here’s our new rule containing both parts:

{

"name": "all_snmptraps",

"description": "This matches all snmp events",

"continue": true,

"active": true,

"constraint": {

"WHERE": {

"type": "AND",

"operators": [

{

"type": "equal",

"first": "${event.type}",

"second": "snmptrapd"

}

]

},

"WITH": {}

},

"actions": [

{

"id": "archive",

"payload": {

"event": "${event}",

"source": "${event.payload.src_ip}",

"archive_type": "trap"

}

}

]

}

Changing the “second” field of the WHERE constraint as above will cause the rule to match with any SNMP event. In the “actions” section, we add the “source” field which will extract the source IP, and change the archive type to “trap”. We’ll see why in Step #3.
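To make the matching logic concrete, here is a small Python sketch of how a WHERE clause like the one above could be evaluated against an event. It is an illustration only, not Tornado's matcher: it handles just the AND and equal operators used in this rule, and resolve and where_matches are hypothetical names.

```python
import re

# Matches accessors such as ${event}, ${event.type} or ${event.payload.src_ip}.
ACCESSOR = re.compile(r"^\$\{event((?:\.[^.}]+)*)\}$")


def resolve(value, event):
    """Resolve an ${event...} accessor against the event; return other values unchanged."""
    match = ACCESSOR.match(value) if isinstance(value, str) else None
    if match is None:
        return value
    current = event
    for key in filter(None, match.group(1).split(".")):
        current = current[key]
    return current


def where_matches(where, event):
    """Evaluate an AND node whose children are 'equal' operators."""
    if where["type"] == "AND":
        return all(where_matches(op, event) for op in where["operators"])
    if where["type"] == "equal":
        return resolve(where["first"], event) == resolve(where["second"], event)
    raise ValueError("unsupported operator: " + where["type"])
```

With the sample SNMP Trap event shown earlier, where_matches returns True for the rule's WHERE clause, and resolve("${event.payload.src_ip}", event) yields the value that ends up in the “source” field of the action.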

Remember to save our new rule where Tornado will look for active rules, which in the default configuration is /neteye/shared/tornado/conf/rules.d/. Let’s give it a name like 030_snmp_to_archive.json.

Also remember that whenever you create a new rule and save the file in that directory, you will need to restart the Tornado service. And it’s always helpful to run a check first to make sure there are no syntactic errors in your new rule:

# tornado --config-dir=/neteye/shared/tornado/conf check

# systemctl restart tornado.service
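Before running the check above, you may want a quick pre-check that every rule file is at least well-formed JSON. The sketch below is a supplementary helper, not a replacement for the tornado check command: it only detects JSON syntax errors, and invalid_rule_files is a hypothetical name.

```python
import json
from pathlib import Path


def invalid_rule_files(rules_dir):
    """Return (file name, error message) pairs for rule files that are not valid JSON."""
    problems = []
    for path in sorted(Path(rules_dir).glob("*.json")):
        try:
            json.loads(path.read_text(encoding="utf-8"))
        except json.JSONDecodeError as error:
            problems.append((path.name, str(error)))
    return problems
```

For example, invalid_rule_files("/neteye/shared/tornado/conf/rules.d/") returns an empty list when all rule files parse correctly.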

Step #3: Configure the Archive Executor¶

If you look at the file /neteye/shared/tornado/conf/archive_executor.toml, which is the configuration file for the Archive Executor, you will see that the default base archive path is set to /neteye/shared/tornado/data/archive/. Let’s keep the first part, but under “[paths]” let’s add a specific directory (relative to the base directory given for “base_path”). This will use the keyword “trap”, which matches the “archive_type” in the “actions” part of our rule from Step #2, and will include our “source” field, which extracted the source IP from the original event’s payload:

base_path = "/neteye/shared/tornado/data/archive/"

default_path = "/default/default.log"

file_cache_size = 10

file_cache_ttl_secs = 1

[paths]

"trap" = "/trap/${source}/all.log"

Combining the base and specific paths yields the full path where the log file will be saved (automatically creating directories if necessary), with our “source” variable instantiated. So if the source IP was 127.0.0.1, the log file’s name will be:

/neteye/shared/tornado/data/archive/trap/127.0.0.1/all.log
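The way the two path fragments combine can be sketched in a few lines of Python. This only illustrates the path arithmetic described above and is not the Archive Executor's code; archive_path is a hypothetical helper, and the standard library's string.Template is used because it happens to accept the same ${name} syntax.

```python
from string import Template


def archive_path(base_path, path_template, variables):
    """Join base_path with a relative template after filling in its ${...} variables."""
    relative = Template(path_template).substitute(variables)
    return base_path.rstrip("/") + "/" + relative.lstrip("/")
```

Calling archive_path("/neteye/shared/tornado/data/archive/", "/trap/${source}/all.log", {"source": "127.0.0.1"}) produces the full path shown above. In this sketch a missing variable raises a KeyError.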

When an SNMP event is received, the field “event” under “payload” will be written into that file. Since we have only specified “event”, the entire event will be saved to the log file.

Step #4: Watch Tornado “in Action”¶

Let’s observe how our newly configured SNMP Trap Collector works using a bash shell. If you want to see what happens when an event is processed, open two separate shells to:

Show internal activity in the matcher engine

Send SNMP events manually, and display the results

In the first shell, run the following command to see the result of rule matches in real-time:

# journalctl -f -u snmptrapd

In the second shell, we will manually initiate simulated SNMP Trap events like this:

# snmptrap -v 2c -c public localhost '' 1.3.6.1.4.1.8072.2.3.0.1 1.3.6.1.4.1.8072.2.3.2.1 i 123456

What you should see is that when the SNMP event is initiated in the second shell, output appears in the first shell, indicating that the event has been successfully matched. In addition, we can now look at the result of the match by looking at the log file configured by the archive executor.

There you should see the full event written into the file we specified during Step #3:

/neteye/shared/tornado/data/archive/trap/127.0.0.1/all.log

Wrapping Up¶

That’s it! You’ve successfully configured Tornado to respond to SNMP trap events by logging them in a directory specific to each network device.

You can also use different executors, such as the Icinga 2 Executor, to send SNMP Trap events as monitoring events straight to Icinga 2 where you can see the events in a NetEye dashboard. The Icinga documentation shows you which commands the executor must implement to achieve this.

How To Use the Icinga2 Executor with String Interpolation¶

This advanced How To is intended to help you configure, use and test the Icinga2 Executor in combination with the String Interpolation feature, creating a passive check-only monitoring service result with dynamic generation of the check’s resulting content. The general approach however can be used to dynamically execute Icinga 2 actions (or any other action) based on the content of the event.

Before continuing, you should first check the prerequisites for Tornado.

Step #1: Prerequisites¶

Tornado:

For testing purposes we will manually send an event to Tornado via the shell. In a production environment, Tornado can accept events from any collector and even via an HTTP POST request with a JSON payload.

Make sure that the username and password are properly set to your dedicated Tornado user in Icinga 2:

/neteye/shared/tornado/conf/icinga2_client_executor.toml

Icinga Director / Icinga 2:

Create a host called host.example.com with no particular requirements

Create a service template with the following properties:

Check command: dummy

Execute active checks: No

Accept passive checks: Yes

Create a service called my_dummy on the host host.example.com that imports the previously created service template

Deploy this configuration to Icinga 2

Step #2: Service and Rule Configuration¶

Below is an example of an event that we will use throughout this How To, including sending it to Tornado. For now, keep it handy as you read the next section, since the rules we will configure are based on this specific format:

{

"type": "dummy_passive_check",

"created_ms": 1000,

"payload": {

"hostname": "host.example.com",

"service": "my_dummy",

"exit_status": "2",

"measured": {

"result1": "0.1",

"result2": "98"

}

}

}

Now let’s configure a rule with the following WHERE constraints:

It matches events of type dummy_passive_check

It requires the service name to be my_dummy

It contains the critical exit_status 2

We can achieve this by creating the following rule in /neteye/shared/tornado/conf/rules.d/, in a file called 900_icinga2_my_checkresult_crit.json:

{

"description": "Set the critical status for my_dummy checks in Icinga 2",

"continue": true,

"active": true,

"constraint": {

"WHERE": {

"type": "AND",

"operators": [

{

"type": "equal",

"first": "${event.type}",

"second": "dummy_passive_check"

},

{

"type": "equal",

"first": "${event.payload.service}",

"second": "my_dummy"

},

{

"type": "equal",

"first": "${event.payload.exit_status}",

"second": "2"

}

]

},

"WITH": {}

},

"actions": []

}

In addition, we want our rule to trigger an Icinga 2 action with a passive check result that:

Applies to the my_dummy service of the host in ${event.payload.hostname}

Sets the exit_status to critical (=2)

Adds a human readable plugin_output

Adds a machine readable performance_data field with two simple static thresholds:

result1 perfdata: contains imaginary millisecond duration, with 300ms warn and 500ms crit threshold

result2 perfdata: contains imaginary percentages, with 80% warn and 95% crit
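The performance data string follows the usual monitoring-plugin layout label=value[UOM];warn;crit;min, with items separated by spaces. As a rough sketch (perfdata_item and perfdata are hypothetical helper names, not part of Tornado or Icinga 2), the two items described above could be assembled like this:

```python
def perfdata_item(label, value, uom="", warn="", crit="", minimum=""):
    """Format one performance data item as label=value[UOM];warn;crit;min."""
    return "{}={}{};{};{};{}".format(label, value, uom, warn, crit, minimum)


def perfdata(items):
    """Join several items with spaces, as used in a performance_data string."""
    return " ".join(perfdata_item(**item) for item in items)
```

In the rule itself the values are not computed in Python, of course; they are filled in by Tornado from placeholders such as ${event.payload.measured.result1}.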

Now let’s add these desired actions to the rule above to create this final version:

{

"description": "Set the critical status for my_dummy checks in Icinga 2",

"continue": true,

"active": true,

"constraint": {

"WHERE": {

"type": "AND",

"operators": [

{

"type": "equal",

"first": "${event.type}",

"second": "dummy_passive_check"

},

{

"type": "equal",

"first": "${event.payload.service}",

"second": "my_dummy"

},

{

"type": "equal",

"first": "${event.payload.exit_status}",

"second": "2"

}

]

},

"WITH": {}

},

"actions": [

{

"id": "icinga2",

"payload": {

"icinga2_action_name": "process-check-result",

"icinga2_action_payload": {

"exit_status": "${event.payload.exit_status}",

"plugin_output": "CRITICAL - Result1 is ${event.payload.measured.result1}ms Result2 is ${event.payload.measured.result2}%",

"performance_data": "result_1=${event.payload.measured.result1}ms;300.0;500.0;0.0 result_2=${event.payload.measured.result2}%;80;95;0",

"filter": "host.name==\"${event.payload.hostname}\" && service.name==\"${event.payload.service}\"",

"type": "Service"

}

}

}

]

}

Remember that whenever you create a new rule or edit an existing one, you will need to restart the Tornado service. It is also helpful to run a check to make sure there are no syntactic errors in your new rule:

# tornado --config-dir=/neteye/shared/tornado/conf check

# systemctl restart tornado.service

If you performed all the above steps correctly, you should notice that whenever an event matches the rule, the body of the generated action will no longer contain any of the original placeholders ${event.payload.*}. In fact, they are replaced by the actual values extracted from the event. If one or more placeholders cannot be resolved, the entire action will fail.
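The substitution behaviour described here can be illustrated with a short Python sketch. It is an approximation for explanatory purposes, not Tornado's implementation: interpolate and UnresolvedPlaceholder are hypothetical names, and only simple dotted paths made of letters, digits and underscores are supported.

```python
import re

# Matches placeholders such as ${event.payload.measured.result1}.
PLACEHOLDER = re.compile(r"\$\{event((?:\.[A-Za-z0-9_]+)*)\}")


class UnresolvedPlaceholder(Exception):
    """Raised when a placeholder points at a value missing from the event."""


def interpolate(template, event):
    """Replace every ${event...} placeholder in template with the value from event."""

    def lookup(match):
        current = event
        for key in filter(None, match.group(1).split(".")):
            if not isinstance(current, dict) or key not in current:
                raise UnresolvedPlaceholder(match.group(0))
            current = current[key]
        return str(current)

    return PLACEHOLDER.sub(lookup, template)
```

Applied to the plugin_output template of the rule and the example event from the beginning of this step, interpolate yields a string with no placeholders left, while a template that references a missing field raises UnresolvedPlaceholder, mirroring the "entire action will fail" behaviour.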

Step #3: Send the Event and Set the Status¶

Open a browser and verify that you have deployed the required configuration to Icinga 2. This can be done by navigating to the Overview > Services > host.example.com: my_dummy service (note that this link will not work if you have not followed all the steps above). You should see that it is still in the Pending state as no active checks have been executed.

We can now use the tornado-send-event helper command to send the JSON content of a file to the Tornado API. So now create a file called payload.json with the following content in your home directory:

{

"type": "dummy_passive_check",

"created_ms": 1000,

"payload": {

"hostname": "host.example.com",

"service": "my_dummy",

"exit_status": "2",

"measured": {

"result1": "0.1",

"result2": "98"

}

}

}

Send it to Tornado using the following command:

# tornado-send-event ~/payload.json

This should trigger our rule and produce a response similar to the following:

{

"event": {

"type": "dummy_passive_check",

"created_ms": 1000,

"payload": {

"service": "my_dummy",

"measured": {

"result1": "0.1",

"result2": "98"

},

"exit_status": "2",

"hostname": "host.example.com"

}

},

"result": {

"type": "Rules",

"rules": {

"rules": {

[...omitted...]

"icinga2_my_checkresult_crit": {

"rule_name": "icinga2_my_checkresult_crit",

"status": "Matched",

"actions": [

{

"id": "icinga2",

"payload": {

"icinga2_action_name": "process-check-result",

"icinga2_action_payload": {

"exit_status": "2",

"filter": "host.name==\"host.example.com\" && service.name==\"my_dummy\"",

"performance_data": "result_1=0.1ms;300.0;500.0;0.0 result_2=98%;80;95;0",

"plugin_output": "CRITICAL - Result1 is 0.1ms Result2 is 98%",

"type": "Service"

}

}

}

],

"message": null

},

[...omitted...]

"extracted_vars": {}

}

}

}

Now open your browser and check the service in Icinga 2 again. You’ll see that it has NOT changed yet. This behavior is intentional: in order to avoid triggering actions accidentally, the tornado-send-event command executes no actions by default. We can tell Tornado to actually execute the actions by passing the -f flag to the script as follows:

# tornado-send-event ~/payload.json -f

Checking the Service once again should now show that it has turned red and its state has become soft critical. Depending on your configuration, after a few additional executions it will end up in the hard critical state.

As you may notice, if we change the exit_status in the event payload to anything other than 2, the rule will no longer match since we filter out everything that is not a critical event. Adding another rule that filters only on OK states (exit_status == 0), and then sets the service state to an OK state, is left as an exercise to the reader.

How To Send vSphereDB Events or Alarms to Tornado¶

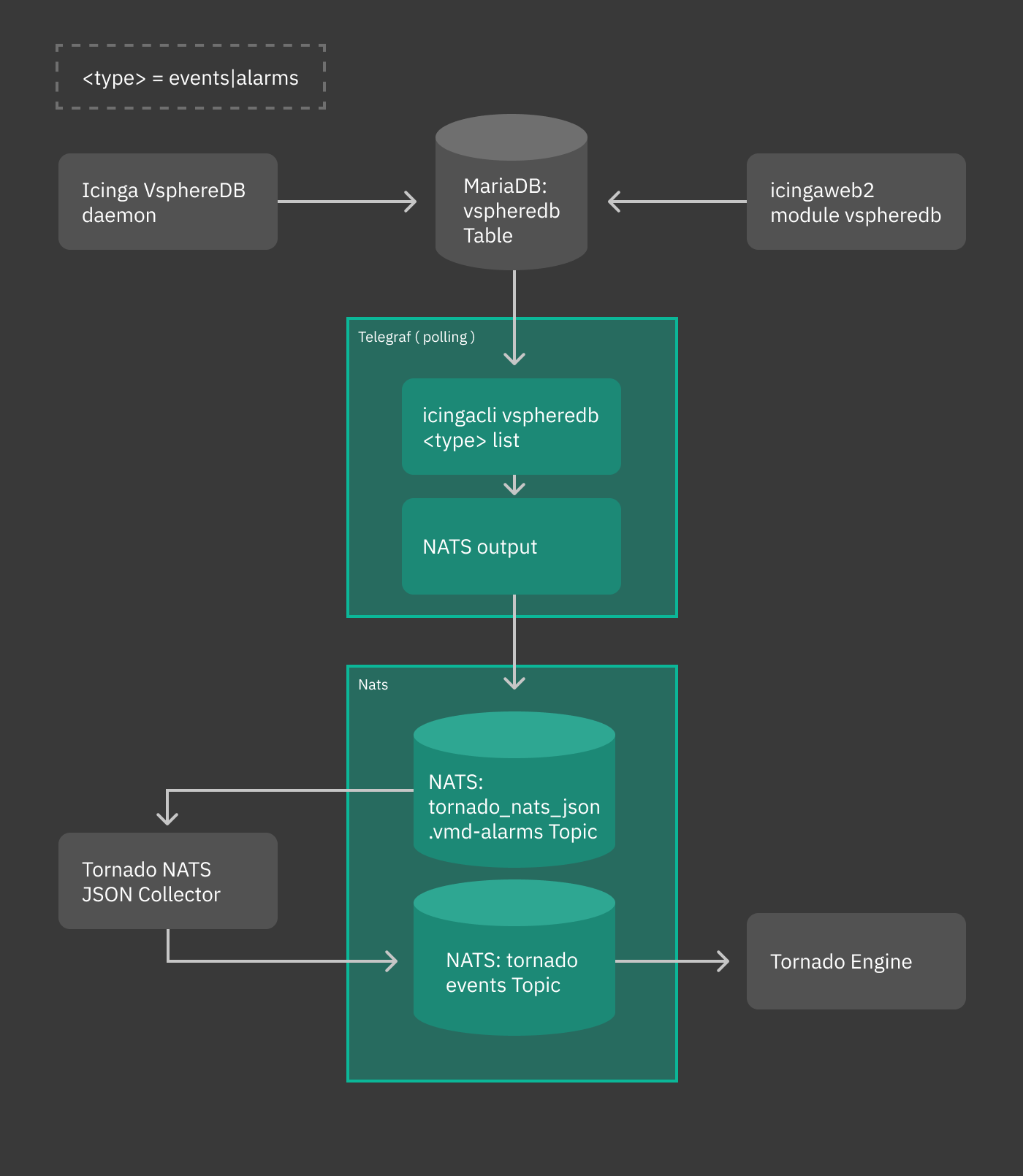

This How To is intended to explain the workflow for sending all events and alarms from vSphereDB (VMware) to Tornado, so that you can use the Monitoring Executor to send passive check results.

You can see a sketch of the workflow in the following diagram.

Fig. 147 vsphere workflow¶

In our scenario, we receive an alarm about a virtual machine whose memory usage surpasses a given threshold. We want a new host to be created, with a service in CRITICAL status. To do so, we also make use of the recently introduced Monitoring Executor.

Overview¶

Events and alarms collected by vSphere/VMD are sent to telegraf, then converted to JSON and fed to the Tornado NATS JSON Collector. All this part of the process is automatic. When installed, suitable configuration files are written in order to simplify the whole process of intercommunication across these services.

Once the event or the alarm reaches Tornado, it can be processed by writing appropriate rules to react to that event or alarm. This is the only part of the whole process that requires some effort.

In the remainder of this how to we give some high level description of the various involved parts and conclude with the design of a rule that matches the event output by vSphere.

Telegraf plugin¶

The telegraf plugin is used to collect the metrics from the vSphereDB, i.e. events or alarms, via the exec input plugin. This input plugin executes the icingacli command icingacli vspheredb <events/alarms> list --mark-as-read to fetch them in JSON format from the vSphereDB, and sends them to NATS using the nats output plugin.

To keep track of the events and alarms sent, a database table is created, which stores the last fetched IDs of the events and alarms.

Warning

When updating Tornado in existing installations, the latest event and alarm will be marked. Only more recent events and alarms will then be sent to Tornado, to avoid flooding the Tornado engine.

NATS JSON Collector¶

The newly introduced NATS JSON Collector will receive the vSphere events and alarms in JSON format from the NATS communication channel and then convert them into the internal Tornado Event structure, and forward them to the Tornado Engine.

Tornado Event Rule¶

Now, suppose you receive the following alarm from vSphere:

{

"type": "tornado_nats_json.vmd-alarms",

"created_ms": 1596023834321,

"payload": {

"data": {

"fields": {

"alarm_name": "Virtual machine memory usage",

"event_type": "AlarmStatusChangedEvent",

"full_message": "Alarm Virtual machine memory usage on pulp2-repo-vm changed from Gray to Red",

"moref": "vm-165825",

"object_name": "pulp2-repo-vm",

"object_type": "VirtualMachine",

"overall_status": "red",

"status_from": "gray",

"status_to": "red"

},

"name": "exec_vmd_alarm",

"tags": {

"host": "0f0892f9a8cf"

},

"timestamp": 1596023830

}

}

}

This alarm reports that the memory usage status of a virtual machine changed from Gray to Red, along with other information. For incoming alarms like this one, we want Tornado to emit a notification. We therefore need to define a Constraint that matches the alarm above:

{

"WHERE": {

"type": "AND",

"operators": [

{

"type": "equals",

"first": "${event.type}",

"second": "tornado_nats_json.vmd-alarms"

},

{

"type": "equals",

"first": "${event.payload.data.fields.event_type}",

"second": "AlarmStatusChangedEvent"

},

{

"type": "equals",

"first": "${event.payload.data.fields.status_to}",

"second": "red"

}

]

},

"WITH": {}

}

The corresponding Actions can be defined as in the following sample snippet:

[

{

"id": "monitoring",

"payload": {

"action_name": "create_and_or_process_service_passive_check_result",

"host_creation_payload": {

"object_type": "Object",

"imports": "vm_template",

"object_name": "${event.payload.data.fields.object_name}"

},

"service_creation_payload": {

"object_type": "Object",

"host": "${event.payload.data.fields.object_name}",

"imports": "vm_alarm_service_template",

"object_name": "${event.payload.data.fields.event_type}"

},

"process_check_result_payload": {

"type": "Service",

"service": "${event.payload.data.fields.object_name}!${event.payload.data.fields.event_type}",

"exit_status": "2",

"plugin_output": "CRITICAL - Found alarm '${event.payload.data.fields.alarm_name}' ${event.payload.data.fields.full_message}"

}

}

}

]

This action creates a new host with the following characteristics, retrieved from the JSON sent from vSphere:

the name of the host will be extracted from the event.payload.data.fields.object_name field, therefore it will be pulp2-repo-vm

the associated service will be defined from the event.payload.data.fields.event_type field, hence AlarmStatusChangedEvent
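To see which host and service names the action ends up with for a given alarm, the lookups can be sketched in Python as follows. This is only an illustration of the placeholder resolution used in the action payload; resolve and check_result_target are hypothetical helpers, and the host!service form is the one used in the “service” entry of process_check_result_payload above.

```python
def resolve(path, event):
    """Follow a dotted path such as 'payload.data.fields.object_name' into the event."""
    current = event
    for key in path.split("."):
        current = current[key]
    return current


def check_result_target(event):
    """Return (host, service, 'host!service') derived from a vSphere alarm event."""
    host = resolve("payload.data.fields.object_name", event)
    service = resolve("payload.data.fields.event_type", event)
    return host, service, "{}!{}".format(host, service)
```

For the sample alarm shown earlier, the third element is the string that the action passes as the service of the passive check result.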

How To Use the Webhook Collector¶

Webhooks in the Tornado Webhook Collector are defined by JSON files, which must be stored in the folder /neteye/shared/tornado_webhook_collector/conf/webhooks/. Examples of webhook configurations for the Tornado Webhook Collector can be found in the directory /usr/share/doc/tornado-<tornado_version>/examples/webhook_collector_webhooks/.

Detailed information on how to configure webhooks in Tornado can be found in the official Tornado documentation; in particular, the Webhook Collector documentation describes the architecture of the Webhook Collector and its configuration parameters and options.

In NetEye, you can send events to the Tornado Webhook Collector by posting the events to the endpoint

https://<neteye-hostname>/tornado/webhook/event/<webhook_id>?token=<webhook_token>

where <webhook_id> and <webhook_token> are respectively the id and the token defined in the webhook’s JSON configuration.
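As a minimal sketch of a client for this endpoint, the following Python builds the URL from its three parts and posts a JSON document to it. The helper names webhook_url and post_event are hypothetical, and the JSON content type is an assumption; the body your webhook expects depends on its own JSON configuration.

```python
import json
import urllib.parse
import urllib.request


def webhook_url(neteye_hostname, webhook_id, webhook_token):
    """Build the Webhook Collector endpoint for one webhook."""
    path = "/tornado/webhook/event/" + urllib.parse.quote(webhook_id, safe="")
    query = urllib.parse.urlencode({"token": webhook_token})
    return "https://{}{}?{}".format(neteye_hostname, path, query)


def post_event(url, event):
    """POST a JSON document to the webhook (requires a reachable NetEye host)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(event).encode("utf-8"),
        headers={"content-type": "application/json"},  # assumed content type
        method="POST",
    )
    with urllib.request.urlopen(request) as reply:
        return reply.status
```

Escaping the id and the token keeps the URL valid even when they contain characters such as spaces or ampersands.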

How To Modify Extracted Variables¶

This How-To is intended to help you create rules that modify extracted variables to simplify their usage by the Rule’s Actions.

Understanding the Use Case¶

We want to set the monitoring status of the windows_host Host in reaction to a Tornado Event. To achieve this, we need to call the Icinga API using exactly the same hostname; however, in some cases, the incoming events could contain the hostname in uppercase.

We can consider this Event as an example:

{

"type":"snmptrapd",

"created_ms":"1553765890000",

"payload":{

"protocol":"UDP",

"src_ip":"127.0.1.1",

"src_port":"41543",

"dest_ip":"127.0.2.2",

"hostname": "WINDOWS_HOST"

}

}

In this case, to correctly match our Host when calling the Icinga API, we need to transform the ${event.payload.hostname} value before it is sent.

Creation of an extractor Rule¶

To achieve our objective we will use a WITH clause with some modifiers_post:

{

"WITH": {

"hostname": {

"from": "${event.payload.hostname}",

"regex": {

"match": ".*",

"group_match_idx": 0

},

"modifiers_post": [

{

"type": "Lowercase"

}

]

}

}

}

This WITH clause creates an extracted variable hostname that:

is initially populated with the string WINDOWS_HOST extracted from the payload;

then, has its value altered by the Lowercase modifier that sets it to windows_host

From this point, the lowercased variable can be used by the Rule’s action with the usual path expression ${_variables.hostname}.
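The two stages (regex extraction, then post modifiers) can be mimicked in Python to check what value a given input would produce. This is a sketch under assumptions: extract_variable is a hypothetical helper, only the Lowercase modifier from this How To is modelled, and Python's re module may differ from Tornado's regex engine in corner cases.

```python
import re

# Only the modifier used in this How To; other modifier types are not covered here.
MODIFIERS = {"Lowercase": str.lower}


def extract_variable(value, match, group_match_idx, modifiers_post=()):
    """Extract a regex group from value, then apply the post modifiers in order."""
    found = re.search(match, value)
    if found is None:
        raise ValueError("the regex did not match")
    result = found.group(group_match_idx)
    for modifier in modifiers_post:
        result = MODIFIERS[modifier["type"]](result)
    return result
```

Calling extract_variable("WINDOWS_HOST", ".*", 0, [{"type": "Lowercase"}]) mirrors the WITH clause above: group 0 of the match is the whole string, which the Lowercase step then converts.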

So, the full rule could be:

{

"name": "my_extractor",

"description": "",

"continue": true,

"active": true,

"constraint": {

"WHERE": null,

"WITH": {

"hostname": {

"from": "${event.payload.hostname}",

"regex": {

"match": ".*",

"group_match_idx": 0

},

"modifiers_post": [

{

"type": "Lowercase"

}

]

}

}

},

"actions": [

{

"id": "icinga2",

"payload": {

"icinga2_action_name": "process-check-result",

"icinga2_action_payload": {

"exit_status": "1",

"plugin_output": "",

"filter": "host.name==\"${_variables.hostname}\"",

"type": "Host"

}

}

}

]

}

How To migrate Tornado to NATS¶

In this chapter we are going to explain how to migrate the communication channel inside Tornado from simple TCP connections to a communication via NATS server. In order to do so, you will need to modify the configuration files of both the Tornado Engine and of the Tornado Collectors.

Prerequisites:¶

NATS server already configured inside NetEye

Warning

Support for TCP communication in Tornado will be removed in the next version, therefore remember to migrate the configuration before the next upgrade!

Tornado Engine migration¶

The configuration file of the Tornado engine is:

/neteye/shared/tornado/conf/tornado.toml

The steps required are:

To disable the TCP communication: you need to set the entry event_tcp_socket_enabled to false, as follows:

event_tcp_socket_enabled = false

Warning

When event_tcp_socket_enabled is set to false, the entries event_socket_ip and event_socket_port will be ignored by Tornado

To enable NATS communication: you need to set the entry nats_enabled to true as follows:

nats_enabled = true

Set the address of the NATS server that Tornado needs to connect with. By default it is set to nats-server.neteyelocal:4222. If this does not suit your configuration you need to set the address(es) accordingly:

nats.client.addresses = ["nats-server.neteyelocal:4222"]

Restart the Tornado daemon.

If you are on a NetEye single instance:

systemctl restart tornado.service

If you are on a cluster environment:

pcs resource restart tornado

More information about the settings of the Tornado configuration file can be found in the official documentation of Tornado.

Tornado Collectors migration¶

Each Tornado Collector has its own configuration file, which can be found at the path:

/neteye/shared/tornado_<COLLECTOR_NAME>/conf/<COLLECTOR_NAME>.toml

For example, the configuration file of the Webhook Collector is located at:

/neteye/shared/tornado_webhook_collector/conf/webhook_collector.toml

You need to configure each Tornado Collector as follows:

If still present in the configuration file of the collector, please remove these two deprecated entries:

tornado_event_socket_ip = [...]

tornado_event_socket_port = [...]

Comment out the entries related to TCP communication, i.e.:

# tcp_socket_ip = [...]

# tcp_socket_port = [...]

Uncomment (and if needed adjust to your configuration) the entries related to NATS communication, i.e.:

nats.client.addresses = ["nats-server.neteyelocal:4222"]

nats.subject = "tornado.events"

Restart the Tornado Collector.

If you are on a NetEye single instance:

systemctl restart tornado_<COLLECTOR_NAME>.service

If you are on a cluster environment:

pcs resource restart tornado_<COLLECTOR_NAME>