Concepts¶

Architecture of NetEye SIEM Module¶

The SIEM module is based on the Elastic stack and is intended to provide various means to manage–collect, process, and sign–log files produced by NetEye and by the various services running on it.

SIEM in computer security refers to a set of practices whose purpose is to collect log files from different hosts and services, usually running on the internal network infrastructure of a company or enterprise, and process them for disparate purposes including security analysis, data compliance, log auditing, reporting, alerting, performance analysis, and much more.

Typical components of a SIEM solution include:

a log collector, which can be multiple software that concur to receive log files and convert them to a given format

a storage facility, typically a (distributed) database

a visualisation engine, to create dashboards and reports

some kind of time-stamping solution, to provide data unchangeability, useful for log auditing and compliance to laws and regulations

NetEye SIEM Module components¶

NetEye SIEM solution is mostly based on the Elastic Stack components, in particular:

Elasticsearch and Elasticsearch cluster

Elasticsearch can be installed in different modalities, the simplest being as a service running on a NetEye single instance.

When running a NetEye Cluster with the SIEM module installed, Elasticsearch can be run as either a parallel Elasticsearch Cluster or as an Elastic node within the NetEye cluster. Please refer to NetEye’s Cluster Architecture for details.

Elasticsearch, regardless of how it is installed, is used in the context of SIEM for multiple purposes:

as a database to store all the log files that have been collected and processed

as a search engine over the data stored

to process data (though this function is carried out also by other components, see below)

- BEAT

A Beat is a small, self-contained agent installed on devices within an infrastructure (mostly servers and workstations) that acts as a client to send data to a centralised server where they are processed in a suitable way.

Beats are part of the Elastic Stack; they gather data and send them to Logstash.

There are different types of Beat agents available, each tailored for a different type of data. BEATs supported by NetEye are described in section The Elastic Beat feature.

- Logstash

Logstash is responsible for the collection of logs, (pre-)processing them, and forwarding them to the defined storage: an Elasticsearch cluster or to El Proxy. Logs are collected from disparate sources, including Beats, syslog, and REST endpoints.

- El Proxy

The purpose of the Elastic Blockchain Proxy is to receive data from Logstash and process: first, the hash of the data is calculated, then data are signed and saved into a blockchain, which guarantees their unchangeability over time, and finally everything is sent to Elastic. Please refer to section El Proxy Architecture for more information.

- Kibana

A GUI for Elasticsearch, its functionalities include:

visualise data stored in Elasticsearch

create dashboards for quick data access

define queries against the underlying Elasticsearch

integration with Elastic’s SIEM module for log analysis and rule-based threats detection

use of machine-learning to improve log analysis

More information about these components can be found in the remainder of this section.

General Elasticsearch Cluster Information¶

In order to avoid excessive, useless network traffic generated when the cluster reallocates shards across cluster nodes after you restart an Elasticsearch instance, NetEye employs systemd post-start and pre-stop scripts to automatically enable and disable shard allocation properly on the current node whenever the Elasticsearch service is started or stopped by systemctl.

Details on how shard allocation works can be found here.

Note

By starting a stopped Elasticsearch instance, shard allocation will be enabled globally for the entire cluster. So if you have more than one Elasticsearch instance down, shards will be reallocated in order to prevent data loss.

- Therefore best practice is to:

Never keep an Elasticsearch instance stopped on purpose. Stop it only for maintenance reasons (e.g. for restarting the server) and start it up again as soon as possible.

Restart or stop/start one Elasticsearch node at a time. If something bad happens and multiple Elasticsearch nodes go down, then start them all up again together.

Elastic Only Nodes¶

From Neteye 4.9 is possible to install Elastic-only nodes in order to improve elasticsearch performance without a full Neteye installation.

To create an elastic only node you have to create an entry of type

ElasticOnlyNodes in the file /etc/neteye-cluster as in the following

example. Syntax is the same used for standard Node

{ "ElasticOnlyNodes": [

{

"addr" : "192.168.47.3",

"hostname" : "neteye03.neteyelocal",

"hostname_ext" : "rdneteye03.si.wp.lan"

}

]

}

Voting Only Nodes¶

From Neteye 4.16 is possible to install Voting-only nodes in order to add a node dedicated only to provide quorum. If SIEM module is installed this node provides a voting-only functionalities also to Elasticsearch cluster.

This functionality is achieved configuring the node as voting-only master-eligible node specifying the

variable ES_NODE_ROLES="master, voting_only" in the sysconfig file

/neteye/local/elasticsearch/conf/sysconfig/elasticsearch-voting-only.

Voting-only node is defined in /etc/neteye-cluster as in the following example

{ "VotingOnlyNode": {

"addr" : "192.168.47.3",

"hostname" : "neteye03.neteyelocal",

"hostname_ext" : "rdneteye03.si.wp.lan",

"id" : 3

}

}

Please note that VotingOnlyNode is a json obect and not an array because you can have a single Voting-only node in a NetEye cluster.

Elasticsearch Clusters¶

Design and Configuration¶

With NetEye 4 we recommend that you use at least 3 nodes to form an Elasticsearch cluster. If nevertheless you decide you need a 2-node cluster, our next recommendation is that you talk with a Würth Phoenix NetEye Solution Architect who can fully explain the risks in your specific environment and help you develop strategies that can mitigate those risks.

Elasticsearch coordination

subsystem

is in charge to choose which nodes can form a quorum (note that all

NetEye cluster nodes are master eligible by default). If Log Manager is

installed, the neteye_secure_install script will properly set

seed_hosts and initial_master_nodes according to Elasticsearch’s

recommendations and no manual intervention is required.

neteye_secure_install will set two options to configure cluster

discovery:

discovery.seed_hosts: ["host1", "host2", "host3"]

cluster.initial_master_nodes: ["node1"]

Please note that the value for initial_master_nodes will be set only on the first installed node of the cluster (it is optional on other nodes and if set it must be the same for all nodes in the cluster). Option seed_hosts will be set on all cluster nodes, included Elastic Only nodes, and will have the same value on all nodes.

Elasticsearch reverse proxy¶

Starting with NetEye 4.13, NGINX has been added to NetEye. NGINX acts as a reverse proxy, by exposing a single endpoint and acting as a load-balancer, to distribute incoming requests across all nodes and, in this case, to all Elasticsearch instances. This solution improves the overall performance and reliability of the cluster.

The elasticsearch endpoint is reachable at URI https://elasticsearch.neteyelocal:9200/. Please note that this is the same port used before so no additional change is required; old certificates used for elastic are still valid with the new configuration.

All services connected elastic stack services like Kibana, Logstash and Filebeat have been updated in order to reflect this improvement and to take advantages of the new load balancing feature.

Elasticsearch Only Nodes¶

Large NetEye installations, especially those running the SIEM feature module, will often have multi-node clusters where some nodes are only running Elasticsearch. An Elasticsearch Only node can be setup with a procedure similar to NetEye nodes.

An Elasticsearch Only node does not belong to the RedHat cluster so that it runs a local master-eligible Elasticsearch service, connected to all other Elasticsearch nodes.

El Proxy¶

El Proxy (also called Elastic Blockchain Proxy) allows a secure live signature of log streams from Logstash to Elasticsearch.

It provides protection against data tampering by transforming an input stream of plain logs into a secured blockchain where each log is cryptographically signed.

Warning

NetEye administrators have unrestricted control over El Proxy logs stored on Elasticsearch and over the acknowledgement indices (see Acknowledging Corruptions of a Blockchain). Therefore, we strongly suggest following the Principle of Least Privilege, investing the appropriate time and effort to ensure that the people on NetEye have the right roles and the minimum permissions.

Architecture¶

From an high level point of view, three are the main components of the architecture:

The first component is Logstash, which collects logs from various sources and sends them to the El Proxy using the

json_batchformat of Elastic’s http-output plugin.Note

Due to the fact that the El Proxy does not provide persistence, Logstash should always be configured to take care of the persistence of the involved logs pipelines.

The second component is El Proxy itself, which receives batches of logs from Logstash, signs every log with a cryptographic key used only once, and, finally, forwards the signed logs to the Elasticsearch Bulk API;

The third component is Elasticsearch, which acquires the signed logs from El Proxy and persists them on the dedicated index.

How the El Proxy works¶

El Proxy uses a set of Signature Keys to sign the incoming logs and then sends them to Elasticsearch. Each log file is signed with a different Signature Key (seeded from the previous Signature Key); the signature includes the hash of the previous log. The logs that for any reason cannot be indexed in Elasticsearch are written in a Dead Letter Queue.

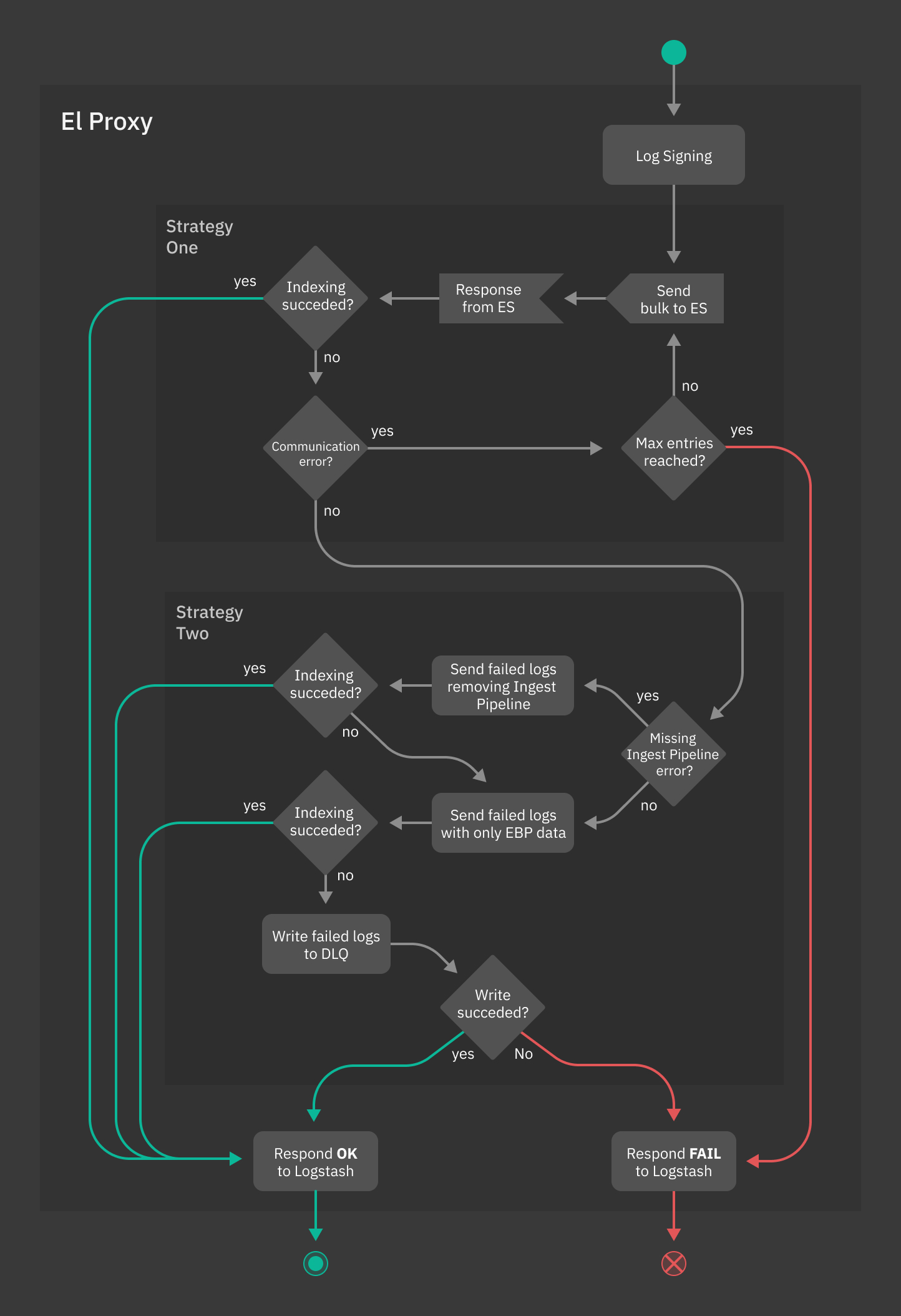

The flowchart depicted in Fig. 203 offers a high-level overview on the process followed by El Proxy to sign a batch of logs. You can notice in particular how El Proxy handles Signature Keys once a batch of logs is written (or not written).

Fig. 203 El Proxy flowchart Overview¶

Health Checks¶

To make sure that the blockchain is sound and no errors occurred during the collection of the logs, NetEye defines different Health Checks to alert the users of any irregularities that may occur.

logmanager-blockchain-creation-status: Makes sure that all logs are written correctly into the blockchain.logmanager-blockchain-keys: Checks the status of the Signature Keys backups used to sign the logs.logmanager-blockchain-missing-elasticsearch-pipeline: Checks if any Elasticsearch ingest pipeline used to enrich logs is missing.

REST Endpoints¶

The El Proxy receives data from REST endpoints:

log endpoint:

description: Receives and processes a single log message in JSON format

path : /api/log

method: POST

request type: JSON

request example:

{ "entry": "A log message" }

response: The HTTP Status code 200 is used to signal a successful processing. Other HTTP status codes indicate a failure.

log_batch endpoint:

description: Receives and processes an array of log messages in JSON format

path : /api/log_batch

method: POST

request type: JSON

request example:

[ { "entry": "A log message", "other": "Additional values...", "EBP_METADATA": { "agent": { "type": "auditbeat", "version": "7.10.1" }, "customer": "neteye", "retention": "6_months", "blockchain_tag": "0", "event": { "module": "elproxysigned" }, "pipeline": "my_es_ingest_pipeline" } }, { "entry1": "Another log message", "entry2": "Another log message", "EBP_METADATA": { "agent": { "type": "auditbeat", "version": "7.10.1" }, "customer": "neteye", "retention": "6_months", "blockchain_tag": "0", "event": { "module": "elproxysigned" }, "pipeline": "my_es_ingest_pipeline" } }, { "entry": "Again, another message", "EBP_METADATA": { "agent": { "type": "auditbeat", "version": "7.10.1" }, "customer": "neteye", "retention": "6_months", "blockchain_tag": "0", "event": { "module": "elproxysigned" } } } ]

response: The HTTP Status code 200 is used to signal a successful processing. Other HTTP status codes indicate a failure.

DeadLetterQueue status endpoint:

description: Returns the status of the Dead Letter Queue. The status contains information on whether the DLQ is empty or not.

path : /api/v1/status/dlq

method: GET

response: The status of the DLQ in JSON format.

response example:

{ "empty": true }

KeysBackup status endpoint:

description: Returns the status of the keys backup. The status contains information on whether any key backup is present in the

{data_backup_dir}folder.path : /api/v1/status/keys_backup

method: GET

response: The status of the Keys Backup in JSON format.

response example:

{ "empty": true }

Log Signature Flow - Generation of Signature Keys¶

The El Proxy achieves secure logging by authentically encrypting each log record with an individual cryptographic key used only once and protects the integrity of the whole log archive by a cryptographic authentication code.

The encryption keys are generated on-the-fly when needed and saved on

the filesystem in the {data_dir} folder. Each key is bound to a

specific customer, module, retention policy and blockchain tag; they

are saved in the filesystem with the following naming convention:

{data_dir}/{customer}/{module}-{customer}-{retention_policy}-{blockchain_tag}_key.json

When a new key is generated, a copy of the key file is created in the {data_backup_dir} folder

with the same naming convention.

As soon as a new key file is created in the {data_backup_dir} folder, the Icinga2 service

logmanager-blockchain-keys-neteyelocal will enter in CRITICAL state, indicating

that a new key has been generated on the system. The new key must be moved in a safe place such

as a password manager or a restricted access storage.

The content of the key file is in the form:

{

"key": "initial_key",

"iteration": 0

}

Where:

keyis the encryption key to be used to sign the next incoming logiteration: is the iteration number for which the signature key is valid.

Every time a log is signed, a new pair key/iteration is generated starting from the last one. The new pair will have the following values:

keyequals to the SHA256 hash of the previous keyiterationequals to the previous iteration incremented by one

For example, if the key at iteration 10 is:

{

"key": "abcdefghilmno",

"iteration": 10

}

the next key will be:

{

"key": "d1bf0c925ec44e073f18df0d70857be56578f43f6c150f119e931e85a3ae5cb4",

"iteration": 11

}

This mechanism creates a blockchain of keys that cannot be altered without breaking the validity of the entire chain.

In addition, every time a set of logs is successfully sent to Elasticsearch, the specific key file is updated with the new value. Consequently, every reference to the previous keys is removed from the system making it impossible to recover and reuse old keys.

However, in case of unmanageable Elasticsearch errors, the El Proxy will reply with an error message to Logstash and will reuse the keys of the failed call for the next incoming logs.

Note

To be valid, the iteration values of signed logs in

Elasticsearch should be incremental with no missing or duplicated

values.

When the first log is received after its startup, the El Proxy calls

Elasticsearch to query for the last indexed log iteration value to

determine the correct iteration number for the next log. If the

last log iteration value returned from Elasticsearch is greater

than the value stored in the key file, the El Proxy will fail to

process the log.

Log Signature Flow - How are signature keys used¶

For each incoming log, the El Proxy retrieves the first available encryption key, as described in the previous section, and then uses it to calculate the HMAC-SHA256 hash of the log.

The calculation of the HMAC hash takes into account:

the log itself as received from Logstash

the iteration number

the timestamp

the hash of the previous log

At this point, the signed log is a simple JSON object composed by the following fields:

All fields of the original log : all fields from the original log message

ES_BLOCKCHAIN: an object containing all the El Proxy’s calculated values. They are:

fields: fields of the original log used by the signature process

hash: the hmac hash calculate as described before

previous_hash: the hmac hash of the previous log message

iteration: the iteration number of the signature key

timestamp_ms: the signature epoch timestamp in milliseconds

For example, given this key:

{

"key": "d1bf0c925ec44e073f18df0d70857be56578f43f6c150f119e931e85a3ae5cb4",

"iteration": 11

}

when this log is received:

{

"value": "A log message",

"origin": "linux-apache2",

"EBP_METADATA": {

"agent": {

"type": "auditbeat",

"version": "7.10.1"

},

"customer": "neteye",

"retention": "6_months",

"blockchain_tag": "0",

"event": {

"module": "elproxysigned"

}

}

}

then this signed log will be generated:

{

"value": "A log message",

"origin": "linux-apache2",

"EBP_METADATA": {

"agent": {

"type": "auditbeat",

"version": "7.10.1"

},

"customer": "neteye",

"retention": "6_months",

"blockchain_tag": "0",

"event": {

"module": "elproxysigned"

}

},

"ES_BLOCKCHAIN": {

"fields": {

"value": "A log message",

"origin": "linux-apache2"

},

"hash": "HASH_OF_THE_CURRENT_LOG",

"previous_hash": "HASH_OF_THE_PREVIOUS_LOG",

"iteration": 11,

"timestamp_ms": 123456789

}

}

The diagram shown in Fig. 204 offers a detailed view on how El Proxy uses the Signature Keys to sign a batch of Logs.

Fig. 204 El Proxy Log Signing flowchart¶

Below you can find the meaning of the variables used in the flowchart.

- SIG_KEY(b)

The key used to sign the logs of a given Blockchain b.

- ES_LAST_LOG(b)

The log with greatest iteration saved in Elasticsearch for the Blockchain b.

Log Signature Flow - How the index name is determined¶

The name of the Elasticsearch index for the signed logs is determined by the content of

the EBP_METADATA field of the incoming Log.

The index name has the following structure:

{EBP_METADATA.agent.type}-{EBP_METADATA.agent.version}-{EBP_METADATA.event.module}-{EBP_METADATA.customer}-{EBP_METADATA.retention}-{EBP_METADATA.blockchain_tag}-YYYY.MM.DD

The following rules and constraints are valid:

All of these fields are mandatory:

EBP_METADATA.agent.typeEBP_METADATA.customerEBP_METADATA.retentionEBP_METADATA.blockchain_tag

The

YYYY.MM.DDpart of the index name is based on the epoch timestamp of the signatureIf the

{EBP_METADATA.event.module}is not present, El Proxy will use by defaultelproxysigned

For example, given this log is received on 23 March, 2021:

{

"value": "A log message",

"origin": "linux-apache2",

"EBP_METADATA": {

"agent": {

"type": "auditbeat",

"version": "7.10.1"

},

"customer": "neteye",

"retention": "6_months",

"blockchain_tag": "0",

"event": {

"module": "elproxysigned"

}

}

}

Then the inferred index name is: auditbeat-7.10.1-elproxysigned-neteye-6_months-0-2021.03.23

As a consequence of the default values and of the default Logstash configuration,

most of the indexes created by El Proxy will have elproxysigned in the name.

Consequently, special care should be applied when manipulating those indexes and documents;

in particular, the user must not delete or rename *-elproxysigned-* indices manually nor alter

the content of ES_BLOCKCHAIN or EBP_METADATA fields as any change could lead to a broken

blockchain.

How Elasticsearch Ingest Pipeline are defined for each log¶

A common use case is that logs going through El Proxy need to be enriched by some Elasticsearch Ingest Pipeline when they are indexed in Elasticsearch.

El Proxy supports this use case and allows its callers to specify, for each log, the ID

of Ingest Pipeline that needs to enrich the log. The ID of the Ingest Pipeline is

defined by the field EBP_METADATA.pipeline of the incoming log.

If EBP_METADATA.pipeline is left empty, the log will not be preprocessed by any

specific Ingest Pipeline.

Sequential logs processing¶

An important aspect to bear in mind of is that the log requests for the same blockchain are always processed sequentially by El Proxy. This means that, when a batch of logs is received from Logstash, it is queued in an in-memory queue and it will be processed only when all the previously received requests are completed.

This behavior is required to assure that the blockchain is kept coherent

with no holes in the iteration sequence.

Nevertheless, as no parallel processing is possible for a single blockchain, this puts some hard limits on the maximum throughput reachable.

Blockchain Verification¶

After having performed the verification setup, it is possible to use the verify subcommand provided by El Proxy, to ensure the underlying blockchain was not changed or corrupted. The command retrieves the signature data of each log stored in the Elasticsearch blockchain, recalculates the hash of each log and compares it with the one stored in the signature, reporting a CorruptionId for all non-matching logs of the blockchain. The CorruptionId can then also be used with the acknowledge command, as explained in section Acknowledging Corruptions of a Blockchain.

By default, the command verifies always two batches in parallel, however the argument

--concurrent-batches allows the user to increase the amount of parallel workers.

The flowchart depicted in figure Fig. 205 provides a detailed view of the operations performed during the verification process and the reporting of discovered corruptions.

Fig. 205 El Proxy verification process Overview¶

For more information on the verify subcommand and its parameters, please consult the associated configuration section.

Verification and Retention Policies

The blockchain stored in Elasticsearch is subject to a specific retention policy which defines how long the logs will be kept. When the logs reach their maximum age, Elasticsearch deletes them. If the logs inside the blockchain get deleted as an effect of the retention policy, it is impossible to verify the blockchain from start to end. For this reason, El Proxy must consider the retention policy and thus start verifying the blockchain from the first present log.

El Proxy uses a so-called Blockchain State History (BSH) file to retrieve the first present log inside the blockchain. This file is updated on every successful verification and contains one entry for each day that specifies the first and last iteration of the indexed logs for that day.

When the verify command is executed, El Proxy fetches the BSH file searching for the entry corresponding to the first day that still contains all the logs at the moment of the verification. If the entry is present, El Proxy can retrieve the first iteration for that day and start the verification from the iteration specified in the entry. If the entry is not present or El Proxy cannot get the first iteration from the BSH, then El Proxy directly queries Elasticsearch to retrieve the first present log.

Note that although querying Elasticsearch is an option, the only way for El Proxy to verify the blockchain’s integrity is to retrieve the first iteration from the BSH. For this reason, if the first log is retrieved by querying Elasticsearch, the verify command will throw a warning after the successful verification. For more information about warnings and errors that could appear during the verification, please consult associated section.

Verification with Retries

The elasticsearch-indexing-delay parameter of the verify command can help when the Blockchain subject to verification contains recently created logs. The reason is that Elasticsearch might take some time to index a document; therefore, El Proxy could be trying to verify a batch in which some logs are missing. In order to avoid the erroneous failure of the verification, if El Proxy detects that some logs could be missing because of an indexing delay of Elasticsearch, it repeats the whole batch verification. The parameter elasticsearch-indexing-delay defines the maximum allowed time in seconds for Elasticsearch to index a document. If Elasticsearch takes more than elasticsearch-indexing-delay seconds to index a log, the verification will fail (see El Proxy Configuration). If we name timestamp_next_log the timestamp of the next present log and timestamp_verification the timestamp of the log verification, El Proxy considers a log as missing due to Elasticsearch indexing delay whenever timestamp_next_log > timestamp_verification - elasticsearch-indexing-delay. It is worth noticing that El Proxy will always retry the batch verification for a finite amount of time, corresponding to elasticsearch-indexing-delay seconds in the worst case.

Error Handling¶

El Proxy implements two different recovery strategies in case of errors. The first one is a simple retry strategy used in case of unrecoverable communication errors with Elasticsearch; the second one, instead, is used in case Elasticsearch has issues with processing some of the sent logs and aims at addressing a widely known issue named backpressure.

Figure Fig. 206 shows how El Proxy integrates the two aforementioned strategies, which will be thoroughly explained in the next paragraphs.

Fig. 206 Error Handling in El Proxy¶

Strategy One: Bulk retry¶

El Proxy implements an optional retry strategy to handle communication errors with Elasticsearch; when enabled (see the Configuration section), whenever a generic error is returned by Elasticsearch, El Proxy will retry for a fixed amount of times to resubmit the same signed_logs to Elasticsearch.

This strategy permits to deal, for example, with temporary networking issues without forcing Logstash to resubmit the logs for a new from-scratch processing.

Nevertheless, while this can be useful in dealing with a large set of use cases, it should also be used very carefully. In fact, due to the completely sequential nature of the blockchain processing, a too high number of retries could lead to an ever growing queue of logs waiting to be processed while El Proxy is busy with processing over and over again the same failed logs.

In conclusion, whether it is better to let El Proxy fail fast or retry more times is a decision that needs a careful, case-by-case analysis.

Strategy Two: Single Log Reprocessing and Dead Letter Queue¶

In some cases, Elasticsearch can correctly process the log bulk request but it can fail to index some of the contained logs. In this situation, El Proxy reprocesses only those failed logs and follows a procedure aimed at ensuring that each signed log will be indexed in Elasticsearch.

The procedure is the following:

If the log indexing error is caused by the fact that the Elasticsearch Ingest Pipeline specified for the log does not exist in Elasticserach, then:

El Proxy tries to index again the log without specifying any Ingest Pipeline. When doing this, El Proxy will also add the tag

_ebp_remove_pipelineto the Elasticsearch document, so that once the log is indexed, it will be visible that the Ingest Pipeline was bypassed during the document indexing.If the indexing fails again, El Proxy proceeds with step 2 of this procedure.

El Proxy removes from the failed log all the fields not required by the signature process and sends the modified document to Elasticsearch. Note that also all the document tags (including the

_ebp_remove_pipelinetag) will be removed from the document.This strategy is based on the assumption that the indexing of the specific log fails due to incompatible operations requested by a pipeline. For example, a pipeline could attempt to lowercase a non-string field causing the failure. Consequently, resubmitting the log without the problematic fields could lead to a successful indexing.

If after following this procedure some logs are still not indexed in Elasticsearch, then El Proxy will dump the failed logs to a Dead Letter Queue on the filesystem and it will send an ok response to Logstash. Usually, when this happens, the blockchain will be in an incoherent state caused by holes in the iteration numeric progression. There is no automatic way to recover from this state. The administrator is in charge of investigating the issue and manually acknowledge the problem.

As mentioned before, the Dead Letter Queue is saved on the filesystem inside the

{dlq_dir} folder as set in the configuration file.

The Dead Letter Queue consists of a set of text files in Newline delimited JSON format

with each row in a file representing a failed log.

These files are grouped by customer and index name following the naming convention:

{dlq_dir}/{customer}/{index_name}.ndjson

where {index_name} is the name of the Elasticsearch index where the failed logs

were supposed to be written.

Each entry in the Dead Letter Queue file contains the original log whose indexation failed and, if present, the Elasticsearch error that caused the failure. For example:

{

"document": {

"value": "A log message",

"origin": "linux-apache2",

"EBP_METADATA": {

"agent": {

"type": "auditbeat",

"version": "7.10.1"

},

"customer": "neteye",

"retention": "6_months",

"blockchain_tag": "0",

"event": {

"module": "elproxysigned"

}

},

"ES_BLOCKCHAIN": {

"fields": {

"value": "A log message",

"origin": "linux-apache2"

},

"hash": "HASH_OF_THE_CURRENT_LOG",

"previous_hash": "HASH_OF_THE_PREVIOUS_LOG",

"iteration": 11,

"timestamp_ms": 123456789

}

},

"el_error": {

"reason": "field [string_field] of type [java.lang.Integer] cannot be cast to [java.lang.String]",

"type": "illegal_argument_exception"

}

}

Example Scenarios¶

As a running example, let’s imagine sending the following authentication event to El Proxy:

{

"host": {

"name": "myhost.wp.lan",

"ip": "172.17.0.2"

},

"event": {

"category": "authentication"

}

}

To help you understand how the El Proxy architecture works together with Logstash and Elasticsearch, please have a look at the following scenarios.

In each scenario, the event is sent from Logstash to Elasticsearch through El Proxy.

Scenario 1¶

In our first scenario, no particular error happens during the signing process, so El Proxy signs the event, adds a new block to an existing blockchain, or creates a new chain from scratch if needed, and indexes the resulting document in a dedicated Elasticsearch index.

This is the most basic scenario, please refer to How the El Proxy works for additional details.

Scenario 2¶

As in the previous example, El Proxy signs the event, adds it to the blockchain, and indexes it in Elasticsearch.

Logstash, however, goes down before getting notified by El Proxy about the success of the whole signing/adding/indexing operation. Logstash, then, can not acknowledge the correct delivery of the event, even though El Proxy has already successfully indexed the event.

Logstash, in the meanwhile, is restarted successfully and it sends the same event to El Proxy again. El Proxy goes through the signing/adding/indexing operation for a second time, creating a duplicated event in Elasticsearch, but keeping a coherent blockchain.

Scenario 3¶

In this scenario, El Proxy is down while Logstash is sending events to it and therefore the event cannot be signed, added to the blockchain, and indexed.

In this case, Logstash tries to send the event to El Proxy until succeeding. If also Logstash is restarted before being able to successfully send the event to El Proxy, no event loss is experienced since events are disk persisted. As soon as Logstash is up and running again, it will send the pending event to El Proxy. Differently from scenario 2, this will not cause any event duplication in Elasticsearch.

Scenario 4¶

In this scenario, Logstash, instead of sending the example event to El Proxy, sends an event with a field that does not match the Elasticsearch mapping definition of the index in which the resulting document will be stored.

In the running example, the host field is mapped as an object (as you can see in the code snipped

reported in the introduction). Logstash, however, has received an event in which the host field

appears as a string:

{

"host": "myhost.wp.lan",

"event": {

"category": "authentication"

}

}

El Proxy signs the event, adds it to the blockchain, and tries to index it in Elasticsearch.

Elasticsearch, however, refuses to index the document, returning an error to El Proxy.

El Proxy then removes all event fields that are not specified in the configuration file

elastic_blockchain_proxy_fields.toml for being signed and tries to reindex the event again.

At this point we can have different outcomes:

the host field is not included in the signature:

the field is removed from the event, fixing the mapping definition issue, and the resulting document is then successfully indexed

the host field must be included in the signature:

the mapping definition issue still exists, then the event is again rejected by Elasticsearch

the event is then put in the Dead Letter Queue (DLQ) waiting for manual intervention

in case of failure writing the event in the DQL, El Proxy returns an error to Logstash, which tries to send the event again

Please refer to El Proxy Configuration for additional details.

Scenario 5¶

In this scenario, El Proxy signs the event and adds it to the blockchain. When trying to index the event in Elasticsearch, however, El Proxy gets some communication errors from Elasticsearch. For example, Elasticsearch is temporarily down, or the disk has less than 15% of free space, causing Elasticsearch to refuse to index.

Then, El Proxy retries to index the event with exponential back-off and:

if succeeding before hitting the maximum amount of retries, then the event is indexed

if the maximum amount of retries is hit without indexing, then an error is returned to Logstash, which tries to send the event again

The number of retries can be defined in the El Proxy configuration. Please refer to El Proxy Configuration for additional details.

Scenario 6 - Verification¶

In this scenario, we would like to verify the blockchain to ensure that it does not contain any

corruption. To achieve this, we run the elastic_blockchain_proxy verify command and we

obtain a report about the state of the blockchain.

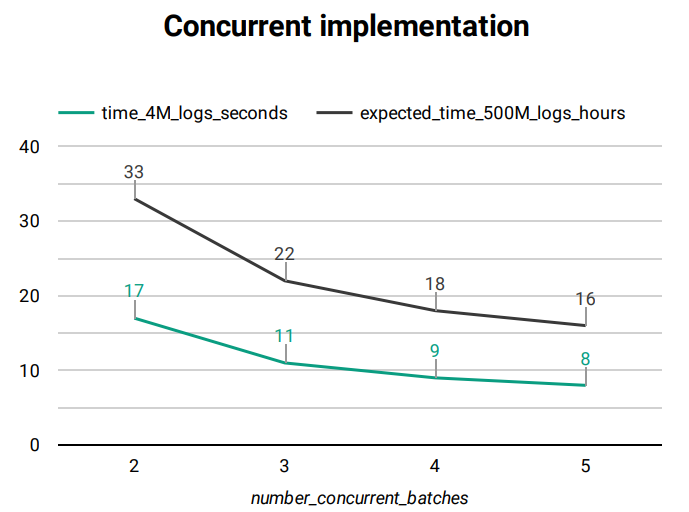

The verification process requires some time, mainly to gather the necessary data from Elasticsearch (queries). In fact, the entire blockchain needs to be queried to obtain the fields needed by the verification and this operation is performed in batches to comply with Elasticsearch query limits. Therefore, the number of logs present in the blockchain heavily impacts the time required for the verification: in case of hundred of millions of logs, hours of processing are needed.

Thereby, with the goal of speeding up the process, the verification command can verify more batches concurrently, with the default set to 2, as described in the El Proxy Configuration.

Warning

We discourage increasing the default number of concurrent batches, as this may cause a general slow down of Elasticsearch due to overloading.

In order to understand the performance of the verification process on a specific system, the

elastic_blockchain_proxy verify-cpu-bench can be used to verify a

customizable number of sample logs, using a specific number of concurrent processes. This helps in

understanding the hardware performance of our system with respect to the verification process.

Graph Fig. 203 outlines how the increase of concurrent batches during the verification affects the time taken by the process, on a typical system.

Fig. 207 El Proxy concurrency Graph¶

time_4M_logs_seconds: number of seconds taken by the verification of about 4 millions of logs

expected_time_500M_logs_hours: projection of the time taken by the verification of about 500 millions logs, in hours

Troubleshooting problems¶

Health Check logmanager-blockchain-creation-status is CRITICAL¶

If this Health Check is CRITICAL, it means that some logs could not be written successfully to Elasticsearch. The logs in question are then written to the Dead Letter Queue, where you can also find the reason for which they could not be indexed in Elasticsearch.

To resolve this issue visit section Handling Logs in Dead Letter Queue

Health Check logmanager-blockchain-keys is CRITICAL¶

This Health Check verifies that no backup of the Signature Key is present on the NetEye installation, to prevent any tampering on the blockchains from a compromised system.

Background and solution for this issue can be found in section Log Signature Flow - Generation of Signature Keys.

Health Check logmanager-blockchain-missing-elasticsearch-pipeline is CRITICAL¶

If a log was sent through Logstash with a non-existing pipeline, Elasticsearch will refuse to persist the log and return with an error. As seen in the section Error Handling the Health Check then queries Elasticsearch periodically for logs with that tag and if found, set the status to CRITICAL.

To resolve this, remove the tags from the document in Elasticsearch.

Agents¶

The Elastic Beat feature¶

NetEye can receive data from Beats installed on monitored hosts (i.e., on the clients).

NetEye currently supports Filebeat as a Beat agent and the Filebeat NetFlow Module for internal use. Additional information about the Beat feature can be found in the official documentation.

The remainder of this section shows first how NetEye is configured to receive data from Beats, i.e., as a receiving point for data sent by Beats, then explains how to install and configure Beats on clients, using SSL certificates to protect the communication.

Overview of NetEye’s Beat infrastructure setup¶

Beats are part of the SIEM module, which is an additional module, that can be installed following the directions in the NetEye Additional Components Installation section if you have the subscription.

Warning

Beats are intended as a replacement for Safed, even if they can coexist. However, since both Beat and Safed might process the same data, they would double the time and resources required, therefore it is suggested to activate only one of them.

The NetEye implementation allows Logstash to listen to incoming data on a secured TCP port (5044). Logstash then sends data into two flows:

to a file on disk, in the /neteye/shared/rsyslog/data folder, with the following name:

%{[agent][hostname]}/%{+YYYY}/%{+MM}/%{+dd}/[LS]%{[host][hostname]}.log. The format of the file is the same used forsafedfiles. This file is encrypted and its integrity validated, like it happens for Safed, and written to disk to preserve its inalterability.to Elastic, to be displayed into preconfigured Kibana dashboards.

Communication is SSL protected, and certificates need to be installed on clients together with the agents, see next section for more information.

Note

When the module is installed there is no data flow until agents are installed on the clients to be monitored. Indeed, deployment on NetEye consists only of the set up of the listening infrastructure.

The Beat feature is currently a CLI-only feature: no GUI is available and the configuration should be done by editing configuration files.