Advanced Topics¶

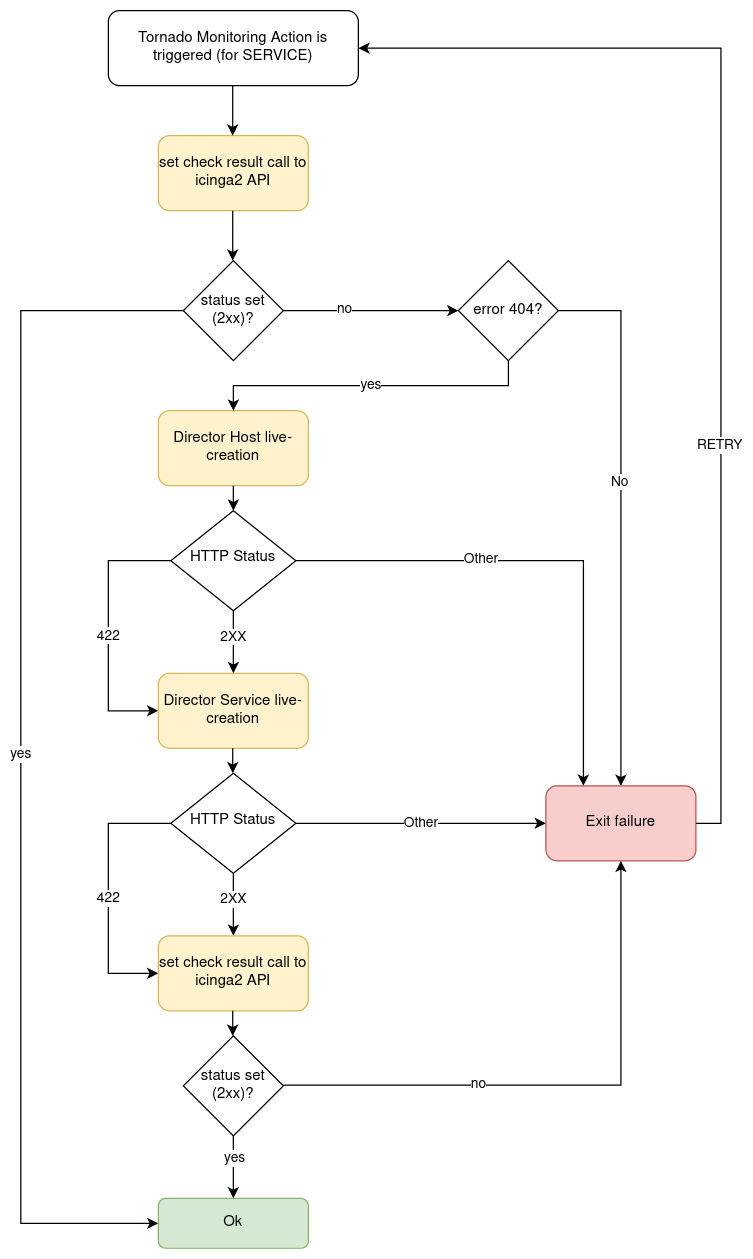

In this section we deal with some advanced topic, including passive monitoring, jobs, API, and Icinga Retention Policy.

Passive monitoring¶

When Tornado is installed, NetEye creates an Icinga Web 2 user

neteye-tornado and an associated Icinga Web 2 role

neteye_tornado_director_apis, which only gives access to the module

Director, with limited authorizations on the actions that Tornado can

perform.

Warning

These user and permission are required by the backend, for Tornado to call the Director API–and in particular for the authentication and authorization of the Tornado Director Executor to the APIs of the Icinga Director. Therefore neither the user, nor the associated role must be removed from the system.

In case you need it, for example to reconfigure the Tornado Director

Executor, the password for the user neteye-tornado is stored in the

file /root/.pwd_icingaweb2_neteye_tornado.

Processing Tree¶

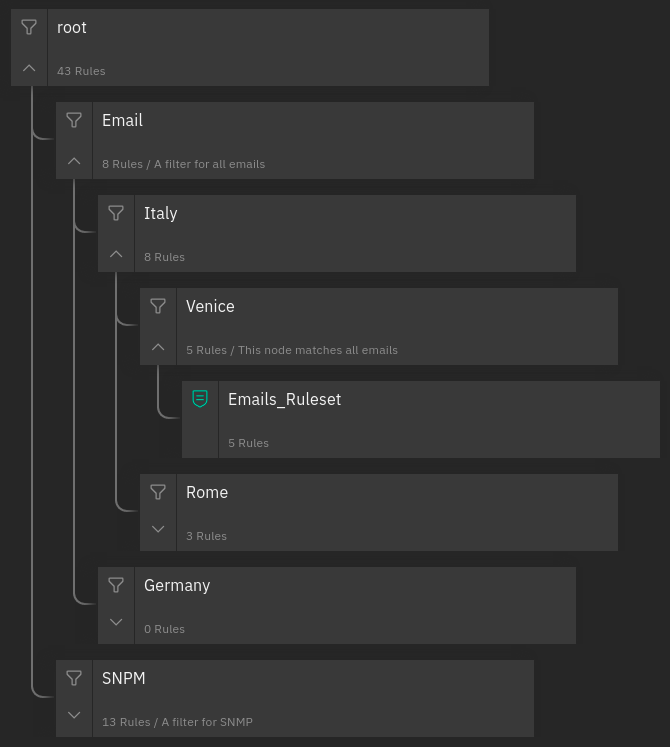

Within the NetEye interface you can view the Tornado rule configuration graphically instead of in a command shell. The Configuration Viewer (available when click on Tornado in the left side menu) shows the processing tree in a top-down format, allowing you to verify that the structure of the rules you have created is as you intended.

Fig. 106 A processing tree¶

Definitions¶

While a more complete description of all Tornado elements is available in the official documentation, they are summarized in enough detail here so that you can understanding this page without reading the full official documentation.

Filter: A node in the processing tree that contains (1) a filter definition and (2) a set of child nodes, each of which corresponds to a condition in the filter definition. You can use a Filter to create independent pipelines for different types of events, reducing the amount of time needed to process them.

Implicit Filter: If a filter is not present in a node, a default filter is created which forwards an event to ALL child nodes, rather than a particular one that matched a filter condition.

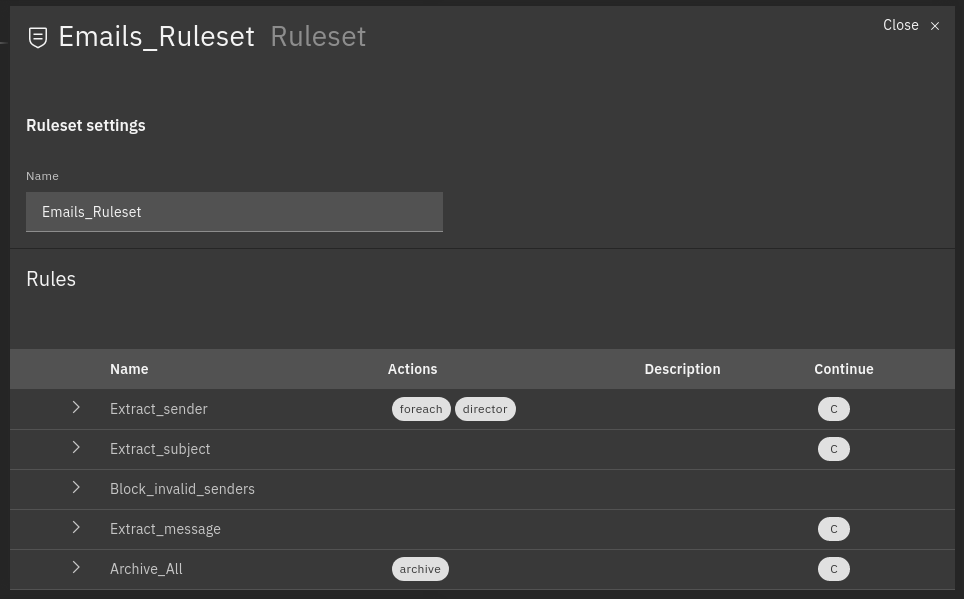

Ruleset: A leaf node that contains multiple rules to be matched against the event one by one once the filters in the parent nodes have let an event through.

Rule: A single rule within a Ruleset, which can be matched against an event.

Processing Tree: The entire set of filters and rules that creates the hierarchical structure where events are filtered and then matched against one or more rules.

Basic Configuration Information¶

The location of configuration files in the file system is pre-configured in NetEye. NetEye automatically starts Tornado as follows:

Reads the configuration from the /neteye/shared/tornado/conf/ directory

Starts the Tornado Engine

Searches for Filter and Rule definitions in /neteye/shared/tornado/conf/rules.d/

The structure of this last directory reflects the Processing Tree structure. Each subdirectory can contain either:

A Filter and a set of sub directories corresponding to the Filter’s children

A Ruleset

Each individual Rule or Filter to be included in the processing tree must be in its own file, in JSON format (Tornado will ignore all other file types). For instance, consider this directory structure:

/tornado/config/rules

|- node_0

| |- 0001_rule_one.json

| \- 0010_rule_two.json

|- node_1

| |- inner_node

| | \- 0001_rule_one.json

| \- filter_two.json

\- filter_one.json

In this example, the processing tree is organized as follows:

The root node is a filter named “filter_one”.

The filter filter_one* has two child nodes: node_0 and node_1.

node_0 is a Ruleset that contains two rules called rule_one and rule_two, with an implicit filter that forwards all incoming events to both of its child rules.

node_1 is a filter with a single child named “inner_node”. Its filter filter_two determines which incoming events are passed to its child node.

inner_node is a Ruleset with a single rule called rule_one.

Within a Ruleset, the alphanumeric order of the file names determines the execution order. The rule filename is composed of two parts separated by the first ‘*’ (underscore) symbol. The first part determines the rule execution order, and the second is the rule name. For example:

0001_rule_one.json -> 0001 determines the execution order, “rule_one” is the rule name

0010_rule_two.json -> 0010 determines the execution order, “rule_two” is the rule name

Rule names must be unique within their own Ruleset. There are no constraints on rule names in different Rulesets.

Similar to what happens for Rules, Filter names are also derived from the filenames. However, in this case, the entire filename corresponds to the Filter name.

In the example above, the “filter_one” node is the entry point of the processing tree. When an Event arrives, the Matcher will evaluate whether it matches the filter condition, and will pass the Event to one (or more) of the filter’s children. Otherwise it will ignore it.

A node’s children are processed independently. Thus node_0 and node_1 will be processed in isolation and each of them will be unaware of the existence and outcome of the other. This process logic is applied recursively to every node.

Multi Tenancy Roles Configuration¶

If your NetEye installation is tenant aware, roles associated to each user must be configured to limit their access only the processing trees they are allowed to.

In the NetEye roles (), add or edit the role

related to the tenant limited users. In the detail of the role configuration you can

find the tornadocarbon module section. You can set the tenant ID in the

tornadocarbon/tenant_id restriction.

Hint

You can find the list of available Tenant IDs by reading the directory

names in /etc/neteye-satellites.d/. You can use this command:

# basename -a $(ls -d /etc/neteye-satellite.d/*/)

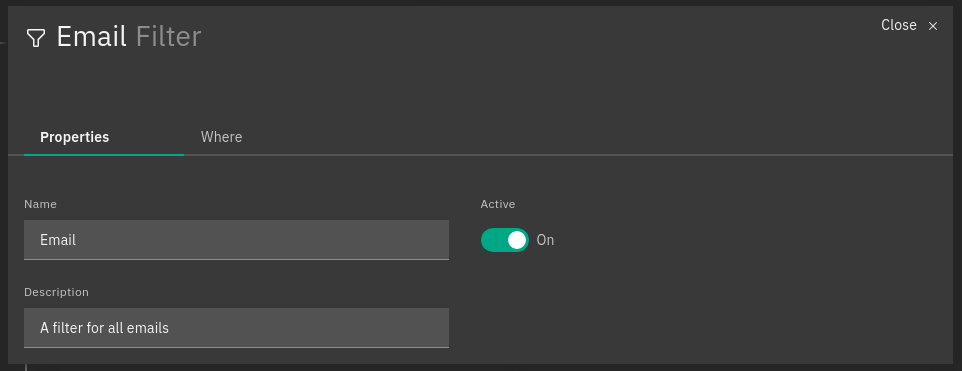

Structure of a Filter¶

A Filter contains these properties, defined in its JSON file:

Name: The filter name

Active: A boolean value; if false, the filter’s children will be ignored

Description: A string value providing a high-level description of the filter

Where tab: An operator that, when applied to an event, returns true or false. The result determines how an Event will be processed by the filter’s inner nodes.

When the configuration is read from the file system, the filter name is automatically inferred from the filename by removing its ‘.json’ extension. It can be composed only of letters, numbers and the “_” (underscore) character.

Fig. 107 A sample filter¶

Interface Overview¶

In today’s world, the UX has become a key point in successful IT products and NetEye wants it to become one of its strongest features, providing a continuously improved GUI to support the users’s daily activities.

For this purpose, NetEye provides a totally redesigned, modern looking and attractive GUI for Tornado, based on solid design and usability guidelines defined by the Carbon Design System

While the new GUI is developed to completely replace the current UI, it is currently in preview and only some features are supported such as processing tree visualization, event-driven testing and multi-tenancy.

The Graphical User Interface allows you to explore the current configuration of your Tornado Instance. The GUI has two main views. The first one is the Processing Tree View; this one allows you to examine the entire configuration processing tree of Tornado and to modify the configuration–please refer to the next section for more information. The second view, the Ruleset View, can be reached by clicking on whatever node is displayed in the Processing Tree View; from here, you can access the details of each Rule, send Events to Tornado, inspect the outcome of the Event execution, and modify the rules. In this view the information is organized into a table where each row represents a specific Rule.

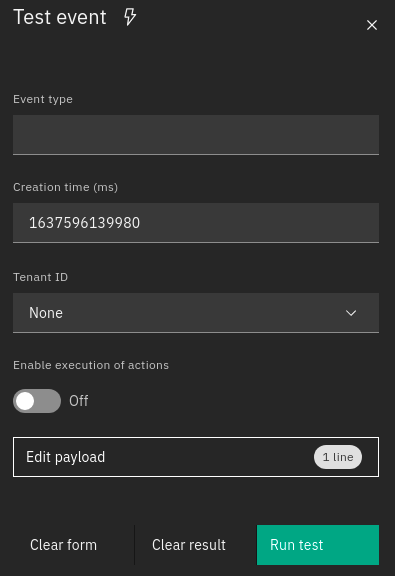

In the Ruleset View, an Event Test panel is available to send simulated Events. These Events, can be created through a dedicated form and are composed by the following four fields:

Event type: the type of the Event, such as trap, sms, email, etc.

Creation time: the Event timestamp defined as an epoch in milliseconds

Tenant ID: The tenant ID that will be added to the event. Fill this field if there are filters or conditions related to the tenant ID, otherwise just leave it empty.

Enable execution of actions: whether the actions of matching rules have to be executed or skipped

Payload: the event payload in JSON format

Fig. 109 Test window¶

When a test is executed by clicking the “Run Test” button, the linked Event is sent to Tornado and the outcome of the operation will be reported in the rule table.

At this point, a rule can be in one of the following states:

matched: If a rule matched the Eventstopped: If a rule matched the Event and then stopped the execution flow. This happens if thecontinueflag of the rule is set to falsepartially matched: If the where condition of the Rule was matched but it was not possible to process the required extracted variablesnot matched: If the Rule did not match the Event

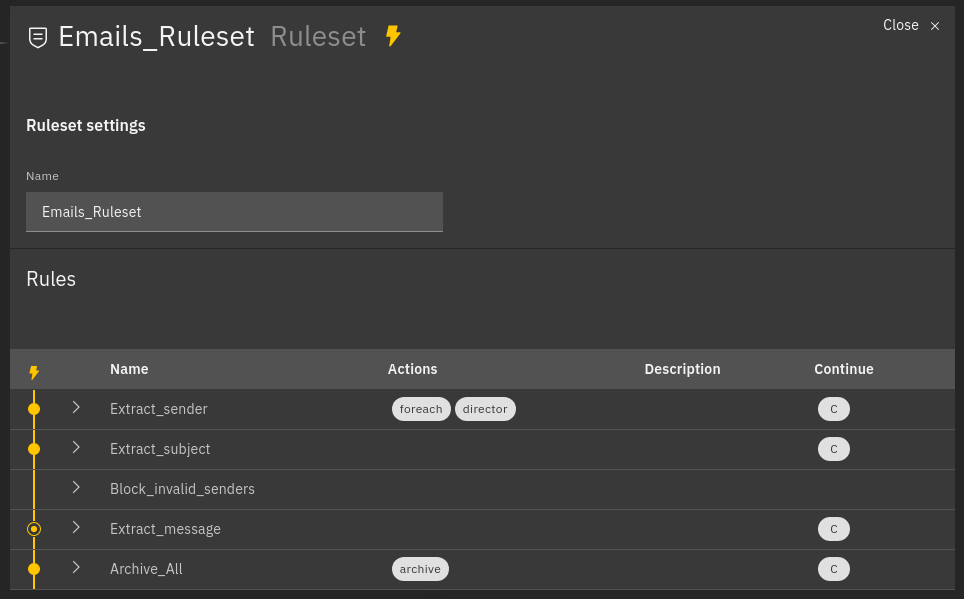

Fig. 110 Example of processed rules¶

Matched rules: Extract_sender, Extract_subject, Archive_all

Partially matched: Extract_message

Not matched: Block_invalid_senders

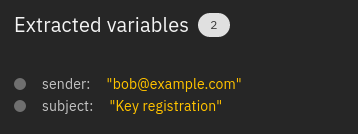

For each rule in the table, the extracted variables and the generated Action payloads are shown. In addition, all these extracted variables are also shown in the Event Test form.

Fig. 111 Sample of extracted variables¶

Two other buttons are visible, one for cleaning all the fields of the form and one for cleaning the outcome of the test.

Tornado Processing Tree Editor¶

The Tornado GUI provides an edit mode that allows to modify the configuration of the Tornado rules’ processing tree directly from NetEye’s front-end. Rules must be written in JSON and the editor features a validator, that helps you checking that the rule is syntactically correct. If you are not acquainted with JSON, you can check one of the following tutorials:

Two important principles have been used for the development of the edit mode and must be understood and taken into account when modifying Tornado’s configuration, especially because these differ from other modules in Icinga Director:

Implicit Lock Mode. Only one user at a time can modify the processing tree configuration. This prevents multiple users from changing the configuration simultaneously, which might lead to unwanted results and possibly to Tornado not working correctly due to incomplete or wrong configuration. When a user is editing the configuration, the actual, running configuration is left untouched: it continues to be operative and accepts incoming data to be processed.

Edit Mode. When starting to modify the configuration, Tornado will continue to work with the existing configuration–thanks to the implicit lock mode, while the new changes are saved in a separate draft configuration. The new configuration then must be deployed to become operative.

Note

If the user logs out without deploying the draft, the next

user that will log in–and start modifying tornado’s

configuration–will have different possibilities: to check all the

changes present in the draft by clicking on the Show Changes

button and to continue editing and then deploying the draft, or to

discard completely the existent draft and star editing a new

one. Once the user deployed the changes, the action will be

recorded in the Auditlog module (System > Auditlog). Here a

detailed diff of what has been changed, together with the user who

deployed those changes and the timestamp is displayed.

This mode has other positive side effects: one does not need to complete the changes in one session, but can stop and then continue at a later point; another user can pick up the draft and complete it; in case of a disaster (like e.g., the abrupt end of the HTTPS connection to the GUI) it is possible to resume the draft from the point where it was left.

Warning

Only one draft at a time is allowed; that is, editing of multiple draft is not supported!

When a user enters the edit mode, a new draft is created on the fly if none is present, which will be an exact copy of the running Tornado configuration. If not present in the draft, a root node of type Filter will be automatically added to the draft.

To check for the correctness of a Draft, without impacting the deployed configuration, it is possible to open the test window also while in Edit Mode. The event will be processed using the Draft and the result will be displayed, while keeping the existing configuration running.

In order to modify the processing tree, you can add nodes to each level of the processing tree, as well as inspecting and modifying each single rule. Moreover, from the main view it is also possible to add new filters or rulesets to the processing tree by clicking on the buttons on the right-hand side of the GUI.

In more details, the processing tree is shown in the main area of the GUI. The top filter node (nodes are containers of rules, please check the Definition in previous section for details) is the root one and therefore the first to be processed. Additional levels below contain further blocks with additional nodes, to allow for multiple processing of the events. Depending on the Edit mode being active or not, different interactions are possible with the processing tree.

When the Edit mode is disabled, the elements composing the Processing tree (Filters and rulesets) are shown in hierarchy. When clicking on a filter, its children–which can be other filters or rulesets–are shown; while a click on a ruleset will open the Rule Editor, to allow editing of the rules–refer to the next section for more information.

When clicking on Edit mode, it is possible to add new blocks in the processing tree or move them in other level. Using the buttons on the right-hand side of the Editor, new rulesets or filters can be added and placed within the hierarchy. For each node. it is possible to define:

a name and a description

its place in the hierarchy, by providing the name of the parent node

For a filter, these two more options are available.

whether it is active or not

the filter that should match the event. Syntax for the filter is JSON-based, and examples can be found in the various How-tos present in the tornado section of the User Guide.

Moreover, in Edit mode, in each box tree dots appear that when clicked, will open a small menu with two or three icons at the bottom of the box: Option are to edit or delete the ruleset or the filter, with the additional option, for ruleset only, to list rules:a click on the icon will open the rule GUI for editing the single rule of the ruleset.

Tornado Rule Editor¶

The tornado Rule Editor allows to manage the single rules that are in a ruleset. On top of the window, all the parents of the current ruleset are shown, to allow you to quickly check on which leaf of the processing tree the rules shown are located. Like in the processing tree editor, a JSON validator assists you in checking that the syntax of the rules is correct.

In the main area, all the defined rules are shown, together with a number of information about them: name, action, and status (enabled or not).

Like in the Processing Tree Editor, available options differ depending whether the Edit mode is active or not:

When the Edit mode is not active, it is possible to click on the

Open test window button on the top right-hand side of the window to

check which events the current rule selection would match.

With active Edit mode, the Open test window button is disabled,

but new rules can be added, modified, or deleted; each rule can also can

be moved along the list with a simple drag and drop.

Import and Export Configuration¶

The Tornado GUI provides multiple ways to import and export the whole configuration or just a subset of it.

Export Configuration¶

You have three possibilities to export Tornado configuration or part of it:

entire configuration: select the root node from the Processing Tree View and click on the export button to download the entire configuration

a node (either a ruleset or a filter): select the node from the Processing Tree View and click on the export button to download the node and its sub-nodes

a single rule: navigate to the rules table, select a rule, and click on the export button

Hint

You can backup and download Tornado configuration by exporting the entire configuration.

Import Configuration¶

You can use the import feature to upload to NetEye a previously downloaded configuration, new custom rules, or even the configuration from another NetEye instance.

When clicking on the import button a popup will appear with the following fields:

Node File: the file containing the configuration

Note

When importing a single rule the field will be labeled as Rule File.

Replace whole configuration?: If selected, the imported configuration will replace the root node and all of its sub-nodes.

Hint

You can restore a previous Tornado configuration by selecting this option.

Parent Node: The parent node where to add the imported configuration, by default it is set to the currently selected node.

Note

When a node or a rule with the same name of an already existing one is imported, the name of the new node/rule will be suffixed with _imported.

JMESPath Collector¶

This is a Collector that receives an input in JSON format and allows the creation of Events using the JMESPath JSON query language.

Email Collector¶

The Email Collector receives a MIME email message as input, parses it, and produces a Tornado Event.

How It Works¶

When the Email Collector receives a valid MIME email message as input, it parses it and produces a Tornado Event with the extracted data.

For example, given the following input:

Subject: This is a test email

Content-Type: multipart/alternative; boundary=foobar

Date: Sun, 02 Oct 2016 07:06:22 -0700 (PDT)

--foobar

Content-Type: text/plain; charset=utf-8

Content-Transfer-Encoding: quoted-printable

This is the plaintext version, in utf-8. Proof by Euro: =E2=82=AC

--foobar

Content-Type: text/html

Content-Transfer-Encoding: base64

PGh0bWw+PGJvZHk+VGhpcyBpcyB0aGUgPGI+SFRNTDwvYj4gdmVyc2lvbiwgaW4g

dXMtYXNjaWkuIFByb29mIGJ5IEV1cm86ICZldXJvOzwvYm9keT48L2h0bWw+Cg==

--foobar--

it will generate this Event:

{

"type": "email",

"created_ms": 1554130814854,

"payload": {

"date": 1475417182,

"subject": "This is a test email",

"to": "",

"from": "",

"cc": "",

"body": "This is the plaintext version, in utf-8. Proof by Euro: €",

"attachments": []

}

}

If there are attachments, then attachments that are text files will be in plain text, otherwise they will be encoded in base64.

For example, passing this email with attachments:

From: "Francesco" <francesco@example.com>

Subject: Test for Mail collector - with attachments

To: "Benjamin" <benjamin@example.com>,

francesco <francesco@example.com>

Cc: thomas@example.com, francesco@example.com

Date: Sun, 02 Oct 2016 07:06:22 -0700 (PDT)

MIME-Version: 1.0

Content-Type: multipart/mixed;

boundary="------------E5401F4DD68F2F7A872C2A83"

Content-Language: en-US

This is a multi-part message in MIME format.

--------------E5401F4DD68F2F7A872C2A83

Content-Type: text/html; charset=utf-8

Content-Transfer-Encoding: 7bit

<html>Test for Mail collector with attachments</html>

--------------E5401F4DD68F2F7A872C2A83

Content-Type: application/pdf;

name="sample.pdf"

Content-Transfer-Encoding: base64

Content-Disposition: attachment;

filename="sample.pdf"

JVBERi0xLjMNCiXi48/TDQoNCjEgMCBvYmoNCjw8DQovVHlwZSAvQ2F0YWxvZw0KT0YNCg==

--------------E5401F4DD68F2F7A872C2A83

Content-Type: text/plain; charset=UTF-8;

name="sample.txt"

Content-Transfer-Encoding: base64

Content-Disposition: attachment;

filename="sample.txt"

dHh0IGZpbGUgY29udGV4dCBmb3IgZW1haWwgY29sbGVjdG9yCjEyMzQ1Njc4OTA5ODc2NTQz

MjEK

--------------E5401F4DD68F2F7A872C2A83--

will generate this Event:

{

"type": "email",

"created_ms": 1554130814854,

"payload": {

"date": 1475417182,

"subject": "Test for Mail collector - with attachments",

"to": "\"Benjamin\" <benjamin@example.com>, francesco <francesco@example.com>",

"from": "\"Francesco\" <francesco@example.com>",

"cc": "thomas@example.com, francesco@example.com",

"body": "<html>Test for Mail collector with attachments</html>",

"attachments": [

{

"filename": "sample.pdf",

"mime_type": "application/pdf",

"encoding": "base64",

"content": "JVBERi0xLjMNCiXi48/TDQoNCjEgMCBvYmoNCjw8DQovVHlwZSAvQ2F0YWxvZw0KT0YNCg=="

},

{

"filename": "sample.txt",

"mime_type": "text/plain",

"encoding": "plaintext",

"content": "txt file context for email collector\n1234567890987654321\n"

}

]

}

}

Within the Tornado Event, the filename and mime_type properties of each attachment are the values extracted from the incoming email.

Instead, the encoding property refers to how the content is encoded in the Event itself. It can be one of two types:

plaintext: The content is included in plain text

base64: The content is encoded in base64

Particular cases¶

The email collector follows these rules to generate the Tornado Event:

If more than one body is present in the email or its subparts, the first valid body found is used, while the others will be ignored

Content Dispositions different from Inline and Attachment are ignored

Content Dispositions of type Inline are processed only if the content type is text/*

The email subparts are not scanned recursively, thus only the subparts at the root level are evaluated

Tornado Email Collector (Executable)¶

The Email Collector Executable binary is an executable that generates Tornado Events from MIME email inputs.

How It Works¶

The Email Collector Executable is built on actix.

On startup, it creates a UDS socket where qit listens for incoming email messages. Each email published on the socket is processed by the embedded Email Collector to produce Tornado Events which are, finally, forwarded to the Tornado Engine’s TCP address.

The UDS socket is created with the same user and group as the tornado_email_collector process, with permissions set to 770 (read, write and execute for both the user and the group).

Each client that needs to write an email message to the socket should close the connection as soon as it completes its action. In fact, the Email Collector Executable will not even start processing that email until it receives an EOF signal. Only one email per connection is allowed.

Procmail Example

This client behavior can be obtained, for instance, by using procmail with the following configuration:

## .procmailrc file

MAILDIR=$HOME/Mail # You should make sure this exists

LOGFILE=$MAILDIR/procmail.log

# This is where we ask procmail to write to our UDS socket.

SHELL=/bin/sh

:0

| /usr/bin/socat - /var/run/tornado_email_collector/email.sock 2>&1

A precondition for procmail to work is that the mail server in use must be properly configured to notify procmail whenever it receives new email.

For additional information about how incoming email is processed and the structure of the generated Event, check the documentation specific to the embedded Email Collector.

Note that the Email Collector will support any email client that works with the MIME format and UDS sockets.

Tornado Rsyslog Collector (executable)¶

The rsyslog Collector binary is an executable that generates Tornado Events from rsyslog inputs.

How It Works¶

This Collector is meant to be integrated with rsyslog’s own logging through the omprog module. Consequently, it is never started manually, but instead will be started, and managed, directly by rsyslog itself.

Here is an example rsyslog configuration template that pipes logs to the rsyslog-collector (the parameters are explained below):

module(load="omprog")

action(type="omprog"

binary="/path/to/tornado_rsyslog_collector --some-collector-options")

An example of a fully instantiated startup setup is:

module(load="omprog")

action(type="omprog"

binary="/path/to/rsyslog_collector --config-dir=/tornado-rsyslog-collector/config --tornado-event-socket-ip=tornado_server_ip --tornado-event-socket-port=4747")

Note that all parameters for the binary option must be on the same line. You will need to place this configuration in a file in your rsyslog directory, for instance:

/etc/rsyslog.d/tornado.conf

In this example the collector will:

Reads the configuration from the /tornado-rsyslog-collector/config directory

Write outgoing Events to the TCP socket at tornado_server_ip:4747

The Collector will need to be run in parallel with the Tornado engine before any events will be processed, for example:

/opt/tornado/bin/tornado --tornado-event-socket-ip=tornado_server_ip

Under this configuration, rsyslog is in charge of starting the collector when needed and piping the incoming logs to it. As the last stage, the Tornado Events generated by the collector are forwarded to the Tornado Engine’s TCP socket.

This integration strategy is the best option for supporting high performance given massive amounts of log data.

Because the collector expects the input to be in JSON format, rsyslog should be pre-configured to properly pipe its inputs in this form.

Tornado Webhook Collector (executable)¶

The Webhook Collector is a standalone HTTP server that listens for REST calls from a generic webhook, generates Tornado Events from the webhook JSON body, and sends them to the Tornado Engine.

How It Works¶

The webhook collector executable is an HTTP server built on actix-web.

On startup, it creates a dedicated REST endpoint for each configured webhook. Calls received by an endpoint are processed by the embedded JMESPath Collector that uses them to produce Tornado Events. In the final step, the Events are forwarded to the Tornado Engine through the configured connection type.

For each webhook, you must provide three values in order to successfully create an endpoint:

id: The webhook identifier. This will determine the path of the endpoint; it must be unique per webhook.

token: A security token that the webhook issuer has to include in the URL as part of the query string (see the example at the bottom of this page for details). If the token provided by the issuer is missing or does not match the one owned by the collector, then the call will be rejected and an HTTP 401 code (UNAUTHORIZED) will be returned.

collector_config: The transformation logic that converts a webhook JSON object into a Tornado Event. It consists of a JMESPath collector configuration as described in its specific documentation.

Tornado Nats JSON Collector (executable)¶

The Nats JSON Collector is a standalone collector that listens for JSON messages on Nats topics, generates Tornado Events, and sends them to the Tornado Engine.

How It Works¶

The Nats JSON collector executable is built on actix.

On startup, it connects to a set of topics on a Nats server. Calls received are then processed by the embedded jmespath collector that uses them to produce Tornado Events. In the final step, the Events are forwarded to the Tornado Engine through the configured connection type.

For each topic, you must provide two values in order to successfully configure them:

nats_topics: A list of Nats topics to which the collector will subscribe.

collector_config: (Optional) The transformation logic that converts a JSON object received from Nats into a Tornado Event. It consists of a JMESPath collector configuration as described in its specific documentation.

Tornado Icinga2 Collector (executable)¶

The Icinga2 Collector subscribes to the Icinga2 API event streams, generates Tornado Events from the Icinga2 Events, and publishes them on the Tornado Engine TCP address.

How It Works¶

The Icinga2 collector executable is built on actix.

On startup, it connects to an existing Icinga2 Server API and subscribes to user defined Event Streams. Each Icinga2 Event published on the stream, is processed by the embedded jmespath collector that uses them to produce Tornado Events which are, finally, forwarded to the Tornado Engine’s TCP address.

More than one stream subscription can be defined. For each stream, you must provide two values in order to successfully create a subscription:

stream: the stream configuration composed of:

types: An array of Icinga2 Event types;

queue: A unique queue name used by Icinga2 to identify the stream;

filter: An optional Event Stream filter. Additional information about the filter can be found in the official documentation.

collector_config: The transformation logic that converts an Icinga2 Event into a Tornado Event. It consists of a JMESPath collector configuration as described in its specific documentation.

Note

Based on the Icinga2 Event Streams documentation, multiple HTTP clients can use the same queue name as long as they use the same event types and filter.

SNMP Trap Daemon Collectors¶

The _snmptrapd_collector_s of this package are embedded Perl trap handlers for Net-SNMP’s snmptrapd. When registered as a subroutine in the Net-SNMP snmptrapd process, they receives snmptrap-specific inputs, transforms them into Tornado Events, and forwards them to the Tornado Engine.

There are two collector implementations, the first one sends Events directly to the Tornado TCP socket and the second one forwards them to a NATS server.

The implementations rely on the Perl NetSNMP::TrapReceiver package. You can refer to its documentation for generic configuration examples and usage advice.

How They Work¶

The _snmptrapd_collector_s receive snmptrapd messages, parse them, generate Tornado Events and, finally, sends them to Tornado using their specific communication channel.

The received messages are kept in an in-memory non-persistent buffer

that makes the application resilient to crashes or temporary

unavailability of the communication channel. When the connection to the

channel is restored, all messages in the buffer will be sent. When the

buffer is full, the collectors will start discarding old messages. The

buffer max size is set to 10000 messages.

Consider a snmptrapd message that contains the following information:

PDU INFO:

version 1

errorstatus 0

community public

receivedfrom UDP: [127.0.1.1]:41543->[127.0.2.2]:162

transactionid 1

errorindex 0

messageid 0

requestid 414568963

notificationtype TRAP

VARBINDS:

iso.3.6.1.2.1.1.3.0 type=67 value=Timeticks: (1166403) 3:14:24.03

iso.3.6.1.6.3.1.1.4.1.0 type=6 value=OID: iso.3.6.1.4.1.8072.2.3.0.1

iso.3.6.1.4.1.8072.2.3.2.1 type=2 value=INTEGER: 123456

The collector will produce this Tornado Event:

{

"type":"snmptrapd",

"created_ms":"1553765890000",

"payload":{

"protocol":"UDP",

"src_ip":"127.0.1.1",

"src_port":"41543",

"dest_ip":"127.0.2.2",

"PDUInfo":{

"version":"1",

"errorstatus":"0",

"community":"public",

"receivedfrom":"UDP: [127.0.1.1]:41543->[127.0.2.2]:162",

"transactionid":"1",

"errorindex":"0",

"messageid":"0",

"requestid":"414568963",

"notificationtype":"TRAP"

},

"oids":{

"iso.3.6.1.2.1.1.3.0":"67",

"iso.3.6.1.6.3.1.1.4.1.0":"6",

"iso.3.6.1.4.1.8072.2.3.2.1":"2"

}

}

}

The structure of the generated Event is not configurable.

JMESPath Collector Configuration¶

The Collector configuration is composed of two named values:

event_type: Identifies the type of Event, and can be a String or a JMESPath expression (see below).

payload: A Map<String, ValueProcessor> with event-specific data.

and here the payload ValueProcessor can be one of:

A null value

A string

A bool value (i.e., true or false)

A number

An array of values

A map of type Map<String, ValueProcessor>

A JMESPath expression : A valid JMESPath expression delimited by the ‘${‘ and ‘}’ characters.

The Collector configuration defines the structure of the Event produced. The configuration’s event_type property will define the type of Event, while the Event’s payload will have the same structure as the configuration’s payload.

How it Works

The JMESPath expressions of the configuration will be applied to incoming inputs, and the results will be included in the Event produced. All other ValueProcessors, instead, are copied without modification.

For example, consider the following configuration:

{

"event_type": "webhook",

"payload": {

"name" : "${reference.authors[0]}",

"from": "jmespath-collector",

"active": true

}

}

The value ${reference.authors[0]} is a JMESPath expression, delimited

by ${ and }, and whose value depends on the incoming input.

Thus if this input is received:

{

"date": "today",

"reference": {

"authors" : [

"Francesco",

"Thomas"

]

}

}

then the Collector will produce this Event:

{

"event_type": "webhook",

"payload": {

"name" : "Francesco",

"from": "jmespath-collector",

"active": true

}

}

Runtime behavior

When the JMESPath expression returns an array or a map, the entire object will be inserted as-is into the Event.

However, if a JMESPath expression does not return a valid result, then no Event is created, and an error is produced.

Email Collector Configuration¶

The executable configuration is based partially on configuration files, and partially on command line parameters.

The available startup parameters are:

config-dir: The filesystem folder from which the collector configuration is read. The default path is /etc/tornado_email_collector/.

In addition to these parameters, the following configuration entries are available in the file ‘config-dir’/email_collector.toml:

logger:

level: The Logger level; valid values are trace, debug, info, warn, and error.

stdout: Determines whether the Logger should print to standard output. Valid values are

trueandfalse.file_output_path: A file path in the file system; if provided, the Logger will append any output to it.

email_collector:

tornado_event_socket_ip: The IP address where outgoing events will be written. This should be the address where the Tornado Engine listens for incoming events. If present, this value overrides what specified by the

tornado_connection_channelentry. This entry is deprecated and will be removed in the next release of tornado. Please, use the ``tornado_connection_channel`` instead.tornado_event_socket_port: The port where outgoing events will be written. This should be the port where the Tornado Engine listens for incoming events. This entry is mandatory if

tornado_connection_channelis set toTCP. If present, this value overrides what specified by thetornado_connection_channelentry. This entry is deprecated and will be removed in the next release of tornado. Please, use the ``tornado_connection_channel`` instead.message_queue_size: The in-memory buffer size for Events. It makes the application resilient to Tornado Engine crashes or temporary unavailability. When Tornado restarts, all messages in the buffer will be sent. When the buffer is full, the collector will start discarding older messages first.

uds_path: The Unix Socket path on which the collector will listen for incoming emails.

tornado_connection_channel: The channel to send events to Tornado. It contains the set of entries required to configure a Nats or a TCP connection. Beware that this entry will be taken into account only if ``tornado_event_socket_ip`` and ``tornado_event_socket_port`` are not provided.

In case of connection using Nats, these entries are mandatory:

nats.client.addresses: The addresses of the NATS server.

nats.client.auth.type: The type of authentication used to authenticate to NATS (Optional. Valid values are

NoneandTls. Defaults toNoneif not provided).nats.client.auth.path_to_pkcs12_bundle: The path to a PKCS12 file that will be used for authenticating to NATS (Mandatory if

nats.client.auth.typeis set toTls).nats.client.auth.pkcs12_bundle_password: The password to decrypt the provided PKCS12 file (Mandatory if

nats.client.auth.typeis set toTls).nats.client.auth.path_to_root_certificate: The path to a root certificate (in

.pemformat) to trust in addition to system’s trust root. May be useful if the NATS server is not trusted by the system as default. (Optional, valid ifnats.client.auth.typeis set toTls).nats.subject: The NATS Subject where tornado will subscribe and listen for incoming events.

In case of connection using TCP, these entries are mandatory:

tcp_socket_ip: The IP address where outgoing events will be written. This should be the address where the Tornado Engine listens for incoming events.

tcp_socket_port: The port where outgoing events will be written. This should be the port where the Tornado Engine listens for incoming events.

More information about the logger configuration is available in the Common Logger documentation.

The default config-dir value can be customized at build time by specifying the environment variable TORNADO_EMAIL_COLLECTOR_CONFIG_DIR_DEFAULT. For example, this will build an executable that uses /my/custom/path as the default value:

TORNADO_EMAIL_COLLECTOR_CONFIG_DIR_DEFAULT=/my/custom/path cargo

build

An example of a full startup command is:

./tornado_email_collector \

--config-dir=/tornado-email-collector/config \

In this example the Email Collector starts up and then reads the configuration from the /tornado-email-collector/config directory.

Tornado Rsyslog Collector Configuration¶

The executable configuration is based partially on configuration files, and partially on command line parameters.

The available startup parameters are:

config-dir: The filesystem folder from which the collector configuration is read. The default path is /etc/tornado_rsyslog_collector/.

In addition to these parameters, the following configuration entries are available in the file ‘config-dir’/rsyslog_collector.toml:

logger:

level: The Logger level; valid values are trace, debug, info, warn, and error.

stdout: Determines whether the Logger should print to standard output. Valid values are

trueandfalse.file_output_path: A file path in the file system; if provided, the Logger will append any output to it.

rsyslog_collector:

tornado_event_socket_ip: The IP address where outgoing events will be written. This should be the address where the Tornado Engine listens for incoming events. If present, this value overrides what specified by the

tornado_connection_channelentry. This entry is deprecated and will be removed in the next release of tornado. Please, use the ``tornado_connection_channel`` instead.tornado_event_socket_port: The port where outgoing events will be written. This should be the port where the Tornado Engine listens for incoming events. This entry is mandatory if

tornado_connection_channelis set toTCP. If present, this value overrides what specified by thetornado_connection_channelentry. This entry is deprecated and will be removed in the next release of tornado. Please, use the ``tornado_connection_channel`` instead.message_queue_size: The in-memory buffer size for Events. It makes the application resilient to Tornado Engine crashes or temporary unavailability. When Tornado restarts, all messages in the buffer will be sent. When the buffer is full, the collector will start discarding older messages first.

tornado_connection_channel: The channel to send events to Tornado. It contains the set of entries required to configure a Nats or a TCP connection. Beware that this entry will be taken into account only if ``tornado_event_socket_ip`` and ``tornado_event_socket_port`` are not provided.

In case of connection using Nats, these entries are mandatory:

nats.client.addresses: The addresses of the NATS server.

nats.client.auth.type: The type of authentication used to authenticate to NATS (Optional. Valid values are

NoneandTls. Defaults toNoneif not provided).nats.client.auth.path_to_pkcs12_bundle: The path to a PKCS12 file that will be used for authenticating to NATS (Mandatory if

nats.client.auth.typeis set toTls).nats.client.auth.pkcs12_bundle_password: The password to decrypt the provided PKCS12 file (Mandatory if

nats.client.auth.typeis set toTls).nats.client.auth.path_to_root_certificate: The path to a root certificate (in

.pemformat) to trust in addition to system’s trust root. May be useful if the NATS server is not trusted by the system as default. (Optional, valid ifnats.client.auth.typeis set toTls).nats.subject: The NATS Subject where tornado will subscribe and listen for incoming events.

In case of connection using TCP, these entries are mandatory:

tcp_socket_ip: The IP address where outgoing events will be written. This should be the address where the Tornado Engine listens for incoming events.

tcp_socket_port: The port where outgoing events will be written. This should be the port where the Tornado Engine listens for incoming events.

More information about the logger configuration is available in the Common Logger documentation.

The default config-dir value can be customized at build time by specifying the environment variable TORNADO_RSYSLOG_COLLECTOR_CONFIG_DIR_DEFAULT. For example, this will build an executable that uses /my/custom/path as the default value:

TORNADO_RSYSLOG_COLLECTOR_CONFIG_DIR_DEFAULT=/my/custom/path cargo build

Tornado Webhook Collector Configuration¶

The executable configuration is based partially on configuration files, and partially on command line parameters.

The available startup parameters are:

config-dir: The filesystem folder from which the collector configuration is read. The default path is /etc/tornado_webhook_collector/.

webhooks-dir: The folder where the Webhook configurations are saved in JSON format; this folder is relative to the

config_dir. The default value is /webhooks/.

In addition to these parameters, the following configuration entries are available in the file ‘config-dir’/webhook_collector.toml:

logger:

level: The Logger level; valid values are trace, debug, info, warn, and error.

stdout: Determines whether the Logger should print to standard output. Valid values are

trueandfalse.file_output_path: A file path in the file system; if provided, the Logger will append any output to it.

webhook_collector:

tornado_event_socket_ip: The IP address where outgoing events will be written. This should be the address where the Tornado Engine listens for incoming events. If present, this value overrides what specified by the

tornado_connection_channelentry. This entry is deprecated and will be removed in the next release of tornado. Please, use the ``tornado_connection_channel`` instead.tornado_event_socket_port: The port where outgoing events will be written. This should be the port where the Tornado Engine listens for incoming events. This entry is mandatory if

tornado_connection_channelis set toTCP. If present, this value overrides what specified by thetornado_connection_channelentry. This entry is deprecated and will be removed in the next release of tornado. Please, use the ``tornado_connection_channel`` instead.message_queue_size: The in-memory buffer size for Events. It makes the application resilient to errors or temporary unavailability of the Tornado connection channel. When the connection on the channel is restored, all messages in the buffer will be sent. When the buffer is full, the collector will start discarding older messages first.

server_bind_address: The IP to bind the HTTP server to.

server_port: The port to be used by the HTTP Server.

tornado_connection_channel: The channel to send events to Tornado. It contains the set of entries required to configure a Nats or a TCP connection. Beware that this entry will be taken into account only if ``tornado_event_socket_ip`` and ``tornado_event_socket_port`` are not provided.

In case of connection using Nats, these entries are mandatory:

nats.client.addresses: The addresses of the NATS server.

nats.client.auth.type: The type of authentication used to authenticate to NATS (Optional. Valid values are

NoneandTls. Defaults toNoneif not provided).nats.client.auth.path_to_pkcs12_bundle: The path to a PKCS12 file that will be used for authenticating to NATS (Mandatory if

nats.client.auth.typeis set toTls).nats.client.auth.pkcs12_bundle_password: The password to decrypt the provided PKCS12 file (Mandatory if

nats.client.auth.typeis set toTls).nats.client.auth.path_to_root_certificate: The path to a root certificate (in

.pemformat) to trust in addition to system’s trust root. May be useful if the NATS server is not trusted by the system as default. (Optional, valid ifnats.client.auth.typeis set toTls).nats.subject: The NATS Subject where tornado will subscribe and listen for incoming events.

In case of connection using TCP, these entries are mandatory:

tcp_socket_ip: The IP address where outgoing events will be written. This should be the address where the Tornado Engine listens for incoming events.

tcp_socket_port: The port where outgoing events will be written. This should be the port where the Tornado Engine listens for incoming events.

More information about the logger configuration can be found in the Common Logger documentation.

The default config-dir value can be customized at build time by specifying the environment variable TORNADO_WEBHOOK_COLLECTOR_CONFIG_DIR_DEFAULT. For example, this will build an executable that uses /my/custom/path as the default value:

TORNADO_WEBHOOK_COLLECTOR_CONFIG_DIR_DEFAULT=/my/custom/path cargo build

An example of a full startup command is:

./tornado_webhook_collector \

--config-dir=/tornado-webhook-collector/config

In this example the Webhook Collector starts up and then reads the configuration from the /tornado-webhook-collector/config directory.

Webhooks Configuration¶

As described before, the two startup parameters config-dir and webhooks-dir determine the path to the Webhook configurations, and each webhook is configured by providing id, token and collector_config.

As an example, consider how to configure a webhook for a repository hosted on Github.

If we start the application using the command line provided in the previous section, the webhook configuration files should be located in the /tornado-webhook-collector/config/webhooks directory. Each configuration is saved in a separate file in that directory in JSON format (the order shown in the directory is not necessarily the order in which the hooks are processed):

/tornado-webhook-collector/config/webhooks

|- github.json

|- bitbucket_first_repository.json

|- bitbucket_second_repository.json

|- ...

An example of valid content for a Webhook configuration JSON file is:

{

"id": "github_repository",

"token": "secret_token",

"collector_config": {

"event_type": "${commits[0].committer.name}",

"payload": {

"source": "github",

"ref": "${ref}",

"repository_name": "${repository.name}"

}

}

}

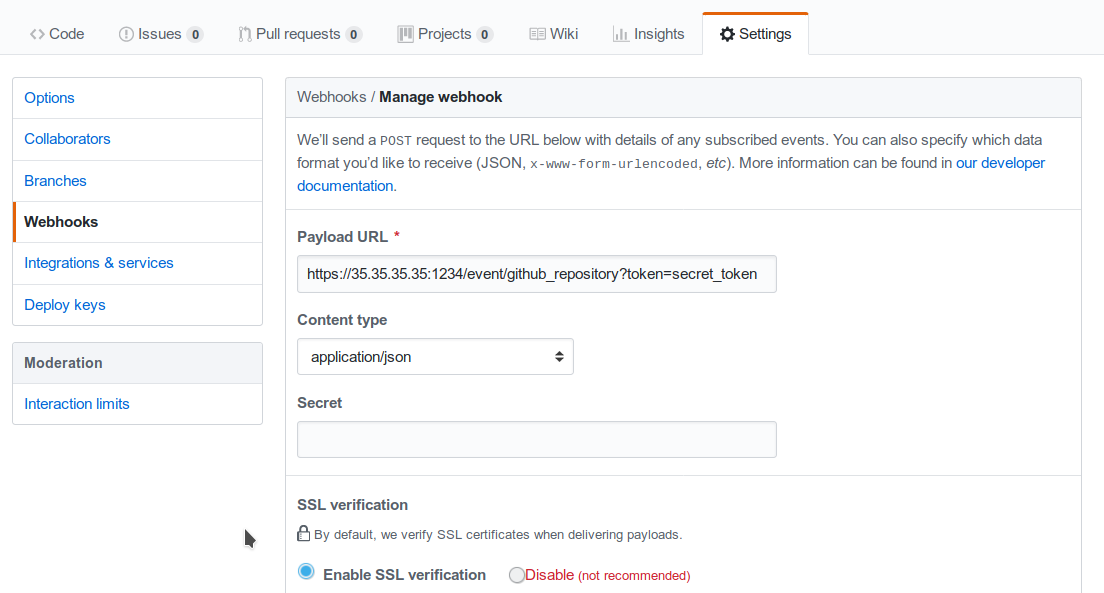

This configuration assumes that this endpoint has been created:

http(s)://collector_ip:collector_port/event/github_repository

However, the Github webhook issuer must pass the token at each call. Consequently, the actual URL to be called will have this structure:

http(s)://collector_ip:collector_port/event/github_repository?token=secret_token

Security warning: Since the security token is present in the query string, it is extremely important that the webhook collector is always deployed with HTTPS in production. Otherwise, the token will be sent unencrypted along with the entire URL.

Consequently, if the public IP of the collector is, for example, 35.35.35.35 and the server port is 1234, in Github, the webhook settings page should look like in Fig. 112.

Fig. 112 Configuring a GitHub webhook.¶

Finally, the collector_config configuration entry determines the content of the tornado Event associated with each webhook input.

So for example, if Github sends this JSON (only the relevant parts shown here):

{

"ref": "refs/heads/master",

"commits": [

{

"id": "33ad3a6df86748011ee8d5cef13d206322abc68e",

"committer": {

"name": "GitHub",

"email": "noreply@github.com",

"username": "web-flow"

}

}

],

"repository": {

"id": 123456789,

"name": "webhook-test"

}

}

then the resulting Event will be:

{

"type": "GitHub",

"created_ms": 1554130814854,

"payload": {

"source": "github",

"ref": "refs/heads/master",

"repository_name": "webhook-test"

}

}

The Event creation logic is handled internally by the JMESPath collector, a detailed description of which is available in its specific documentation.

Tornado Nats JSON Collector Configuration¶

The executable configuration is based partially on configuration files, and partially on command line parameters.

The available startup parameters are:

config-dir: The filesystem folder from which the collector configuration is read. The default path is /etc/tornado_nats_json_collector/.

topics-dir: The folder where the topic configurations are saved in JSON format; this folder is relative to the

config_dir. The default value is /topics/.

In addition to these parameters, the following configuration entries are available in the file ‘config-dir’/nats_json_collector.toml:

logger:

level: The Logger level; valid values are trace, debug, info, warn, and error.

stdout: Determines whether the Logger should print to standard output. Valid values are

trueandfalse.file_output_path: A file path in the file system; if provided, the Logger will append any output to it.

nats_json_collector:

message_queue_size: The in-memory buffer size for Events. It makes the application resilient to errors or temporary unavailability of the Tornado connection channel. When the connection on the channel is restored, all messages in the buffer will be sent. When the buffer is full, the collector will start discarding older messages first.

nats_client.addresses: The addresses of the NATS server.

nats_client.auth.type: The type of authentication used to authenticate to NATS (Optional. Valid values are

NoneandTls. Defaults toNoneif not provided).nats_client.auth.path_to_pkcs12_bundle: The path to a PKCS12 file that will be used for authenticating to NATS (Mandatory if

nats_client.auth.typeis set toTls).nats_client.auth.pkcs12_bundle_password: The password to decrypt the provided PKCS12 file (Mandatory if

nats_client.auth.typeis set toTls).nats_client.auth.path_to_root_certificate: The path to a root certificate (in

.pemformat) to trust in addition to system’s trust root. May be useful if the NATS server is not trusted by the system as default. (Optional, valid ifnats_client.auth.typeis set toTls).tornado_connection_channel: The channel to send events to Tornado. It contains the set of entries required to configure a Nats or a TCP connection.

In case of connection using Nats, these entries are mandatory:

nats_subject: The NATS Subject where tornado will subscribe and listen for incoming events.

In case of connection using TCP, these entries are mandatory:

tcp_socket_ip: The IP address where outgoing events will be written. This should be the address where the Tornado Engine listens for incoming events.

tcp_socket_port: The port where outgoing events will be written. This should be the port where the Tornado Engine listens for incoming events.

More information about the logger configurationis available in the Common Logger documentation.

The default config-dir value can be customized at build time by specifying the environment variable TORNADO_NATS_JSON_COLLECTOR_CONFIG_DIR_DEFAULT. For example, this will build an executable that uses /my/custom/path as the default value:

TORNADO_NATS_JSON_COLLECTOR_CONFIG_DIR_DEFAULT=/my/custom/path cargo build

An example of a full startup command is:

./tornado_nats_json_collector \

--config-dir=/tornado-nats-json-collector/config

In this example the Nats JSON Collector starts up and then reads the configuration from the /tornado-nats-json-collector/config directory.

Topics Configuration¶

As described before, the two startup parameters config-dir and topics-dir determine the path to the topic configurations, and each topic is configured by providing nats_topics and collector_config.

As an example, consider how to configure a “simple_test” topic.

If we start the application using the command line provided in the previous section, the topics configuration files should be located in the /tornado-nats-json-collector/config/topics directory. Each configuration is saved in a separate file in that directory in JSON format (the order shown in the directory is not necessarily the order in which the topics are processed):

/tornado-nats-json-collector/config/topics

|- simple_test.json

|- something_else.json

|- ...

An example of valid content for a Topic configuration JSON file is:

{

"nats_topics": ["simple_test_one", "simple_test_two"],

"collector_config": {

"event_type": "${content.type}",

"payload": {

"ref": "${content.ref}",

"repository_name": "${repository}"

}

}

}

With this configuration, two subscriptions are created to the Nats topics simple_test_one and simple_test_two. Messages received by those topics are processed using the collector_config that determines the content of the tornado Event associated with them.

It is important to note that, if a Nats topic name is used more than once, then the collector will perfom multiple subscriptions accordingly. This can happen if a topic name is duplicated into the nats_topics array or in multiple JSON files.

So for example, if this JSON message is received:

{

"content": {

"type": "content_type",

"ref": "refs/heads/master"

},

"repository": {

"id": 123456789,

"name": "webhook-test"

}

}

then the resulting Event will be:

{

"type": "content_type",

"created_ms": 1554130814854,

"payload": {

"ref": "refs/heads/master",

"repository": {

"id": 123456789,

"name": "webhook-test"

}

}

}

The Event creation logic is handled internally by the JMESPath collector, a detailed description of which is available in its specific documentation.

Default values

The collector_config section and all of its internal entries are optional. If not provided explicitly, the collector will use these predefined values:

When the collector_config.event_type is not provided, the name of the Nats topic that sent the message is used as Event type.

When the collector_config.payload is not provided, the entire source message is included in the payload of the generated Event with the key data.

Consequently, the simplest valid topic configuration contains only the nats_topics:

{

"nats_topics": ["subject_one", "subject_two"]

}

The above one is equivalent to:

{

"nats_topics": ["subject_one", "subject_two"],

"collector_config": {

"payload": {

"data": "${@}"

}

}

}

In this case the generated Tornado Events have type equals to the topic name and the whole source data in their payload.

Tornado Icinga2 Collector Configuration¶

The executable configuration is based partially on configuration files, and partially on command line parameters.

The available startup parameters are:

config-dir: The filesystem folder from which the collector configuration is read. The default path is /etc/tornado_icinga2_collector/.

streams_dir: The folder where the Stream configurations are saved in JSON format; this folder is relative to the

config_dir. The default value is /streams/.

In addition to these parameters, the following configuration entries are available in the file ‘config-dir’/icinga2_collector.toml:

logger:

level: The Logger level; valid values are trace, debug, info, warn, and error.

stdout: Determines whether the Logger should print to standard output. Valid values are

trueandfalse.file_output_path: A file path in the file system; if provided, the Logger will append any output to it.

icinga2_collector

tornado_event_socket_ip: The IP address where outgoing events will be written. This should be the address where the Tornado Engine listens for incoming events. If present, this value overrides what specified by the

tornado_connection_channelentry. This entry is deprecated and will be removed in the next release of tornado. Please, use the ``tornado_connection_channel`` instead.tornado_event_socket_port: The port where outgoing events will be written. This should be the port where the Tornado Engine listens for incoming events. This entry is mandatory if

tornado_connection_channelis set toTCP. If present, this value overrides what specified by thetornado_connection_channelentry. This entry is deprecated and will be removed in the next release of tornado. Please, use the ``tornado_connection_channel`` instead.message_queue_size: The in-memory buffer size for Events. It makes the application resilient to Tornado Engine crashes or temporary unavailability. When Tornado restarts, all messages in the buffer will be sent. When the buffer is full, the collector will start discarding older messages first.

connection

server_api_url: The complete URL of the Icinga2 Event Stream API.

username: The username used to connect to the Icinga2 APIs.

password: The password used to connect to the Icinga2 APIs.

disable_ssl_verification: A boolean value. If true, the client will not verify the Icinga2 SSL certificate.

sleep_ms_between_connection_attempts: In case of connection failure, the number of milliseconds to wait before a new connection attempt.

tornado_connection_channel: The channel to send events to Tornado. It contains the set of entries required to configure a Nats or a TCP connection. Beware that this entry will be taken into account only if ``tornado_event_socket_ip`` and ``tornado_event_socket_port`` are not provided.

In case of connection using Nats, these entries are mandatory:

nats.client.addresses: The addresses of the NATS server.

nats.client.auth.type: The type of authentication used to authenticate to NATS (Optional. Valid values are

NoneandTls. Defaults toNoneif not provided).nats.client.auth.path_to_pkcs12_bundle: The path to a PKCS12 file that will be used for authenticating to NATS (Mandatory if

nats.client.auth.typeis set toTls).nats.client.auth.pkcs12_bundle_password: The password to decrypt the provided PKCS12 file (Mandatory if

nats.client.auth.typeis set toTls).nats.client.auth.path_to_root_certificate: The path to a root certificate (in

.pemformat) to trust in addition to system’s trust root. May be useful if the NATS server is not trusted by the system as default. (Optional, valid ifnats.client.auth.typeis set toTls).nats.subject: The NATS Subject where tornado will subscribe and listen for incoming events.

In case of connection using TCP, these entries are mandatory:

tcp_socket_ip: The IP address where outgoing events will be written. This should be the address where the Tornado Engine listens for incoming events.

tcp_socket_port: The port where outgoing events will be written. This should be the port where the Tornado Engine listens for incoming events.

More information about the logger configuration is available in the Common Logger documentation.

The default config-dir value can be customized at build time by specifying the environment variable TORNADO_ICINGA2_COLLECTOR_CONFIG_DIR_DEFAULT. For example, this will build an executable that uses /my/custom/path as the default value:

TORNADO_ICINGA2_COLLECTOR_CONFIG_DIR_DEFAULT=/my/custom/path cargo

build

An example of a full startup command is:

./tornado_webhook_collector \

--config-dir=/tornado-icinga2-collector/config

In this example the Icinga2 Collector starts up and then reads the configuration from the /tornado-icinga2-collector/config directory.

Streams Configuration¶

As described before, the two startup parameters config-dir and streams-dir determine the path to the stream configurations.

For example, if we start the application using the command line provided in the previous section, the stream configuration files should be located in the /tornado-icinga2-collector/config/streams directory. Each configuration is saved in a separate file in that directory in JSON format:

/tornado-icinga2-collector/config/streams

|- 001_CheckResults.json

|- 002_Notifications.json

|- ...

The alphabetical ordering of the files has no impaact on the collector’s logic.

An example of valid content for a stream configuration JSON file is:

{

"stream": {

"types": ["CheckResult"],

"queue": "icinga2_CheckResult",

"filter": "event.check_result.exit_status==2"

},

"collector_config": {

"event_type": "icinga2_event",

"payload": {

"source": "icinga2",

"icinga2_event": "${@}"

}

}

}

This stream subscription will receive all Icinga2 Events of type ‘CheckResult’ with ‘exit_status’=2. It will then produce a Tornado Event with type ‘icinga2_event’ and the entire Icinga2 Event in the payload with key ‘icinga2_event’.

The Event creation logic is handled internally by the JMESPath collector, a detailed description of which is available in its specific documentation.

SNMPTrapd TCP Collector Configuration¶

Prerequisites

This collector has the following runtime requirements:

Perl 5.16 or greater

Perl packages required:

Cpanel::JSON::XS

NetSNMP::TrapReceiver

You can verify that the Perl packages are available with the command:

$ perl -e 'use Cpanel::JSON::XS;' && \

perl -e 'use NetSNMP::TrapReceiver;'

If no messages are displayed in the console, then everything is okay; otherwise, you will see error messages.

In case of missing dependencies, use your system’s package manager to install them.

For example, the required Perl packages can be installed on an Ubuntu system with:

$ sudo apt install libcpanel-json-xs-perl libsnmp-perl

Activation

This Collector is meant to be integrated with snmptrapd. To activate it, put the following line in your snmptrapd.conf file:

perl do "/path_to_the_script/snmptrapd_tcp_collector.pl";

Consequently, it is never started manually, but instead will be started, and managed, directly by snmptrapd itself.

At startup, if the collector is configured properly, you should see this entry either in the logs or in the daemon’s standard error output:

The TCP based snmptrapd_collector was loaded successfully.

Configuration options

The address of the Tornado Engine TCP instance to which the events are forwarded is configured with the following environment variables:

TORNADO_ADDR: the IP address of Tornado Engine. If not specified, it will use the default value 127.0.0.1

TORNADO_PORT: the port of the TCP socket of Tornado Engine. If not specified, it will use the default value 4747

SNMPTrapd NATS Collector Configuration¶

Prerequisites

This collector has the following runtime requirements:

Perl 5.16 or greater

Perl packages required:

Cpanel::JSON::XS

Net::NATS::Client

NetSNMP::TrapReceiver

You can verify that the Perl packages are available with the command:

$ perl -e 'use Cpanel::JSON::XS;' && \

perl -e 'use Net::NATS::Client;' && \

perl -e 'use NetSNMP::TrapReceiver;'

If no messages are displayed in the console, then everything is okay; otherwise, you will see error messages.

In case of missing dependencies, use your system’s package manager to install them.

Instructions for installing Net::NATS::Client are available at its

official repository

Activation

This Collector is meant to be integrated with snmptrapd. To activate it, put the following line in your snmptrapd.conf file:

perl do "/path_to_the_script/snmptrapd_collector.pl";

Consequently, it is never started manually, but instead will be started, and managed, directly by snmptrapd itself.

At startup, if the collector is configured properly, you should see this entry either in the logs or in the daemon’s standard error output:

The snmptrapd_collector for NATS was loaded successfully.

Configuration options

The information to connect to the NATS Server are provided by the following environment variables:

TORNADO_NATS_ADDR: the address of the NATS server. If not specified, it will use the default value 127.0.0.1:4222

TORNADO_NATS_SUBJECT: the NATS subject where the events are published. If not specified, it will use the default value tornado.events

TORNADO_NATS_SSL_CERT_PEM_FILE: The filesystem path of a PEM certificate. This entry is optional, when provided, the collector will use the certificate to connect to the NATS server

TORNADO_NATS_SSL_CERT_KEY: The filesystem path for the KEY of the PEM certificate provided by the TORNADO_NATS_SSL_CERT_PEM_FILE entry. This entry is mandatory if the TORNADO_NATS_SSL_CERT_PEM_FILE entry is provided

Tornado Engine CLI Commands and Configuration¶

The Tornado CLI has commands that allow you to use the functionality provided. Running the Tornado executable without any arguments returns a list of all available commands and global options that apply to every command.

Tornado commands:

__apm-tracing enable|disable__ : Enable or disable the APM priority logger output.

When used with enable, it:

enables the APM logger

disables the stdout logger output

sets logger level to info,tornado=debug

When used with disable, it:

disables the APM logger

enables the stdout logger output

sets logger level to value from the configuration file

check : Checks that the configuration is valid.

daemon : Starts the Tornado daemon.

help : Prints the general help page, or the specific help of the given command.

rules-upgrade : Checks the current configuration and, if available, upgrades the rules structure to the most recent one.

filter-create : Creates a Filter at the root level of the current configuration and of the open drafts.

Parameters:

name: The name of the Filter to be created.

json-definition: The JSON representation of the Filter.

In case a Node with the same name already exists at the root level of the configuration, the following will happen:

If the existing node is a Ruleset, it will be renamed to: <ruleset_name>_backup_<timestamp_in_milliseconds>.

If the existing node is a Filter having a different filter definition, it will be renamed to: <filter_name>_backup_<timestamp_in_milliseconds>.

If the existing node is a Filter having the same filter definition, nothing will be done.

Each CLI command provides its own help and usage information, you can

display using the help command.

For example, with this command you can show the help page and options of

daemon:

./tornado_engine help daemon

The Tornado configuration is partly based on configuration files and partly based on command line parameters. The location of configuration files in the file system is determined at startup based on the provided CLI options.

Tornado global options:

config-dir: The filesystem folder from which the Tornado configuration is read. The default path is /etc/tornado.

rules-dir: The folder where the Rules are saved in JSON format; this folder is relative to

config_dir. The default value is /rules.d/.

The check command does not have any specific options.

The daemon command has options specified in the tornado.daemon section of the tornado.toml configuration file.

In addition to these parameters, the following configuration entries are available in the file ‘config-dir’/tornado.toml:

logger:

level: The Logger level; valid values are trace, debug, info, warn, and error.

stdout: Determines whether the Logger should print to standard output. Valid values are

trueandfalse.file_output_path: A file path in the file system; if provided, the Logger will append any output to it.

tornado.daemon

thread_pool_config: The configuration of the thread pools bound to the internal queues. This entry is optional and should be rarely configured manually. For more details see the following Structure and Configuration: The Thread Pool Configuration section.

retry_strategy.retry_policy: The global retry policy for reprocessing failed actions. (Optional. Defaults to

MaxAttemptsif not provided). For more details see the following Structure and Configuration: Retry Strategy Configuration section.retry_strategy.backoff_policy: The global back-off policy for reprocessing failed actions. (Mandatory only if

retry_strategy.retry_policyis provided). For more details see the following Structure and Configuration: Retry Strategy Configuration section.event_tcp_socket_enabled: Whether to enable the TCP server for incoming events (Optional. Valid values are

trueandfalse. Defaults totrueif not provided).event_socket_ip: The IP address where Tornado will listen for incoming events (Mandatory if

event_tcp_socket_enabledis set to true).event_socket_port: The port where Tornado will listen for incoming events (Mandatory if

event_tcp_socket_enabledis set to true).nats_enabled: Whether to connect to the NATS server (Optional. Valid values are

trueandfalse. Defaults tofalseif not provided).nats_extractors: List of data extractors for incoming Nats messages (Optional). Valid extractors are:

FromSubject: using a regex, extracts the first matching group from the Nats subject and adds its value to the event.metadata scope using the specified key. Example:

nats_extractors = [ { type = "FromSubject", key = "tenant_id", regex = "^([^.]+)\\.tornado\\.events" } ]

nats.client.addresses: Array of addresses of the NATS nodes of a cluster. (Mandatory if

nats_enabledis set to true).nats.subject: The NATS Subject where tornado will subscribe and listen for incoming events (Mandatory if

nats_enabledis set to true).nats.client.auth.type: The type of authentication used to authenticate to NATS (Optional. Valid values are

NoneandTls. Defaults toNoneif not provided).nats.client.auth.certificate_path: The path to the client certificate that will be used for authenticating to NATS. (Mandatory if nats.client.auth.type is set to Tls).

nats.client.auth.private_key_path: The path to the client certificate private key that will be used for authenticating to NATS. (Mandatory if nats.client.auth.type is set to Tls).

nats.client.auth.path_to_root_certificate: The path to a root certificate (in

.pemformat) to trust in addition to system’s trust root. May be useful if the NATS server is not trusted by the system as default. (Optional, valid ifnats.client.auth.typeis set toTls).web_server_ip: The IP address where the Tornado Web Server will listen for HTTP requests. This is used, for example, by the monitoring endpoints.

web_server_port: The port where the Tornado Web Server will listen for HTTP requests.

web_max_json_payload_size: The max JSON size in bytes accepted by a Tornado endpoint. (Optional. Defaults to 67108860 (i.e. 64MB))

More information about the logger configuration is available in section Common Logger.

The default config-dir value can be customized at build time by specifying the environment variable TORNADO_CONFIG_DIR_DEFAULT. For example, this will build an executable that uses /my/custom/path as the default value:

TORNADO_CONFIG_DIR_DEFAULT=/my/custom/path cargo build

The command-specific options should always be used after the command name, while the global ones always precede it. An example of a full startup command is:

./tornado_engine

--config-dir=./tornado/engine/config \

daemon

In this case, the CLI executes the daemon command that starts the Engine with the configuration read from the ./tornado/engine/config directory. In addition, it will search for Filter and Rule definitions in the ./tornado/engine/config/rules.d directory in order to build the processing tree.

Structure and Configuration: The Thread Pool Configuration

Even if the default configuration should suit most of the use cases, in some particular situations it could be useful to customise the size of the internal queues used by Tornado. Tornado utilizes these queues to process incoming events and to dispatch triggered actions.

Tornado uses a dedicated thread pool per queue; the size of each queue is by default equal to the number of available logical CPUs. Consequently, in case of an action of type script, for example, Tornado will be able to run in parallel at max as many scripts as the number of CPUs.

This default behaviour can be overridden by providing a custom configuration for the thread pools size. This is achieved through the optional tornado_pool_config entry in the tornado.daemon section of the Tornado.toml configuration file.

Example of how to dynamically configure the thread pool based on the available CPUs:

[tornado.daemon]

thread_pool_config = {type = "CPU", factor = 1.0}

In this case, the size of the thread pool will be equal to

(number of available logical CPUs) multiplied by (factor) rounded to

the smallest integer greater than or equal to a number. If the resulting

value is less than 1, then 1 will be used be default.

For example, if there are 16 available CPUs, then:

{type: "CPU", factor: 0.5}=> thread pool size is 8{type: "CPU", factor: 2.0}=> thread pool size is 32

Example of how to statically configure the thread pool based:

[tornado.daemon]

thread_pool_config = {type = "Fixed", size = 20}

In this case, the size of the thread pool is statically fixed at 20. If the provided size is less than 1, then 1 will be used be default.

Structure and Configuration: Retry Strategy Configuration

Tornado allows the configuration of a global retry strategy to be applied when the execution of an Action fails.

A retry strategy is composed by:

retry policy: the policy that defines whether an action execution should be retried after an execution failure;

backoff policy: the policy that defines the sleep time between retries.

Valid values for the retry policy are:

{type = "MaxRetries", retries = 5}=> A predefined maximum amount of retry attempts. This is the default value with a retries set to 20.{type = "None"}=> No retries are performed.{type = "Infinite"}=> The operation will be retried an infinite number of times. This setting must be used with extreme caution as it could fill the entire memory buffer preventing Tornado from processing incoming events.

Valid values for the backoff policy are:

{type = "Exponential", ms = 1000, multiplier = 2 }: It increases the back off period for each retry attempt in a given set using the exponential function. The period to sleep on the first backoff is thems; themultiplieris instead used to calculate the next backoff interval from the last. This is the default configuration.{type = "None"}: No sleep time between retries. This is the default value.{type = "Fixed", ms = 1000 }: A fixed amount of milliseconds to sleep between each retry attempt.{type = "Variable", ms = [1000, 5000, 10000]}: The amount of milliseconds between two consecutive retry attempts.The time to wait after ‘i’ retries is specified in the vector at position ‘i’.

If the number of retries is bigger than the vector length, then the last value in the vector is used. For example:

ms = [111,222,333]-> It waits 111 ms after the first failure, 222 ms after the second failure and then 333 ms for all following failures.

Example of a complete Retry Strategy configuration:

[tornado.daemon]

retry_strategy.retry_policy = {type = "Infinite"}

retry_strategy.backoff_policy = {type = "Variable", ms = [1000, 5000, 10000]}

When not provided explicitly, the following default Retry Strategy is used:

[tornado.daemon]

retry_strategy.retry_policy = {type = "MaxRetries", retries = 20}

retry_strategy.backoff_policy = {type = "Exponential", ms = 1000, multiplier = 2 }

Structure and Configuration: Enable the TCP event socket

Enabling the TCP event socket server allows Tornado to receive events through a direct TCP connection.

The TCP event socket configuration entries are available in the

tornado.toml file. Example of the TCP socket section the

tornado.toml file:

# Whether to enable the TCP listener

event_tcp_socket_enabled = true