Tornado Actions

Overview

An essential part of creating a Tornado Rule is specifying the Actions to be carried out when an Event matches that Rule.

The Actions that can be defined and configured are listed in the Rule configuration under the dedicated ‘Actions’ tab. Each Action type is handled by a specific Tornado Executor, which triggers the associated executable instructions.

Action types can be grouped according to their purpose.

Monitoring Actions are carried out by the Icinga 2 Executor, the Smart Monitoring Check Result Executor and the Director Executor. These Actions trigger the actual monitoring instructions, e.g. setting process check results or creating hosts.

Logging Actions serve to log data, be it the Event received with an Action or the output of an Action. Actions of this type are carried out by the Logger Executor, the Archive Executor and the Elasticsearch Executor.

In addition to the Monitoring and Logging Actions mentioned above, you can customize the processing of an Action by running custom scripts with the Script Executor, or loop through a set of data and execute a list of Actions for each entry with the Foreach Tool.

Below you will find the full list of Action types that can be defined in a Rule’s ‘Actions’ tab.

Smart Monitoring Check Result

The SMART_MONITORING_CHECK_RESULT Action type allows you to set a specific check result for a monitored object, even when the Icinga 2 object for which you want to carry out the Action does not exist.

Moreover, the Smart Monitoring Check Result Executor responsible for carrying out the Action also ensures that no outdated process-check-result will overwrite newer check results already present in Icinga 2.

Note, however, that an Icinga agent cannot be created live with the Smart Monitoring Check Result Executor: an agent always requires an endpoint defined in the configuration, and the Icinga API does not support live creation of endpoints.

To ensure that outdated check results are not processed, the process-check-result action is executed by Icinga 2 with the parameters execution_start and execution_end, which are either inherited from the Action definition or set equal to the value of the created_ms property of the originating Tornado Event. The section Discarded Check Results explains how the Executor handles check results that Icinga 2 rejects as outdated.

The SMART_MONITORING_CHECK_RESULT action type should include the following elements in its payload:

A check_result: the basic data used to build the payload of the Icinga 2 process-check-result action. See the official Icinga 2 documentation for details.

{ "exit_status": "2", "plugin_output": "Output message" }

The check_result should contain all mandatory parameters expected by the Icinga API except the following ones, which are filled in automatically by the Executor: host, service and type.

A host: the data used to build the payload that will be sent to the Icinga 2 REST API to create the host.

{ "object_name": "myhost", "address": "127.0.0.1", "check_command": "hostalive", "vars": { "location": "Rome" } }

A service (optional): the data used to build the payload that will be sent to the Icinga 2 REST API to create the service.

{ "object_name": "myservice", "check_command": "ping" }
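The following Python sketch illustrates how the payload elements above, together with the timestamp rule for execution_start and execution_end, could be combined into the body of a process-check-result request. It is not the Executor's actual implementation: the function name is hypothetical, the Host case (type "Host" plus a host field) is an assumption modeled on the Service body visible in the Discarded Check Results log excerpt, and dividing created_ms by 1000 assumes that Icinga 2 expects timestamps in seconds.

```python
from typing import Any, Dict


def build_process_check_result(
    action_payload: Dict[str, Any], event_created_ms: int
) -> Dict[str, Any]:
    """Hypothetical helper: turn a SMART_MONITORING_CHECK_RESULT payload into
    the body of an Icinga 2 process-check-result request."""
    # Copy check_result so the original Action payload is not modified.
    body = dict(action_payload["check_result"])
    host_name = action_payload["host"]["object_name"]
    service = action_payload.get("service")

    # host/service/type are filled in here, not by the Action author.
    if service is not None:
        body["type"] = "Service"
        body["service"] = f"{host_name}!{service['object_name']}"
    else:
        # Assumption: a host check result is addressed by type "Host" + "host".
        body["type"] = "Host"
        body["host"] = host_name

    # Timestamps from the Action win; otherwise fall back to the Event's
    # created_ms, converted to seconds (assumption about Icinga 2 units).
    created_s = event_created_ms / 1000.0
    body.setdefault("execution_start", created_s)
    body.setdefault("execution_end", created_s)
    return body


payload = {
    "check_result": {"exit_status": "2", "plugin_output": "Output message"},
    "host": {"object_name": "myhost", "address": "127.0.0.1",
             "check_command": "hostalive", "vars": {"location": "Rome"}},
    "service": {"object_name": "myservice", "check_command": "ping"},
}
print(build_process_check_result(payload, 1651054222000))
```

Because setdefault only fills missing keys, timestamps set explicitly in the Action definition always take precedence over the Event's created_ms.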

Discarded Check Results

Some process-check-results may be discarded by Icinga 2 if more recent check results already exist for the target object.

In this situation the Executor does not retry the Action, but simply logs an error containing the tag DISCARDED_PROCESS_CHECK_RESULT in the configured Tornado Logger. See the Troubleshooting section for how to activate debug logging for Tornado.

The log message for a discarded process-check-result is enclosed in an ActionExecutionError and will look similar to the following excerpt:

SmartMonitoringExecutor - Process check result action failed with error ActionExecutionError {
    message: "Icinga2Executor - Icinga2 API returned an unrecoverable error. Response status: 500 Internal Server Error.
    Response body: {\"results\":[{\"code\":409.0,\"status\":\"Newer check result already present. Check result for 'my-host!my-service' was discarded.\"}]}",
    can_retry: false,
    code: None,
    data: {
        "payload":{"execution_end":1651054222.0,"execution_start":1651054222.0,"exit_status":0,"plugin_output":"Some process check result","service":"my-host!my-service","type":"Service"},
        "tags":["DISCARDED_PROCESS_CHECK_RESULT"],
        "url":"https://icinga2-master.neteyelocal:5665/v1/actions/process-check-result",
        "method":"POST"
    }
}.
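If you want to recognize these errors programmatically, for example in a script that inspects Icinga 2 API responses, a discarded check result can be detected from the response body alone. The Python sketch below is a hypothetical helper, not part of Tornado; it relies only on the 409 code visible in the excerpt above.

```python
import json
from typing import List

DISCARDED_TAG = "DISCARDED_PROCESS_CHECK_RESULT"


def discard_tags(response_body: str) -> List[str]:
    """Hypothetical helper: return [DISCARDED_TAG] if an Icinga 2 response
    body reports a discarded (outdated) check result, else []."""
    try:
        parsed = json.loads(response_body)
    except ValueError:
        return []  # not JSON: nothing to classify
    results = parsed.get("results") if isinstance(parsed, dict) else None
    if not isinstance(results, list):
        return []
    for result in results:
        # In the excerpt above, Icinga 2 reports the discard with code 409(.0).
        if isinstance(result, dict) and result.get("code") == 409:
            return [DISCARDED_TAG]
    return []
```

Since such errors are not retried (can_retry: false), tagging is enough to separate them from genuine failures when searching the logs.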

Director

Tornado Actions for creating hosts and services are available under the DIRECTOR Action type.

The following elements must be specified in an Action so that the Director Executor can extract data from it and prepare it to be sent to the Icinga Director REST API:

An action_name: create_host or create_service, which creates an object of type host or service in the Director, respectively. See the official Icinga 2 documentation for details.

An action_payload (optional): the payload of the Director action.

{ "object_type": "object", "object_name": "my_host_name", "address": "127.0.0.1", "check_command": "hostalive", "vars": { "location": "Bolzano" } }

An icinga2_live_creation (optional): a Boolean value that determines whether the specified Icinga object is also created in Icinga 2.
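For illustration, here is a Python sketch that assembles a DIRECTOR Action payload from the three elements above and rejects unknown action names. The helper name and its validation rules are assumptions for this example; the Director Executor performs its own checks.

```python
import json
from typing import Any, Dict, Optional

# action_name -> type of Director object it creates (from the list above)
DIRECTOR_ACTIONS = {"create_host": "host", "create_service": "service"}


def director_action_payload(
    action_name: str,
    action_payload: Optional[Dict[str, Any]] = None,
    icinga2_live_creation: Optional[bool] = None,
) -> Dict[str, Any]:
    """Hypothetical helper: build the payload of a DIRECTOR Action,
    omitting the optional fields that are not given."""
    if action_name not in DIRECTOR_ACTIONS:
        raise ValueError(f"unsupported action_name: {action_name!r}")
    payload: Dict[str, Any] = {"action_name": action_name}
    if action_payload is not None:
        payload["action_payload"] = action_payload
    if icinga2_live_creation is not None:
        if not isinstance(icinga2_live_creation, bool):
            raise TypeError("icinga2_live_creation must be a Boolean")
        payload["icinga2_live_creation"] = icinga2_live_creation
    return payload


host = {"object_type": "object", "object_name": "my_host_name",
        "address": "127.0.0.1", "check_command": "hostalive",
        "vars": {"location": "Bolzano"}}
print(json.dumps(director_action_payload("create_host", host, True), indent=2))
```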

Icinga 2

The ICINGA 2 Action type allows you to define one of the existing Icinga 2 actions.

For the Icinga 2 Executor to extract data from a Tornado Action and prepare it to be sent to the Icinga 2 API, the following parameters must be specified in the Action’s payload:

An icinga2_action_name: the Icinga 2 action to perform.

An icinga2_action_payload (optional): the payload of the action, which should contain all mandatory parameters expected by the specific Icinga 2 action.

{ "exit_status": "2", "filter": "host.name==\"${_variables.hostname}\"", "plugin_output": "${event.payload.plugin_output}", "type": "Host" }

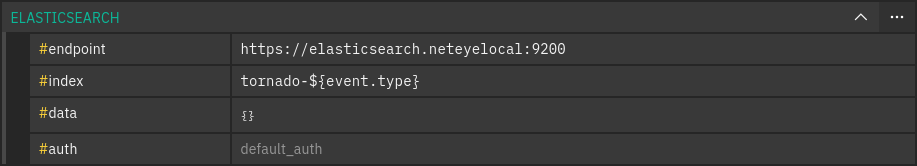
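Icinga 2 actions are exposed over the REST API as POST requests to /v1/actions/<name>, the same URL pattern that appears in the log excerpt of the Smart Monitoring Check Result section. The Python sketch below assumes that the Executor follows this mapping and that the ${...} placeholders have already been resolved by Tornado; the helper name is hypothetical, authentication and TLS settings are left out, and the request is only built, not sent.

```python
import json
import urllib.request
from typing import Any, Dict

# Host name taken from the log excerpt in the Smart Monitoring section.
ICINGA2_API = "https://icinga2-master.neteyelocal:5665"


def icinga2_request(action_payload: Dict[str, Any],
                    api_base: str = ICINGA2_API) -> urllib.request.Request:
    """Hypothetical helper: map an ICINGA 2 Action payload onto
    POST /v1/actions/<icinga2_action_name> (built, not sent)."""
    name = action_payload["icinga2_action_name"]
    body = action_payload.get("icinga2_action_payload", {})
    return urllib.request.Request(
        url=f"{api_base.rstrip('/')}/v1/actions/{name}",
        data=json.dumps(body).encode("utf-8"),
        method="POST",
        # The Icinga 2 API works with JSON request and response bodies.
        headers={"Accept": "application/json", "Content-Type": "application/json"},
    )


req = icinga2_request({
    "icinga2_action_name": "process-check-result",
    "icinga2_action_payload": {"exit_status": "2", "filter": 'host.name=="myhost"',
                               "plugin_output": "Output message", "type": "Host"},
})
print(req.get_method(), req.full_url)
```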

Elasticsearch

The ELASTICSEARCH Action type allows you to extract data from a Tornado Action and send it to Elasticsearch.

The Elasticsearch Executor behind the Action type expects a Tornado Action to include the following elements in its payload:

endpoint: the Elasticsearch endpoint that Tornado will call to create the Elasticsearch document (e.g. https://elasticsearch.neteyelocal:9200).

index: the name of the Elasticsearch index in which the document will be created. In the local Elasticsearch instance, Tornado can only index data into indices whose names match tornado-*.

data: the content of the document that will be sent to Elasticsearch.

{ "user" : "kimchy", "post_date" : "2009-11-15T14:12:12", "message" : "trying out Elasticsearch" }

auth: the authentication method. The Executor already has a default_auth configured in the file /neteye/shared/tornado/conf/elasticsearch_executor.toml; see Elasticsearch authentication below for details.

The Elasticsearch Executor creates a new document in the specified Elasticsearch index for each Action executed. If the specified index does not yet exist, the Action creates it.
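As a rough illustration, the Python sketch below turns an ELASTICSEARCH Action payload into a request against the Elasticsearch document API: POST /<index>/_doc creates a document with an auto-generated ID, and Elasticsearch creates a missing index on the first write unless automatic index creation has been disabled. That the Executor uses this particular endpoint is an assumption; the helper name is hypothetical, authentication is left out, and the request is only built, not sent.

```python
import fnmatch
import json
import urllib.request
from typing import Any, Dict


def elasticsearch_index_request(payload: Dict[str, Any],
                                local_instance: bool = True) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) the request that stores
    payload["data"] as a new document in payload["index"]."""
    index = payload["index"]
    # On the local instance, Tornado may only write to tornado-* indices.
    if local_instance and not fnmatch.fnmatchcase(index, "tornado-*"):
        raise ValueError(f"index {index!r} does not match tornado-*")
    # One new document per Action: POST /<index>/_doc with an auto-generated ID.
    url = f"{payload['endpoint'].rstrip('/')}/{index}/_doc"
    return urllib.request.Request(
        url=url,
        data=json.dumps(payload["data"]).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


req = elasticsearch_index_request({
    "endpoint": "https://elasticsearch.neteyelocal:9200",
    "index": "tornado-example",
    "data": {"user": "kimchy", "post_date": "2009-11-15T14:12:12",
             "message": "trying out Elasticsearch"},
})
print(req.get_method(), req.full_url)
```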

Elasticsearch authentication

When the Elasticsearch Action is created, a default authentication method, default_auth, is defined in the Action’s payload and will be used to authenticate to Elasticsearch.

However, the default method is available only if the NetEye Elastic Stack Feature Module is installed.

If the Feature Module has not been installed, or if the default authentication method should be overridden, you should:

Create a new certificate, signed by the Elasticsearch instance specified in the endpoint field or by its CA.

Copy the key, certificate and CA to /neteye/shared/tornado/conf/certs/.

Specify the paths to the new files in the auth field.

To use a specific authentication method, the Action should include the auth field with one of the following authentication types: None or PemCertificatePath.

With None authentication type the client connects to Elasticsearch without authentication:

{
    "type": "None"
}

PemCertificatePath authentication type means the client connects to Elasticsearch using the PEM certificates read from the local file system. When this method is used, the following information must be provided:

certificate_path: path to the public certificate accepted by Elasticsearch

private_key_path: path to the corresponding private key

ca_certificate_path: path to the CA certificate needed to verify the identity of the Elasticsearch server

{
    "type": "PemCertificatePath",
    "certificate_path": "/neteye/shared/tornado/conf/certs/acme-elasticsearch.crt.pem",
    "private_key_path": "/neteye/shared/tornado/conf/certs/private/acme-elasticsearch.key.pem",
    "ca_certificate_path": "/neteye/shared/tornado/conf/certs/acme-root-ca.crt"
}

If no default method is defined when an Action is created, every Action that does not specify an authentication method will fail.
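The fallback rule in the previous paragraph, together with the two authentication types described above, can be summarized in a short Python sketch. The function is hypothetical and only validates the auth object; it does not open a TLS connection. With PemCertificatePath, the three paths would then be handed to a TLS client, e.g. Python's ssl.SSLContext.load_cert_chain (certificate and key) and load_verify_locations (CA).

```python
from typing import Any, Dict, Optional

PEM_FIELDS = ("certificate_path", "private_key_path", "ca_certificate_path")


def resolve_es_auth(action_auth: Optional[Dict[str, Any]],
                    default_auth: Optional[Dict[str, Any]]) -> Dict[str, Any]:
    """Hypothetical helper: the Action's own auth field wins, otherwise
    default_auth is used; with neither, the Action fails."""
    auth = action_auth if action_auth is not None else default_auth
    if auth is None:
        raise ValueError("no auth in the Action and no default_auth defined")
    auth_type = auth.get("type")
    if auth_type == "None":
        return auth  # connect without authentication
    if auth_type == "PemCertificatePath":
        missing = [field for field in PEM_FIELDS if not auth.get(field)]
        if missing:
            raise ValueError(f"PemCertificatePath auth is missing: {', '.join(missing)}")
        return auth
    raise ValueError(f"unsupported auth type: {auth_type!r}")
```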

Archive

The ARCHIVE Action type allows you to write the Events from the received Tornado Actions to a file with the help of a dedicated Archive Executor.

Requirements and Limitations

The archive Executor can only write to locally mounted file systems. In addition, it needs read and write permissions on the folders and files specified in its configuration.

Configuration

The archive Executor has the following configuration options:

file_cache_size: The number of file descriptors to be cached. Caching improves overall performance by keeping files from being continuously opened and closed at each write.

file_cache_ttl_secs: The Time To Live of a cached file descriptor, in seconds. When it expires, the descriptor is removed from the cache.

base_path: A directory on the file system where all logs are written. Based on their type, rule Actions received from the Matcher can be logged in subdirectories of the base_path. However, the archive Executor will only allow files to be written inside this folder.

default_path: A default path, relative to the base_path, where all Actions that do not specify an archive_type in the payload are logged.

paths: A set of mappings from an archive_type to an archive_path, which is a subpath relative to the base_path. The archive_path can contain variables, specified with the syntax ${parameter_name}, which are replaced at runtime by the values in the Action’s payload.

The archive path serves to decouple the type from the actual subpath, allowing you to write Action rules without worrying about having to modify them if you later change the directory structure or destination paths.

As an example of how an archive_path is computed, suppose we have the following configuration:

base_path = "/tmp"
default_path = "/default/out.log"
file_cache_size = 10
file_cache_ttl_secs = 1

[paths]
"type_one" = "/dir_one/file.log"
"type_two" = "/dir_two/${hostname}/file.log"

and these three Rule Actions:

action_one: {"archive_type": "type_one", "event": "__the_incoming_event__"}

action_two: {"archive_type": "type_two", "event": "__the_incoming_event__", "hostname": "net-test"}

action_three: {"event": "__the_incoming_event__"}

then:

action_one will be archived in /tmp/dir_one/file.log

action_two will be archived in /tmp/dir_two/net-test/file.log

action_three will be archived in /tmp/default/out.log

The archive Executor expects an Action to include the following elements in the payload:

An event: the Event to be archived, included in the payload under the key event.

An archive type (optional): the archive type, specified in the payload under the key archive_type.

When an archive_type is not specified, the default_path is used (as in action_three). Otherwise, the Executor uses the archive_path in the paths configuration corresponding to the archive_type key (as in action_one and action_two).

When an archive_type is specified but there is no corresponding key in the mappings under the paths configuration, or it is not possible to resolve all path parameters, the Event is not archived and the archiver returns an error instead.
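The path resolution rules above can be sketched in Python as follows. The function and exception names are hypothetical; substituting parameters only in archive_path (not in default_path) and the explicit check that the resolved file stays inside base_path are assumptions of this sketch, the latter mirroring the statement that the archive Executor only writes inside base_path.

```python
import posixpath
import re
from typing import Any, Dict, Mapping

_PARAM = re.compile(r"\$\{([^}]+)\}")  # matches ${parameter_name}


class ArchiveError(Exception):
    """Raised when an Event cannot be archived."""


def resolve_archive_path(base_path: str, default_path: str,
                         paths: Mapping[str, str], payload: Dict[str, Any]) -> str:
    archive_type = payload.get("archive_type")
    if archive_type is None:
        sub_path = default_path  # no archive_type: use default_path
    elif archive_type not in paths:
        raise ArchiveError(f"no archive_path configured for {archive_type!r}")
    else:
        def substitute(match: "re.Match[str]") -> str:
            name = match.group(1)
            if name not in payload:
                raise ArchiveError(f"cannot resolve ${{{name}}}")
            return str(payload[name])

        sub_path = _PARAM.sub(substitute, paths[archive_type])

    # Configured paths start with "/", so join them as relative paths.
    base = posixpath.normpath(base_path)
    full_path = posixpath.normpath(posixpath.join(base, sub_path.lstrip("/")))
    # Refuse paths that escape base_path (e.g. "../" in a payload value).
    if posixpath.commonpath([base, full_path]) != base:
        raise ArchiveError(f"{full_path} is outside {base}")
    return full_path


paths = {"type_one": "/dir_one/file.log", "type_two": "/dir_two/${hostname}/file.log"}
for action in ({"archive_type": "type_one", "event": "__the_incoming_event__"},
               {"archive_type": "type_two", "event": "__the_incoming_event__", "hostname": "net-test"},
               {"event": "__the_incoming_event__"}):
    print(resolve_archive_path("/tmp", "/default/out.log", paths, action))
```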

The Event from the payload is written into the log file in JSON format, one event per line.
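The file_cache_size and file_cache_ttl_secs options and the one-JSON-document-per-line format can be combined into a small Python sketch of the writing side of the archive Executor. The class and function names are hypothetical, and least-recently-used eviction, measuring the TTL from the moment a file is opened, and creating missing directories are assumptions; the documentation does not specify them.

```python
import json
import os
import time
from collections import OrderedDict
from typing import IO, Any, Callable, Dict, Tuple


class FileCache:
    """Hypothetical cache of append-mode file handles, bounded by
    `size` entries (file_cache_size) and `ttl_secs` (file_cache_ttl_secs)."""

    def __init__(self, size: int, ttl_secs: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.size = size
        self.ttl_secs = ttl_secs
        self.clock = clock
        # path -> (handle, time opened); least recently used entries first
        self._entries: "OrderedDict[str, Tuple[IO[str], float]]" = OrderedDict()

    def __len__(self) -> int:
        return len(self._entries)

    def expire(self) -> None:
        """Close and drop handles whose TTL has elapsed since they were opened."""
        now = self.clock()
        for path, (handle, opened_at) in list(self._entries.items()):
            if now - opened_at >= self.ttl_secs:
                handle.close()
                del self._entries[path]

    def get(self, path: str) -> IO[str]:
        self.expire()
        if path in self._entries:
            self._entries.move_to_end(path)  # mark as most recently used
            return self._entries[path][0]
        directory = os.path.dirname(path)
        if directory:
            os.makedirs(directory, exist_ok=True)  # assumption: create missing dirs
        handle = open(path, "a", encoding="utf-8")
        self._entries[path] = (handle, self.clock())
        while len(self._entries) > self.size:  # evict least recently used
            _, (old_handle, _) = self._entries.popitem(last=False)
            old_handle.close()
        return handle

    def close_all(self) -> None:
        for handle, _ in self._entries.values():
            handle.close()
        self._entries.clear()


def archive_event(cache: FileCache, path: str, event: Dict[str, Any]) -> None:
    """Append the Event to the log file as one JSON document per line."""
    handle = cache.get(path)
    handle.write(json.dumps(event) + "\n")
    handle.flush()
```

In use, the path passed to archive_event would be the one resolved from base_path, default_path and paths; the injectable clock exists only to make the TTL behavior easy to test.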

Logger

For troubleshooting purposes the LOGGER Action can be used to log all events that match a specific rule. The Logger Executor behind this Action type logs received Actions: it simply outputs the whole Action body to the standard log at the info level.

Script

The SCRIPT Action type allows you to run custom shell scripts on a Unix-like system in order to customize the Action according to your needs.

In order to be correctly processed by the Script Executor, an Action should provide two entries in its payload: the path to a script on the local filesystem of the Executor process, and all the arguments to be passed to the script itself.

The script path is identified by the payload key script.

neteye# ./usr/share/scripts/my_script.sh

Make sure that the Executor has both read and execute permissions on that path.

The script arguments are identified by the payload key args; if present, they are passed as command line arguments when the script is executed.
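The behavior described above can be approximated in Python: check that the script is readable and executable, then run it with args as command-line arguments. Treating args as a JSON array, running without a shell, and the 30-second timeout are assumptions of this sketch, not documented Executor behavior. A relative script path such as ./usr/share/scripts/my_script.sh is resolved against the working directory of the running process.

```python
import os
import subprocess
from typing import Any, Dict


def run_script_action(payload: Dict[str, Any],
                      timeout: float = 30.0) -> subprocess.CompletedProcess:
    """Hypothetical sketch of a SCRIPT Action: run payload["script"] with
    payload["args"] (if present) as command-line arguments."""
    script = payload["script"]
    # The Executor needs both read and execute permission on the script.
    if not os.access(script, os.R_OK | os.X_OK):
        raise PermissionError(f"cannot read and execute {script}")
    # Assumption: args is a JSON array; each element becomes one argument.
    args = [str(arg) for arg in payload.get("args", [])]
    # No shell is involved, so each argument is passed verbatim.
    return subprocess.run([script, *args], capture_output=True, text=True,
                          timeout=timeout, check=False)
```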

Foreach Tool

The Foreach Tool loops through a set of data and executes a list of Actions for each entry: it extracts all values from an array of elements and injects each value into a list of Actions under the item key.

There are two mandatory configuration entries in its payload:

target: the array of elements, e.g. ${event.payload.list_of_objects}

actions: the array of Actions to execute

To access the item of the current iteration in the Actions inside the Foreach Tool, use the variable ${item}.
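The expansion step can be pictured with the following Python sketch, which produces one copy of the Actions for each entry of target and substitutes ${item} in every string. It assumes that target has already been resolved to a list. The function names, the Action shape used in the example, and the rule that a string consisting only of ${item} is replaced by the value itself (keeping its type) while embedded occurrences are converted to text are all assumptions for illustration.

```python
from typing import Any, Dict, List


def _substitute(value: Any, item: Any) -> Any:
    """Recursively replace ${item} inside strings, dicts and lists."""
    if isinstance(value, str):
        if value == "${item}":
            return item  # whole value: keep the item's original type
        return value.replace("${item}", str(item))
    if isinstance(value, dict):
        return {key: _substitute(val, item) for key, val in value.items()}
    if isinstance(value, list):
        return [_substitute(val, item) for val in value]
    return value


def expand_foreach(target: List[Any],
                   actions: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Return every Action in `actions` once per entry of `target`, in order."""
    return [_substitute(action, item) for item in target for action in actions]


# Hypothetical Action shape, for illustration only.
actions = [{"id": "logger", "payload": {"host": "${item}", "message": "checking ${item}"}}]
for expanded in expand_foreach(["web01", "db01"], actions):
    print(expanded)
```

Because _substitute builds new containers, the original actions list is left untouched and can be reused for the next Event.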